One of the most commonly asked questions during data science interviews is about overfitting and underfitting. An interviewer will probably bring up the topic, asking you to define the terms and explain how to deal with them.

In this article, we’ll address this issue so you aren’t caught unprepared when the topic comes up. We will also walk through examples of overfitting and underfitting so you can better understand the role these two concepts play when training your models.

What Are Overfitting and Underfitting?

Overfitting and underfitting occur while training our machine learning or deep learning models, and they are usually the most common causes of poor model performance. The two concepts are closely related: understanding one helps us understand the other, and vice versa.

Overfitting

Broadly speaking, overfitting means our training has focused so much on the particular training set that it has missed the point entirely. The model learns the quirks of that specific data instead of the general pattern, so it is not able to adapt to new data.

Underfitting

Underfitting, on the other hand, means the model has not captured the underlying logic of the data. It doesn’t know what to do with the task we’ve given it, so it performs poorly even on the data it was trained on and provides answers that are far from correct.

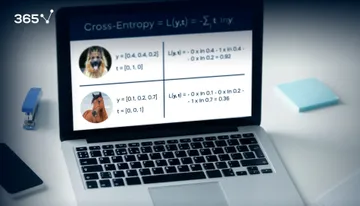
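In practice, the two problems are usually told apart by comparing the loss on the training data with the loss on held-out validation data. The function below is a minimal sketch of that rule of thumb. The name `diagnose_fit` and both thresholds (`acceptable_loss`, `gap_ratio`) are hypothetical, and you would need to tune them for a real problem.

```python
def diagnose_fit(train_loss: float, val_loss: float,
                 acceptable_loss: float, gap_ratio: float = 1.5) -> str:
    """Label a model's fit from its training and validation loss.

    Rule-of-thumb sketch: acceptable_loss and gap_ratio are hypothetical
    thresholds, not standard values.
    """
    if train_loss > acceptable_loss:
        # Poor even on data it has already seen: the model missed the underlying logic.
        return "underfitting"
    if val_loss > gap_ratio * train_loss:
        # Much worse on unseen data than on training data: it learned the training set itself.
        return "overfitting"
    return "reasonable fit"


print(diagnose_fit(train_loss=4.8, val_loss=5.1, acceptable_loss=1.0))   # underfitting
print(diagnose_fit(train_loss=0.05, val_loss=2.3, acceptable_loss=1.0))  # overfitting
print(diagnose_fit(train_loss=0.4, val_loss=0.5, acceptable_loss=1.0))   # reasonable fit
```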

Since the two main types of supervised learning are regression and classification, we will look at one example of each to show the difference between overfitting and underfitting.

Overfitting and Underfitting. A Regression Example

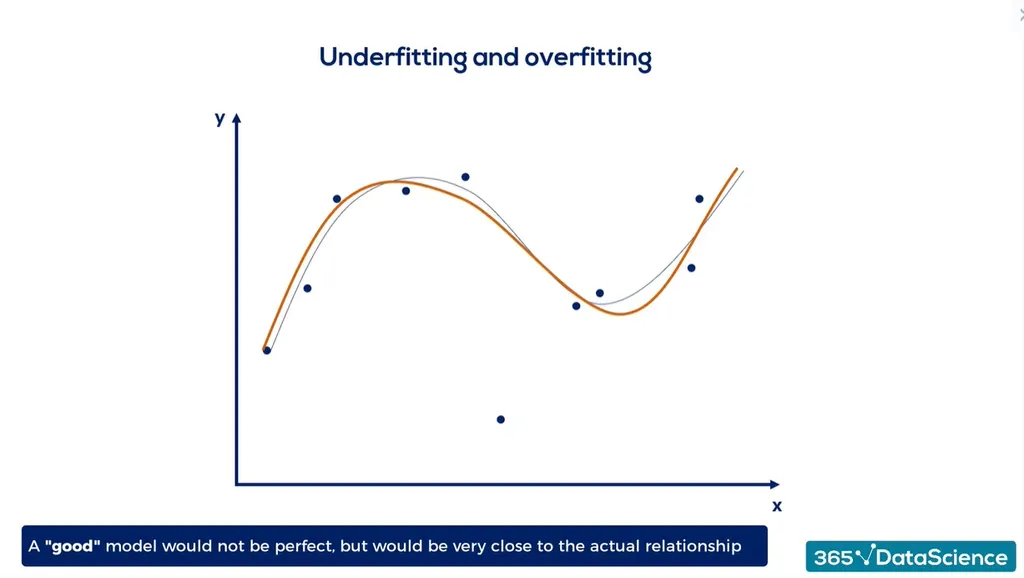

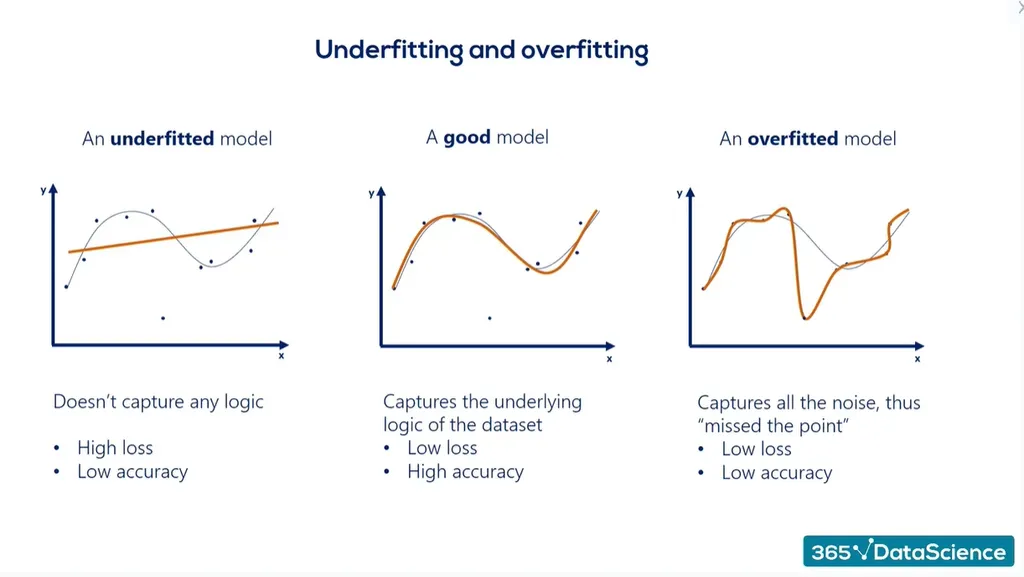

For starters, we use regression to find the relationship between two or more variables. A good algorithm would result in a model that, while not perfect, comes very close to the actual relationship:

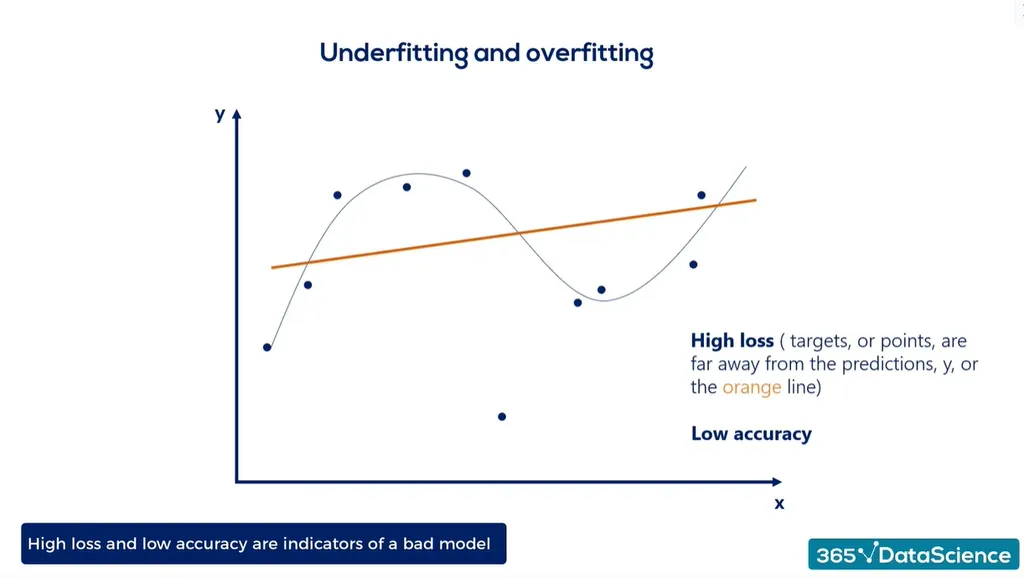

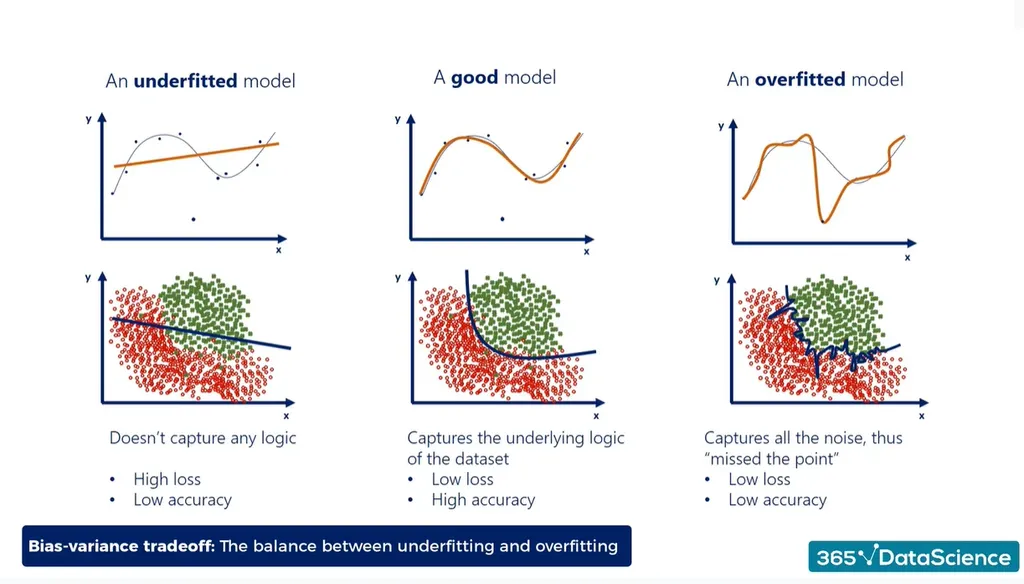

We can certainly say that a linear model would underfit here. It provides an answer, but it does not capture the underlying logic of the data; essentially, it doesn’t have strong predictive power.

In addition, underfitted models are clumsy. They have a high cost, meaning a high value of the loss function, and therefore low accuracy, which is not exactly what we’re looking for. In such cases, you quickly realize that either there is no relationship within the data or you need a more complex model.
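To make "high cost" concrete, here is a small sketch that uses mean squared error as the loss function. That choice is our assumption; the article doesn't name a specific loss. The sketch fits a straight line by ordinary least squares to hypothetical, noise-free data generated from y = x², then compares its loss with the loss of the true quadratic relationship.

```python
def mean_squared_error(y_true, y_pred):
    """Average squared difference between targets and predictions (a common regression loss)."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    return slope, mean_y - slope * mean_x


# Hypothetical data with a purely quadratic relationship, y = x^2 (no noise).
xs = [-3, -2, -1, 0, 1, 2, 3]
ys = [x ** 2 for x in xs]

slope, intercept = fit_line(xs, ys)
line_mse = mean_squared_error(ys, [slope * x + intercept for x in xs])
quad_mse = mean_squared_error(ys, [x ** 2 for x in xs])  # the true relationship itself
print(f"line: y = {slope:.1f}x + {intercept:.1f}, MSE = {line_mse:.1f}")  # line: y = 0.0x + 4.0, MSE = 12.0
print(f"quadratic MSE = {quad_mse:.1f}")                                # quadratic MSE = 0.0
```

Because the data are symmetric, the best straight line turns out to be flat and simply predicts the average target. It gives an answer, but it misses the logic of the data entirely.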

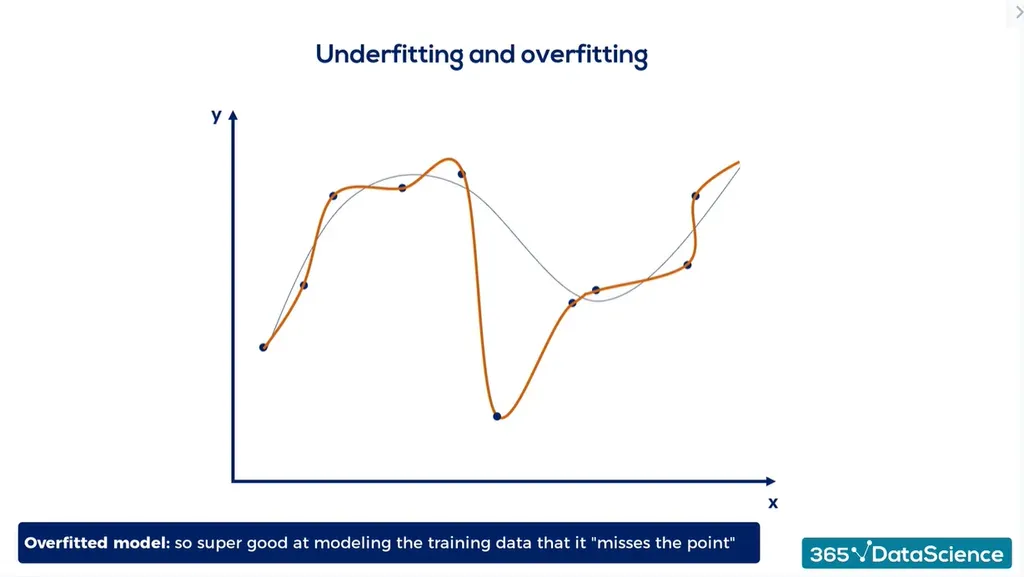

This, on the other hand, is an example of an overfitted model:

Overfitted models are so good at interpreting the training data that they fit or come very near each observation, molding themselves completely around the points. It seems like a desirable outcome at first glance, doesn’t it? The problem with overfitting, however, is that the model captures the random noise as well. In other words, it learns patterns that exist only in this particular sample and will not show up again in new data.
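The sketch below shows this trade-off on hypothetical data. Ten training points follow y = x² plus small, made-up noise values. A degree-9 polynomial, built here with Lagrange interpolation, passes through every training point, so its training error is zero and it beats even the true relationship. On new inputs between the training points, which are labelled with the noise-free true relationship to keep the comparison simple, the situation reverses, because the polynomial also fitted the noise.

```python
def lagrange_interpolate(xs, ys, x):
    """Evaluate the degree-(len(xs) - 1) polynomial that passes through every point (xs[i], ys[i])."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        basis = 1.0  # Lagrange basis polynomial: 1 at xs[i], 0 at every other training x
        for j, xj in enumerate(xs):
            if j != i:
                basis *= (x - xj) / (xi - xj)
        total += yi * basis
    return total


def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def true_relationship(x):
    return x ** 2


def overfit_model(x):
    return lagrange_interpolate(train_x, train_y, x)


# Hypothetical training data: y = x^2 plus fixed, made-up noise values.
train_x = list(range(10))
noise = [0.5, -0.3, 0.8, -0.6, 0.2, -0.9, 0.4, -0.1, 0.7, -0.5]
train_y = [true_relationship(x) + e for x, e in zip(train_x, noise)]

# New inputs halfway between the training points, labelled by the (noise-free) true relationship.
test_x = [x + 0.5 for x in range(9)]
test_y = [true_relationship(x) for x in test_x]

overfit_train = mean_squared_error(train_y, [overfit_model(x) for x in train_x])
overfit_test = mean_squared_error(test_y, [overfit_model(x) for x in test_x])
true_train = mean_squared_error(train_y, [true_relationship(x) for x in train_x])
true_test = mean_squared_error(test_y, [true_relationship(x) for x in test_x])

print(f"overfitted polynomial: train MSE = {overfit_train:.3f}, new-data MSE = {overfit_test:.3f}")
print(f"true relationship:     train MSE = {true_train:.3f}, new-data MSE = {true_test:.3f}")
```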

For instance, imagine you are trying to predict the euro-to-dollar exchange rate based on 50 common indicators. You train your model and, as a result, get a low cost and high accuracy. In fact, you believe that you can predict the exchange rate with 99.99% accuracy.

Confident in your machine learning skills, you start trading with real money. Unfortunately, most of the orders you place fail miserably. In the end, you lose all your savings because you trusted the amazing model so much that you went in blindly.

What actually happened is that your model probably overfit the data. It explained the training data so well that it missed the whole point of the task you gave it. Instead of finding the dependency between the euro and the dollar, you modeled the noise around the relevant data. In this case, that noise consists of the random decisions of the investors who participated in the market at that time.

But shouldn’t the computer be smarter than that?

Well, we explained the training data well, so our outputs were close to the targets. The loss function was low and the learning process worked like a charm in mathematical terms. However, once we go out of the training set and into a real-life situation, we see our model is actually quite bad.

Still, we can’t fully blame the machine for this. As the old programming adage goes, computers are never wrong; the mistake is on us. We must keep issues such as overfitting and underfitting in mind and address them with the appropriate remedies.

As a whole, overfitting can be quite tricky. You probably believe that you can easily spot such a problem now, but don’t be fooled by how simple it looks. Remember that there were 50 indicators in our example. Plotting them together with the exchange rate would require a 51-dimensional graph, while our senses work in only 3 dimensions.
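Here is a rough sketch of that trap under deliberately artificial assumptions. The 50 "indicators" are random numbers, and the target is pure noise, so there is genuinely nothing to learn. With 51 training days and 51 parameters (50 weights plus an intercept), an ordinary linear model can match every training target exactly by solving a linear system. The helper names and the random data are hypothetical, not taken from any real trading setup.

```python
import random


def solve_linear_system(a, b):
    """Solve a . w = b with Gaussian elimination and partial pivoting (a must be square and invertible)."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


rng = random.Random(0)
N_INDICATORS = 50


def random_day():
    # 50 hypothetical indicator values plus a constant 1.0 that acts as the intercept term.
    return [rng.gauss(0.0, 1.0) for _ in range(N_INDICATORS)] + [1.0]


# 51 training days for 51 parameters; the targets are pure noise, unrelated to the indicators.
train_X = [random_day() for _ in range(N_INDICATORS + 1)]
train_y = [rng.gauss(0.0, 1.0) for _ in train_X]
weights = solve_linear_system(train_X, train_y)


def predict(row):
    return sum(w * v for w, v in zip(weights, row))


# New, unseen days drawn the same way.
test_X = [random_day() for _ in range(200)]
test_y = [rng.gauss(0.0, 1.0) for _ in test_X]

train_mse = mean_squared_error(train_y, [predict(row) for row in train_X])
test_mse = mean_squared_error(test_y, [predict(row) for row in test_X])
print(f"training MSE: {train_mse:.2e}")  # essentially zero: a "perfect" fit
print(f"new-data MSE: {test_mse:.2f}")   # far worse: the model memorised noise
```

The training loss looks flawless, yet nothing was learned. With 50 inputs, no plot would have warned you.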

Underfitting and Overfitting. A Classification Example

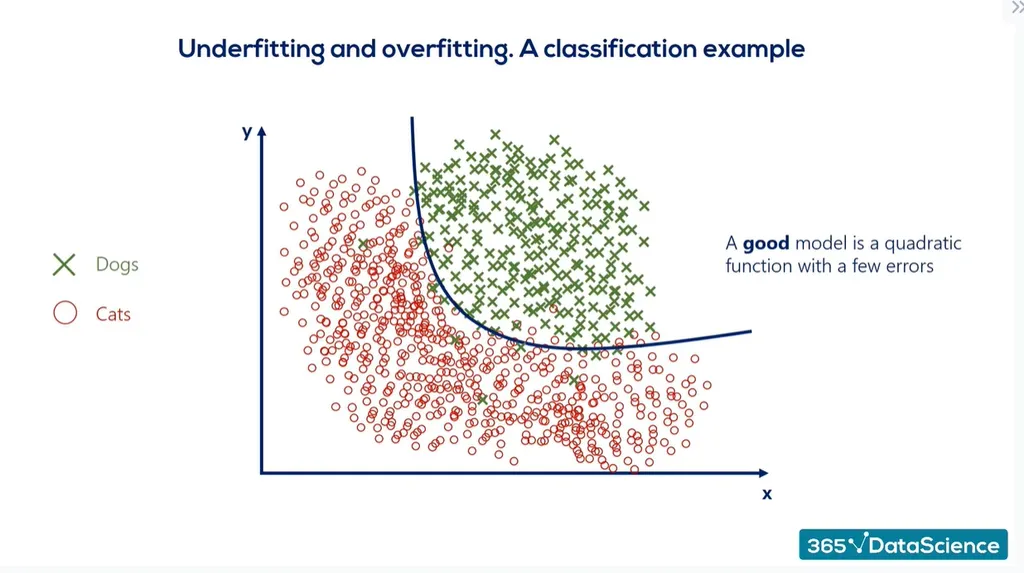

Suppose that there are two categories in our dataset: cats and dogs. A good model separates them with a boundary shaped like a quadratic function and makes only a few errors:

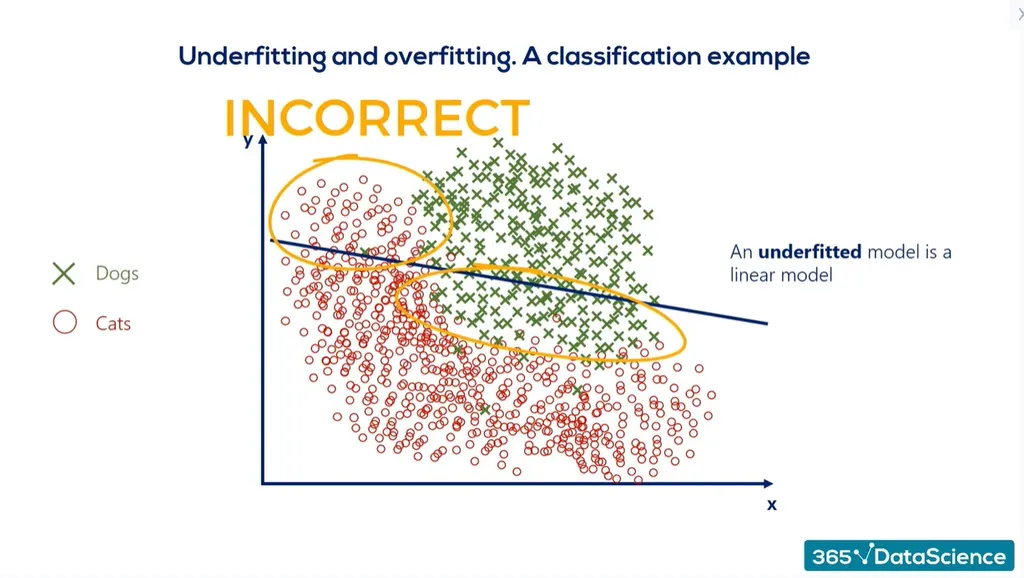

Following the same logic as in our previous example, what would be considered an underfitted model? Well, a linear model fits the description, as a straight-line boundary cannot follow the curved separation between the two classes. Often, a simple linear model underfits if the data is not transformed. With an underfitted model, only around 60% of the observations would be classified correctly:

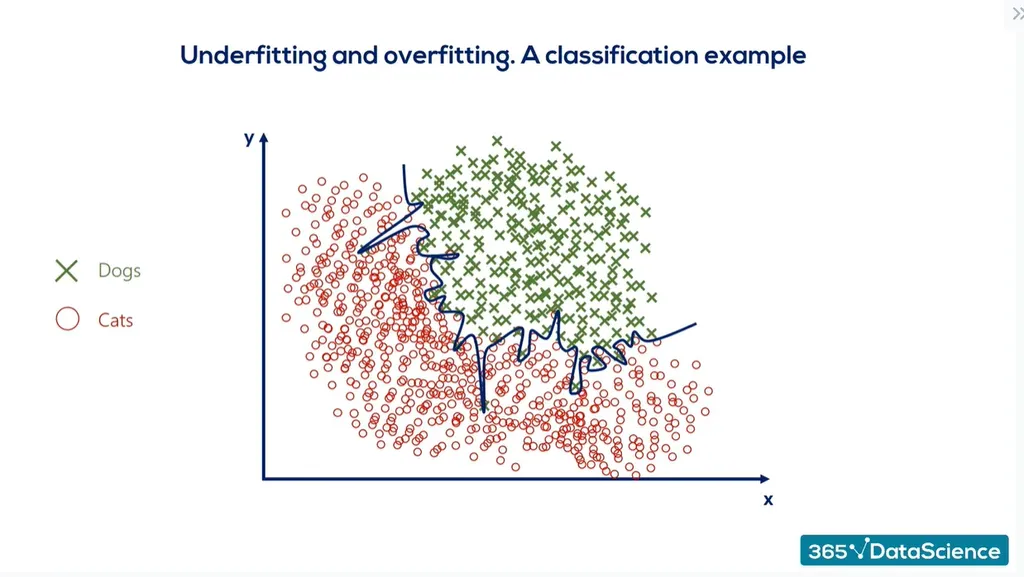
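The sketch below illustrates the point about transforming data. It uses hypothetical 2-D points, labelled "cat" (+1) above the parabola y = x² and "dog" (-1) below it. As the linear classifier it uses a perceptron, which we chose for illustration; the article doesn't specify a model. On the raw (x, y) features, the perceptron cannot classify every point correctly, because no straight line separates the classes. After x is replaced with x², the same linear model draws a boundary that is quadratic in the original space and fits the training points perfectly.

```python
def perceptron(features, labels, max_epochs=1000):
    """Train a classic perceptron; labels are +1/-1 and each feature row ends with a constant 1 (bias)."""
    w = [0.0] * len(features[0])
    for _ in range(max_epochs):
        mistakes = 0
        for f, label in zip(features, labels):
            if label * sum(wi * fi for wi, fi in zip(w, f)) <= 0:  # misclassified (or on the boundary)
                w = [wi + label * fi for wi, fi in zip(w, f)]
                mistakes += 1
        if mistakes == 0:  # a full pass with no mistakes: every training point is classified correctly
            break
    return w


def accuracy(w, features, labels):
    correct = sum(label * sum(wi * fi for wi, fi in zip(w, f)) > 0
                  for f, label in zip(features, labels))
    return correct / len(labels)


# Hypothetical 2-D points: +1 ("cat") above the parabola y = x^2, -1 ("dog") below it.
points = [(x, y) for x in range(-2, 3) for y in range(-1, 6) if y != x * x]
labels = [1 if y > x * x else -1 for x, y in points]

raw_features = [[x, y, 1] for x, y in points]          # straight-line boundary in (x, y)
squared_features = [[x * x, y, 1] for x, y in points]  # same linear model, but x transformed to x^2

raw_acc = accuracy(perceptron(raw_features, labels), raw_features, labels)
squared_acc = accuracy(perceptron(squared_features, labels), squared_features, labels)
print(f"linear model on raw features:         {raw_acc:.0%}")
print(f"linear model on transformed features: {squared_acc:.0%}")  # 100%
```

The raw-feature model can never reach 100%. The cat at (0, 1) lies exactly between the dogs at (-2, 1) and (2, 1), so any straight line that puts both dogs on one side puts the cat there too.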

But would an overfitted model classify the observations perfectly?

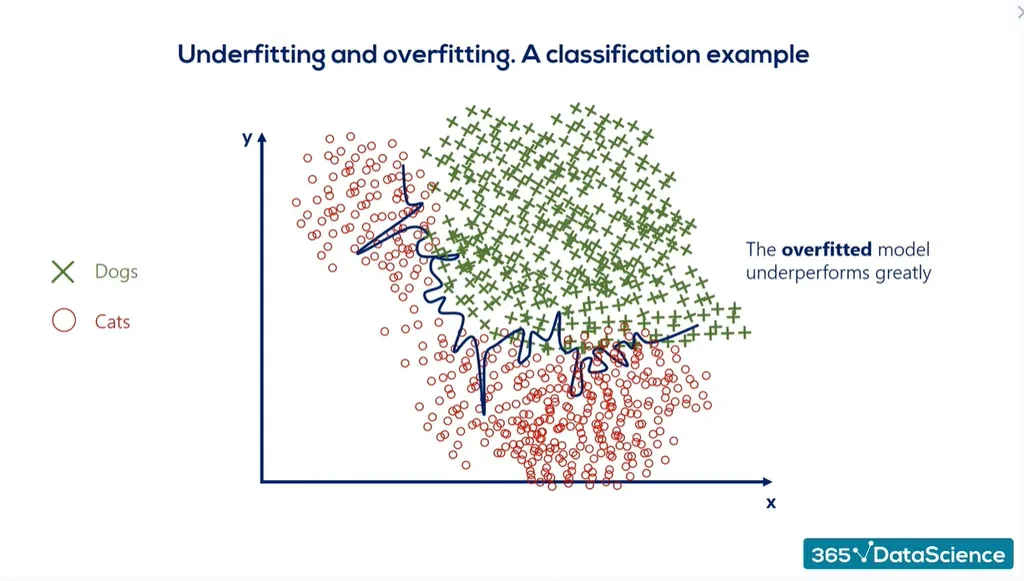

As we can see, it has correctly identified all the cat and dog photos in the dataset. But once we give it a different dataset, the model will perform poorly, even if the new data follows the same quadratic pattern.

We already mentioned how closely an overfitted model can wrap itself around the training data. That is exactly what happened here, and as a result the model completely misses the point of the training task. Overfitting prevents our model from adapting to new data, which limits its ability to extract useful information.
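A deliberately simplified, one-dimensional version of this failure is sketched below. It uses a 1-nearest-neighbour "memoriser" in place of the article's wiggly decision boundary. The feature values and the two mislabelled photos are hypothetical. The memoriser reproduces every training label, including the wrong ones, while a single learned threshold ignores the mislabelled points and handles new photos correctly.

```python
def nearest_neighbour(train_x, train_labels, x):
    """1-nearest-neighbour: copy the label of the closest training point (pure memorisation)."""
    closest = min(range(len(train_x)), key=lambda i: abs(train_x[i] - x))
    return train_labels[closest]


def fit_threshold(train_x, train_labels):
    """Pick the single cut-off t (predict "cat" when x > t) with the best training accuracy."""
    xs = sorted(train_x)
    candidates = [(a + b) / 2 for a, b in zip(xs, xs[1:])]

    def correct_count(t):
        return sum((x > t) == (label == "cat") for x, label in zip(train_x, train_labels))

    return max(candidates, key=correct_count)


def accuracy(predict, xs, labels):
    return sum(predict(x) == label for x, label in zip(xs, labels)) / len(labels)


# Hypothetical 1-D feature: the true rule is "cat" for x > 0, "dog" otherwise,
# but the photos at x = -3 and x = 4 were mislabelled (label noise).
train_x = [-5, -4, -3, -2, -1, 1, 2, 3, 4, 5]
train_labels = ["dog", "dog", "cat", "dog", "dog", "cat", "cat", "cat", "dog", "cat"]

# New photos, labelled by the true rule.
test_x = [-5.2, -3.1, -2.9, -1.2, 1.2, 3.9, 4.1, 5.2]
test_labels = ["cat" if x > 0 else "dog" for x in test_x]

t = fit_threshold(train_x, train_labels)


def memoriser(x):
    return nearest_neighbour(train_x, train_labels, x)


def threshold_model(x):
    return "cat" if x > t else "dog"


for name, model in [("memoriser (1-NN)", memoriser), (f"threshold at {t}", threshold_model)]:
    print(f"{name}: train = {accuracy(model, train_x, train_labels):.0%}, "
          f"new data = {accuracy(model, test_x, test_labels):.0%}")
# memoriser (1-NN): train = 100%, new data = 50%
# threshold at 0.0: train = 80%, new data = 100%
```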

What Is a Well-trained Model?

As we’ve already mentioned, a good model doesn’t have to be perfect, but it should still come close to the actual relationship within the data points.

Moreover, a well-trained model should ideally perform well not only on the training set but also on new data it has never seen, producing as few errors and as high an accuracy as possible. It’s a fine balance that lies somewhere between underfitting and overfitting, often called the bias-variance tradeoff: underfitted models suffer from high bias, while overfitted models suffer from high variance.
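For squared-error loss, the tradeoff can be written down exactly. Assume the data are generated as y = f(x) + ε, where the noise ε has mean zero and variance σ². Taking expectations over possible training sets and noise, the expected error of a learned model f̂ at a point x then splits into three parts. The notation here is ours, not the article's:

```latex
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
  = \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{variance}}
  + \underbrace{\sigma^2}_{\text{irreducible noise}}
```

The straight line in the regression example misses the curve on average, which shows up in the bias term. A model that molds itself around every training point changes drastically with each new training sample, which shows up in the variance term. Adding complexity usually trades the first for the second, and no model can remove the noise term.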

Overfitting Through the Eyes of Internet Culture

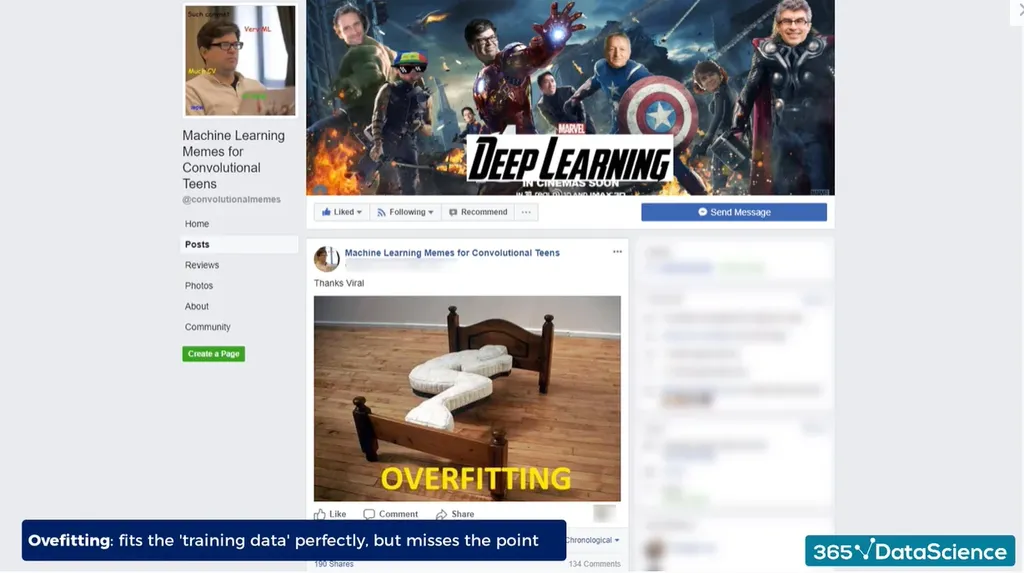

If you are a meme fan, there’s this Facebook page called Machine Learning Memes for Convolutional Teens. Some time ago, they posted a photo that beautifully exemplifies overfitting:

This bed might fit some people perfectly, but, on average, it completely misses the point of being a functioning piece of furniture.

As strange as it may seem, memes are an interesting way to check your knowledge: if you find a meme funny, you most probably understand the concept behind it.

Overfitting vs Underfitting: Next Steps

Overfitting and underfitting are commonplace issues that you are sure to encounter when training machine learning or deep learning models. It’s important to understand what these terms mean in order to spot them when they arise. Building a good model takes time and effort, which includes dealing with issues like these and performing balancing acts as you optimize your project. It also takes lots of study and practice to improve your skillset. Ready to dive deeper into both theory and practice and learn how to build well-trained models? Try our Deep Learning Course with TensorFlow 2.