Learn to evaluate AI systems beyond accuracy. This hands-on course covers practical metrics, real-world case studies, and responsible evaluation strategies for chatbots, RAG models, and beyond.

Accredited

Bringing real-world expertise from leading global companies

Athens University of Economics and Business

Description

Welcome to this practical, insight-driven course on evaluating AI agents, where metrics meet real-world impact.

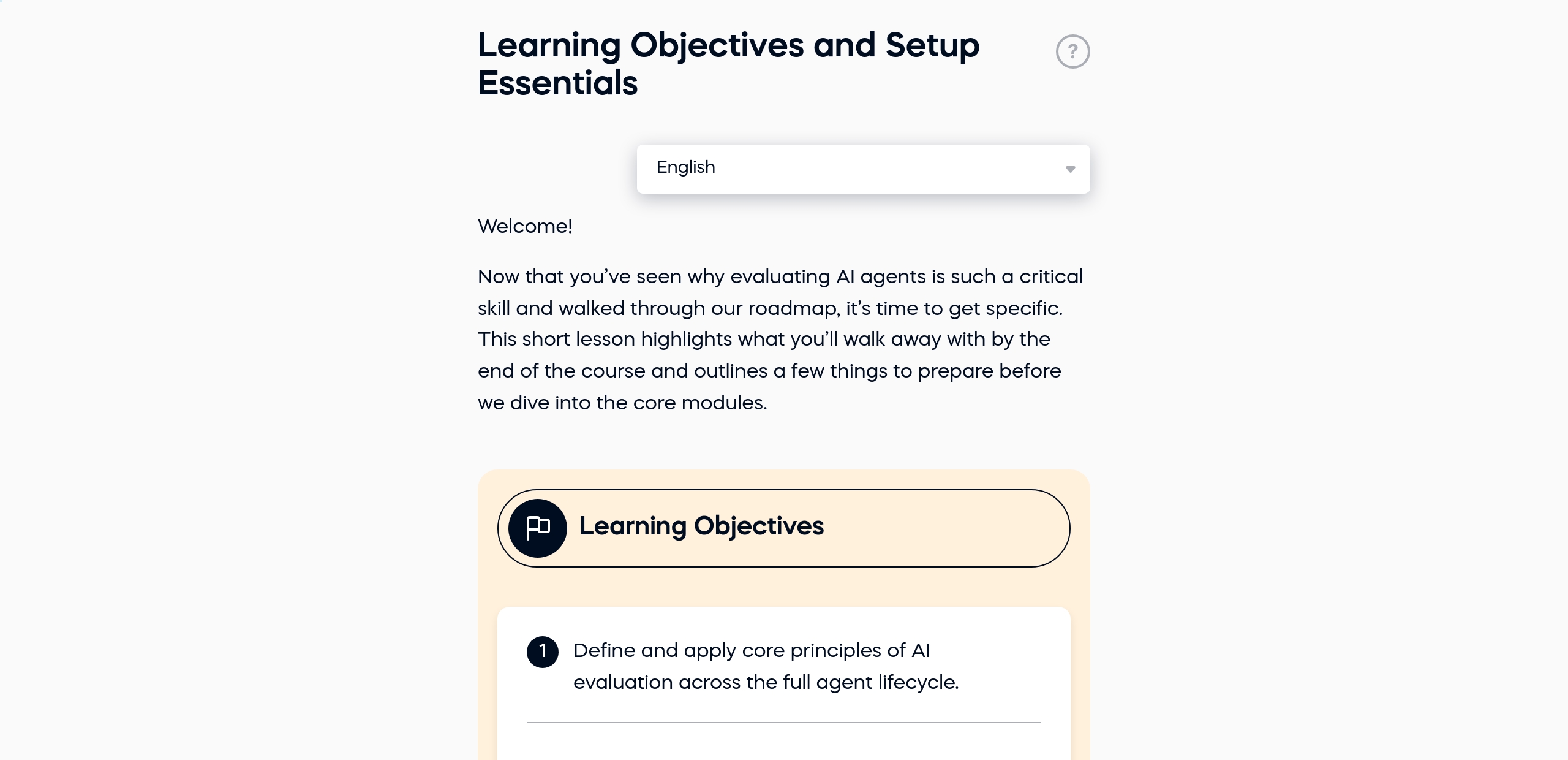

You’ll explore what it really means to measure AI performance, from basic accuracy and precision to advanced concepts like Goal Success Rate, Context Recall, and Human-in-the-Loop evaluation. We’ll break down both quantitative and qualitative approaches to assessing models in natural language processing, classification, retrieval-augmented generation (RAG), and more.

Through hands-on examples, industry-informed cases, and real-world failures, you’ll learn how to evaluate chatbots, recommendation systems, face detection tools, and lifelong learning agents. You'll also uncover how fairness, explainability, and user feedback shape truly responsible AI.

By the end, you'll have the tools and mindset to go beyond the leaderboard and design evaluations that actually matter in production. Whether you're an AI developer, product manager, or researcher, this course helps you confidently bridge metrics with meaning.

Let’s get started and redefine how we evaluate AI, one agent at a time.

Curriculum

Explore what AI evaluation really means. Learn core metrics like precision, recall, and F1, and why qualitative evaluation is just as critical.

Dive into key metrics for generative and classification-based agents. Understand industry benchmarks and practice calculating metrics in Python.
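
As a taste of the kind of metric calculation mentioned here, the following is a minimal sketch of precision, recall, and F1 for a binary classifier in plain Python. The function name `precision_recall_f1` and the sample labels are illustrative assumptions, not material taken from the course.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1 for one positive class.

    Hypothetical helper for illustration; not part of the course materials.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)

    # Guard against division by zero when a class is never predicted or never present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return precision, recall, f1


# Made-up labels: 3 true positives, 1 false positive, 1 false negative.
y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 0, 0, 1, 1, 1]
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(precision, recall, f1)  # 0.75 0.75 0.75
```

Accuracy alone would also report a reasonable-looking number on this data, but it hides whether the errors are missed positives or false alarms, which is why the three metrics are usually read together.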

Uncover the unique challenges of evaluating large language models (LLMs) and how to assess chatbot effectiveness and task performance.

Learn how to evaluate retrieval-augmented generation (RAG) systems from both retriever and generator perspectives, including coding exercises and human-in-the-loop strategies.
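
To illustrate the retriever side of such an evaluation, here is a minimal sketch of a simplified, ID-based context recall: the share of documents known to be relevant that the retriever actually returned. This is an assumed simplification for illustration; the course may define Context Recall differently (for example, with claim-level or LLM-judged scoring), and the function name and document IDs are hypothetical.

```python
def context_recall(retrieved_ids, relevant_ids):
    """Fraction of relevant documents that appear among the retrieved ones.

    Simplified ID-based definition, assumed here for illustration only.
    """
    relevant = set(relevant_ids)
    if not relevant:
        # No ground-truth documents: recall is undefined, report 0.0 by convention.
        return 0.0
    hits = relevant & set(retrieved_ids)
    return len(hits) / len(relevant)


# Made-up example: the retriever found 2 of the 4 relevant documents.
retrieved = ["d1", "d4", "d7"]
relevant = ["d1", "d2", "d7", "d9"]
score = context_recall(retrieved, relevant)
print(score)  # 0.5
```

A score like this only covers the retriever; the generator's output still needs its own checks, such as human review of whether the answer is faithful to the retrieved context.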

Focus on gathering and designing human feedback loops. Learn to build simple mechanisms and extract insights from qualitative data.

Evaluate AI systems responsibly. Learn how to identify bias, assess safety, and incorporate fairness using modern ethical frameworks and red teaming.

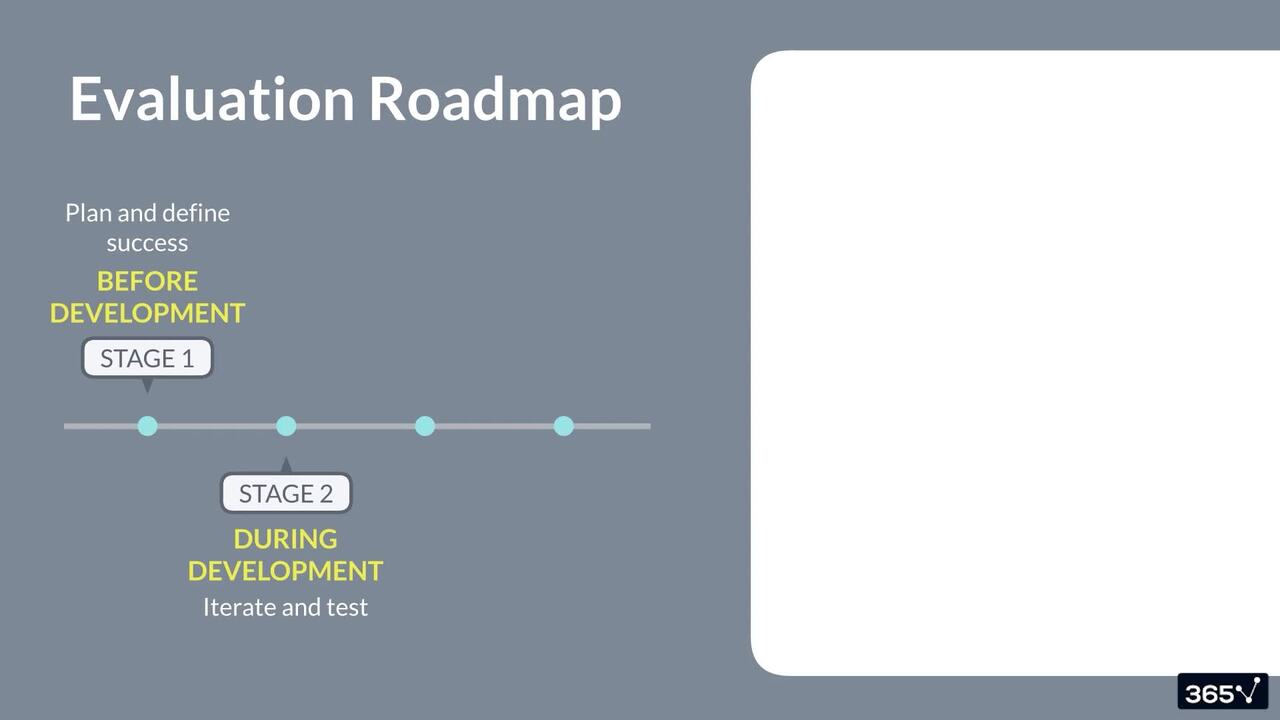

Build evaluation pipelines and explore how to improve systems post-launch. Look ahead at emerging methods for multi-agent systems and continuous evaluation.

Apply everything you’ve learned in a final project. Present your evaluation strategy, reflect on your journey, and get inspired for next steps in AI development.

Free lessons

1.1 What This Course Covers

3 min

1.2 Why Evaluating AI Agents is Critical & Our Course Roadmap

3 min

1.3 Learning Objectives and Setup Essentials

2 min

2.3 The AI Evaluation Lifecycle: From Idea to Impact

3 min

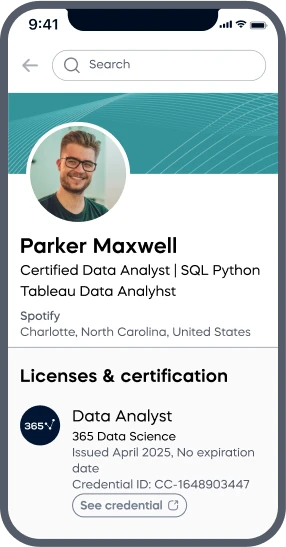

#1 most reviewed

9 in 10 of our graduates landed a new AI & data job

96% of our students recommend

ACCREDITED certificates

Craft a resume and LinkedIn profile you’re proud of, featuring certificates recognized by leading global institutions.

Earn CPE-accredited credentials that showcase your dedication, growth, and essential skills: the qualities employers value most.

Certificates are included with the Self-study learning plan.

How it WORKS