Intro to LLMs

Start your AI Engineer career journey: Master Transformer Architecture and the Essentials of Modern AI

What you get:

- 3 hours of content

- 19 Interactive exercises

- 7 Downloadable resources

- World-class instructor

- Closed captions

- Q&A support

- Future course updates

- Course exam

- Certificate of achievement

$99.00

Lifetime access

What You Learn

- Create your own AI-driven applications tailored to solve specific business problems

- Boost your career prospects by mastering AI Engineering skills

- Gain a solid understanding of key LLM concepts such as attention and self-attention, crucial for building intuitive AI systems

- Learn how to integrate OpenAI’s API and create a bridge between your products and powerful AI foundation models

- Get an introduction to LangChain, the framework that streamlines the creation of AI-driven apps

- Explore Hugging Face to access the cutting-edge AI Engineering tools it offers

Top Choice of Leading Companies Worldwide

Industry leaders and professionals globally rely on this top-rated course to enhance their skills.

Course Description

Learn for Free

1.1 Introduction to the course

2 min

1.2 Course Materials and Notebooks

1 min

1.3 What are LLMs?

3 min

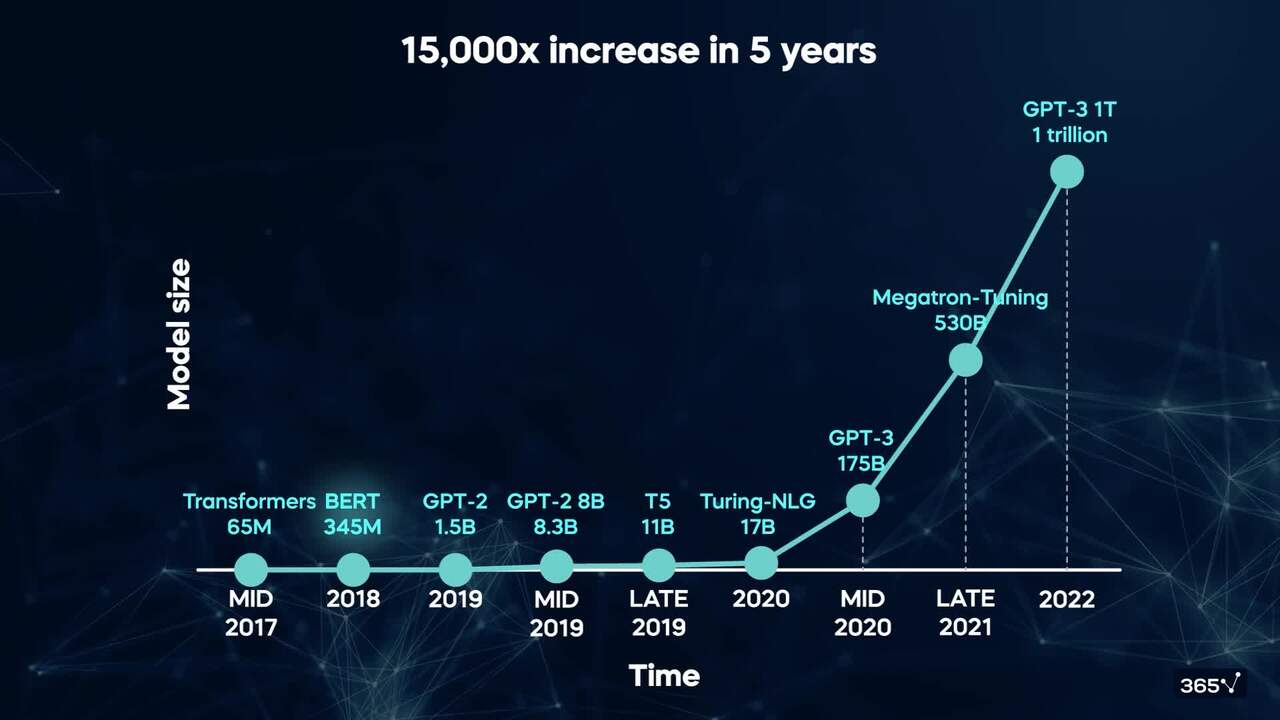

1.4 How large is an LLM?

3 min

1.5 General purpose models

1 min

1.6 Pre-training and fine tuning

3 min

Curriculum

- 2. The Transformer Architecture (9 lessons, 24 min)

  In this segment of the LLM course, we’ll break down the transformers' architecture and explain the mechanics behind encoders and decoders, embeddings, multi-headed attention, and the significance of a feed-forward layer. You’ll learn the advantages of transformers over RNNs.

  - Deep learning recap (3 min)
  - The problem with RNNs (4 min)
  - The solution: attention is all you need (3 min)
  - The transformer architecture (1 min)
  - Input embeddings (3 min)
  - Multi-headed attention (4 min)
  - Feed-forward layer (3 min)
  - Masked multihead attention (1 min)
  - Predicting the final outputs (2 min)

- 3. Getting started with GPT models (10 lessons, 31 min)

  We’ll examine GPT models closely and begin our practical part of the LLM tutorial. We’ll connect to OpenAI’s API and implement a simple chatbot with a personality: a poetic chatbot. I’ll also show you how to use LangChain to work with your own custom data, feeding information from the 365 web pages to our model.

  - What does GPT mean? (1 min)
  - The development of ChatGPT (2 min)
  - OpenAI API (3 min)
  - Generating text (2 min)
  - Customizing GPT Output (4 min)
  - Key word text summarization (4 min)
  - Coding a simple chatbot (6 min)
  - Introduction to Langchain in Python (1 min)
  - Langchain (3 min)
  - Adding custom data to our chatbot (5 min)

- 4. Hugging Face Transformers (6 lessons, 27 min)

  The Hugging Face package is open source and offers an alternative way to interact with LLMs. We’ll learn about pre-trained and customized tokenizers and how to integrate Hugging Face into PyTorch and TensorFlow deep learning workflows.

  - Hugging Face package (3 min)
  - The transformer pipeline (6 min)
  - Pre-trained tokenizers (9 min)
  - Special tokens (3 min)
  - Hugging Face and PyTorch, TensorFlow (5 min)
  - Saving and loading models (1 min)

- 5. Question and answer models with BERT (7 lessons, 32 min)

  This section of our Intro to Large Language Models course will explore BERT's architecture and contrast it with GPT models. It will delve into the workings of question-answering systems, both theoretically and practically, and examine variations of BERT, including the optimized RoBERTa and the smaller, lightweight DistilBERT.

  - GPT vs BERT (3 min)
  - BERT architecture (5 min)
  - Loading the model and tokenizer (2 min)
  - BERT embeddings (4 min)
  - Calculating the response (6 min)
  - Creating a QA bot (9 min)
  - BERT, RoBERTa, DistilBERT (3 min)

- 6. Text classification with XLNet (6 lessons, 26 min)

  In the final Intro to Large Language Models course section, we’ll look under the hood of XLNet (a novel LLM), which uses permutations of the input token order to train a model. We’ll also compare XLNet with our previously discussed models, BERT and GPT.

  - GPT vs BERT vs XLNET (4 min)
  - A note on the following lecture (1 min)
  - Preprocessing our data (10 min)
  - XLNet Embeddings (4 min)
  - Fine tuning XLNet (4 min)
  - Evaluating our model (3 min)

Course Requirements

- We highly recommend taking the Intro to Python course first

Who Should Take This Course?

Level of difficulty: Intermediate

- Aspiring data analysts, data scientists, data engineers, machine learning engineers, and AI engineers

Exams and Certification

A 365 Data Science Course Certificate is an excellent addition to your LinkedIn profile—demonstrating your expertise and willingness to go the extra mile to accomplish your goals.

Meet Your Instructor

Guided by a comprehensive social science and statistics background, Lauren's data science career has taken her through several pivotal roles—from creating custom NLP solutions for non-profits in Nepal to providing insights for BBC Sport and the 2020 Olympics. Lauren has spoken at several conferences on how NLP can benefit those in developing countries and advocates for ethical and open data science. She aims to empower individuals and organizations to make confident, data-driven decisions and to ensure AI is fair and accessible for all.

Our top-rated courses are trusted by businesses worldwide.