Machine Learning with K-Nearest Neighbors

Master K-Nearest Neighbors using Python’s scikit-learn library: from theoretical foundations to practical applications

What you get:

- 2 hours of content

- 3 Interactive exercises

- 8 Downloadable resources

- World-class instructor

- Closed captions

- Q&A support

- Future course updates

- Course exam

- Certificate of achievement

$99.00

Lifetime access

What You Learn

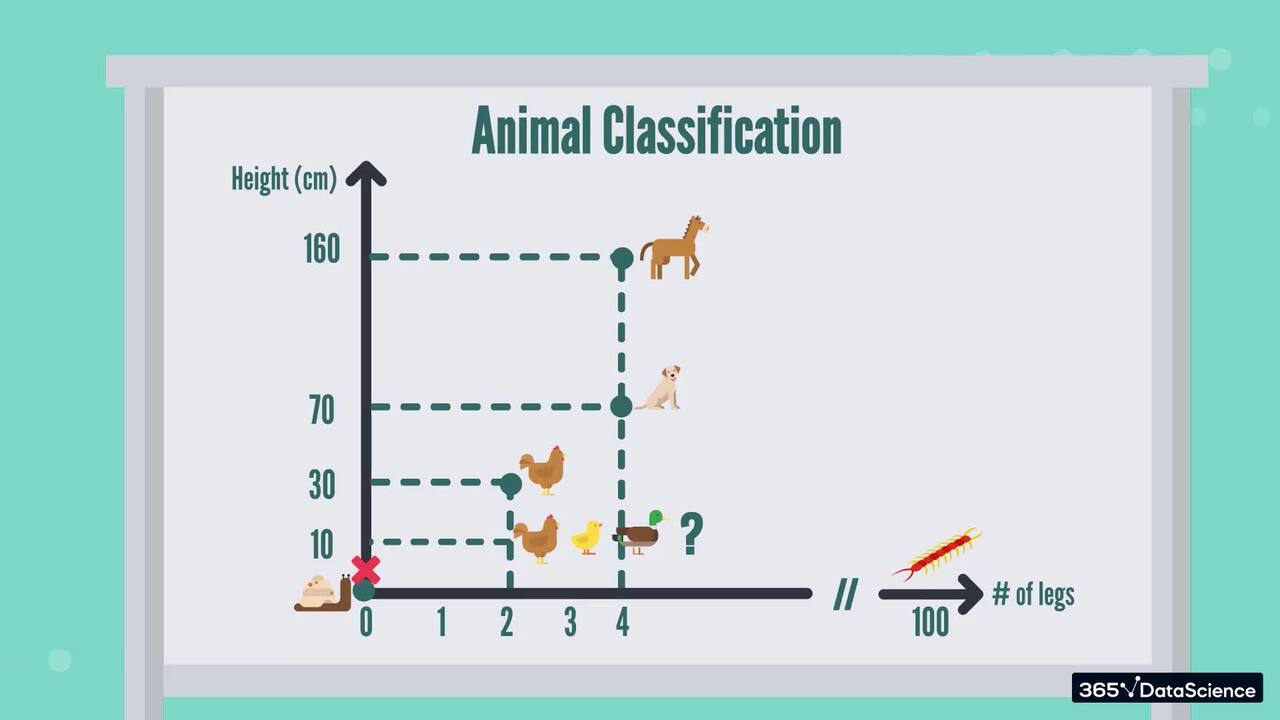

- Fully grasp the inner workings of the KNN algorithm as well as its practical application

- Get acquainted with different distance metrics

- Learn how to generate random datasets for practice

- Understand the pros and cons of the KNN algorithm to make informed decisions in model selection

- Develop the skills to independently plan, execute, and deliver a complete ML project from start to finish
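As a rough illustration of the distance metrics mentioned above, here is a minimal sketch using only the standard library. The page does not say which metrics the course covers, so the choice of Euclidean, Manhattan, and Minkowski distances, and the function names, are assumptions.

```python
import math


def euclidean(p, q):
    """Straight-line distance between two points of equal dimension."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def manhattan(p, q):
    """Sum of absolute coordinate differences (city-block distance)."""
    return sum(abs(a - b) for a, b in zip(p, q))


def minkowski(p, q, r=2):
    """Generalization: r=1 gives Manhattan, r=2 gives Euclidean."""
    return sum(abs(a - b) ** r for a, b in zip(p, q)) ** (1 / r)


p, q = (0.0, 0.0), (3.0, 4.0)
print(euclidean(p, q))  # 5.0
print(manhattan(p, q))  # 7.0
```

The metric matters for KNN because it decides which training points count as "nearest"; the same query point can have different neighbors under different metrics.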

Top Choice of Leading Companies Worldwide

Industry leaders and professionals globally rely on this top-rated course to enhance their skills.

Course Description

Learn for Free

1.1 What does the course cover?

7 min

1.2 Motivation

2 min

1.4 Math Prerequisites: Distance Metrics

4 min

2.1 Setting up the Environment

1 min

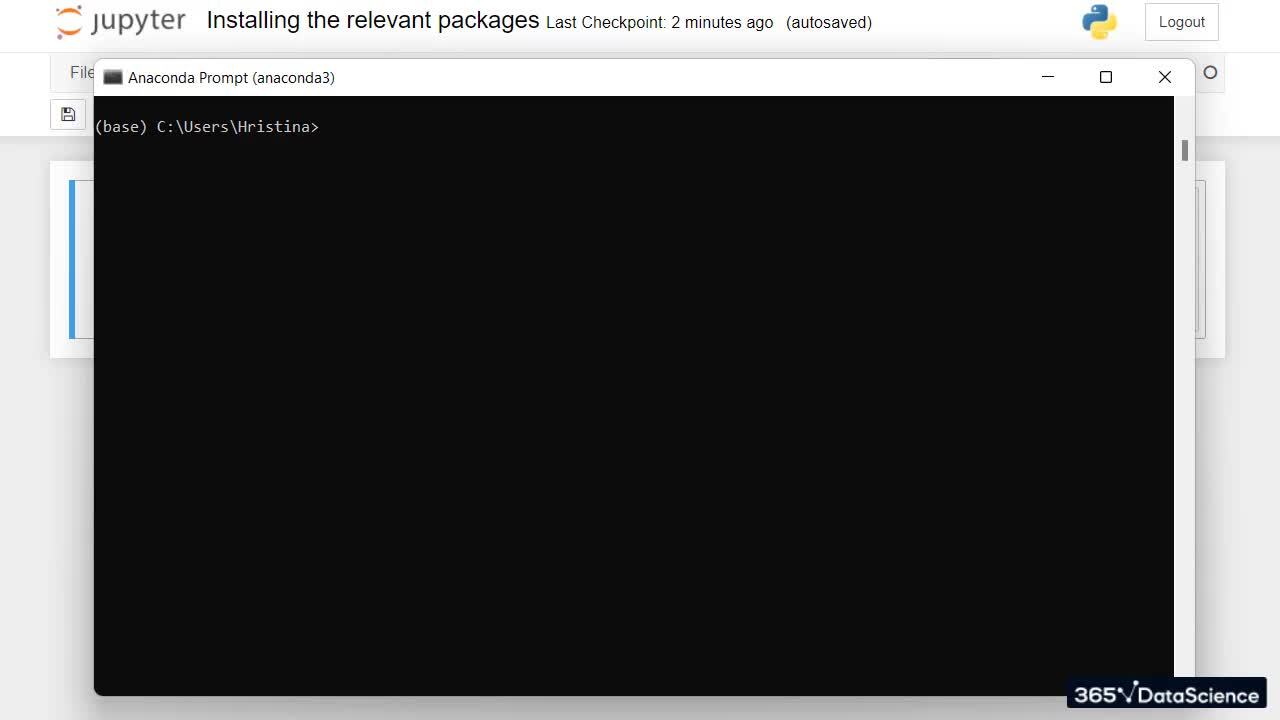

2.2 Installing the Relevant Packages

4 min

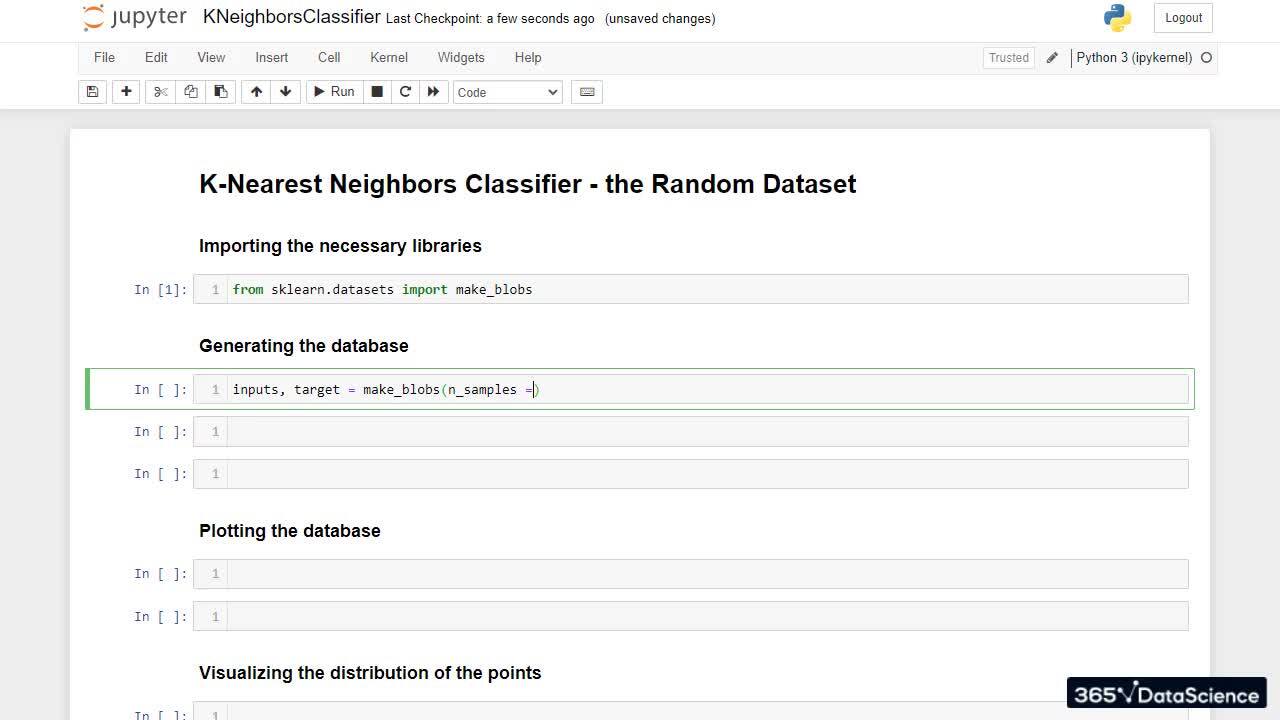

3.1 Random Dataset: Generating the Dataset

3 min
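For a sense of what generating a random practice dataset can look like, here is a minimal sketch with NumPy. It is not the course's own code: the function name, the class centers, the spread, and the number of points per class are all hypothetical choices made for illustration.

```python
import numpy as np


def make_three_class_points(n_per_class=50, seed=0):
    """Draw 2-D points around three centers and label them 0, 1, 2."""
    rng = np.random.default_rng(seed)
    # Hypothetical class centers; any three well-separated points would do.
    centers = np.array([[0.0, 0.0], [4.0, 0.0], [2.0, 3.0]])
    # One Gaussian cloud per center, stacked in class order.
    X = np.vstack(
        [rng.normal(loc=c, scale=1.0, size=(n_per_class, 2)) for c in centers]
    )
    # Labels follow the same order as the stacked clouds.
    y = np.repeat(np.arange(len(centers)), n_per_class)
    return X, y


X, y = make_three_class_points()
print(X.shape, y.shape)  # (150, 2) (150,)
```

Fixing the seed keeps the dataset reproducible, which makes it easier to compare models trained on it later.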

Curriculum

- 2. Setting up the Environment (2 lessons, 5 min)

  In advance of the hands-on part of the course, this section guides you through the installation process of relevant Python packages.

  - Setting up the Environment (1 min)
  - Installing the Relevant Packages (4 min)

- 3. KNN Classifier – Practical Example (8 lessons, 37 min)

  To apply your skills in practice, you will first learn how to generate a random set of points, distribute them into 3 classes, and place them on the coordinate system. We will then use this dataset to train and test a KNN classification algorithm with the help of Python’s scikit-learn library. We will look at some edge cases that can arise during the classification process and discover how to handle them. Next, we will guide you through the process of building the so-called decision regions, which are a great way of visualizing the performance of your model. Finally, we will find out how to choose the best model parameters using a technique called ‘grid search’.

  - Random Dataset: Generating the Dataset (3 min)
  - Random Dataset: Visualizing the Dataset (4 min)
  - Random Dataset: Classification (7 min)
  - Random Dataset: How to Break a Tie (3 min)
  - Random Dataset: Decision Regions (6 min)
  - Random Dataset: Choosing the Best K-value (5 min)
  - Random Dataset: Grid Search (5 min)
  - Random Dataset: Model Performance (4 min)

- 4. KNN Regressor (3 lessons, 19 min)

  Continuing the practical part of the course, we will dive into solving regression tasks using the K-Nearest Neighbors method. As in the previous section, we will tackle this problem by generating 2 random datasets. One represents a linear problem, while the other is non-linear. We will apply a linear (parametric) model and a KNN (non-parametric) model to both datasets and discuss which one performs better.

  - Theory with a Practical Example (8 min)
  - KNN vs Linear Regression: A Linear Problem (7 min)
  - KNN vs Linear Regression: A Non-linear Problem (4 min)

- 5. Pros and Cons of the KNN Algorithm (1 lesson, 7 min)

  In this final section of the course, the pros and cons of the KNN algorithm are discussed at length. We will study this method’s limitations, together with its strengths.

  - Pros and Cons (7 min)
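The classification and regression workflows described in the curriculum can be sketched roughly as follows with scikit-learn. This is an illustration under stated assumptions, not the course's own code: the datasets (`make_blobs` clusters and a noisy sine curve), the parameter grid, the split sizes, and both function names are hypothetical choices.

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.neighbors import KNeighborsClassifier, KNeighborsRegressor


def tune_knn_classifier(seed=0):
    """Grid-search K and the weighting scheme on a random 3-class dataset."""
    # Assumed dataset: three Gaussian clusters in 2-D.
    X, y = make_blobs(n_samples=300, centers=3, cluster_std=1.5, random_state=seed)
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.25, stratify=y, random_state=seed
    )
    # Assumed grid: odd K values and both built-in weighting schemes.
    param_grid = {
        "n_neighbors": list(range(1, 16, 2)),
        "weights": ["uniform", "distance"],
    }
    search = GridSearchCV(KNeighborsClassifier(), param_grid, cv=5)
    search.fit(X_train, y_train)
    # score() evaluates the refitted best estimator on held-out data.
    return search.best_params_, search.score(X_test, y_test)


def compare_regressors(seed=0):
    """Fit a linear model and a KNN regressor to a non-linear problem."""
    rng = np.random.default_rng(seed)
    # Assumed non-linear dataset: a sine curve with Gaussian noise.
    X = rng.uniform(0.0, 6.0, size=(200, 1))
    y = np.sin(X[:, 0]) + rng.normal(scale=0.1, size=200)
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.25, random_state=seed
    )
    linear = LinearRegression().fit(X_train, y_train)
    knn = KNeighborsRegressor(n_neighbors=5).fit(X_train, y_train)
    # Both score() calls return R^2 on the held-out test set.
    return linear.score(X_test, y_test), knn.score(X_test, y_test)


best_params, accuracy = tune_knn_classifier()
print("best parameters:", best_params, "test accuracy:", accuracy)

linear_r2, knn_r2 = compare_regressors()
print("linear R^2:", linear_r2, "KNN R^2:", knn_r2)
```

On a curved target like this one, the straight-line fit cannot follow the shape while KNN can, which is the kind of contrast the regression section sets out to examine. On a genuinely linear dataset the comparison may well go the other way.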

Course Requirements

- You need to complete an introduction to Python before taking this course

- It is highly recommended to take the Machine Learning in Python course first

- You need to have Jupyter Notebook up and running

Who Should Take This Course?

Level of difficulty: Intermediate

- Aspiring data scientists and ML engineers

Exams and Certification

A 365 Data Science Course Certificate is an excellent addition to your LinkedIn profile, demonstrating your expertise and willingness to go the extra mile to accomplish your goals.

Meet Your Instructor

Hristina Hristova is a Theoretical Physicist with experience in mathematics, physics, programming, and the creation of educational content. For several years, she has been tutoring physics and mathematics students online, following educational programs such as The IB Diploma, Cambridge IGCSE, and Cambridge AS & A Level, among many others. Hristina’s strong qualifications and adaptive teaching style have helped many students pass their exams while also enjoying the learning process.

365 Data Science Is Featured at

Our top-rated courses are trusted by businesses worldwide.