Tech Infrastructure


The composite hardware, software, network resources, and services required for the existence, operation, and management of an enterprise IT environment.

Real-Time Data

Information that is delivered immediately after collection, without delay.

Geological Data

Data related to the physical properties and location of rocks and minerals found within the Earth.

Data Analysis

The process of inspecting, cleansing, transforming, and modeling data to discover useful information and inform conclusions.

Data Mining

The analysis of large datasets in an attempt to discover meaningful insights and dependencies within them.

Text Analytics

Analyses not only the content but also the sentiment and emotions of a text.

Categorization

Organizing texts into categories based on their characteristics.

Clustering

Grouping texts together based on their similarity (for example, by topic or genre), without using predefined categories.
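A minimal k-means sketch of clustering, assuming each text has already been turned into numeric features (here, hypothetical counts of sports-related and finance-related words). k-means is only one of many clustering algorithms, and the function name `kmeans` and the data are illustrative.

```python
import math

def kmeans(points, k, iterations=100):
    """Plain k-means: assign each point to its nearest centroid, then move centroids to the mean."""
    centroids = [list(p) for p in points[:k]]  # simple deterministic initialisation
    labels = [0] * len(points)
    for _ in range(iterations):
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append([sum(xs) / len(members) for xs in zip(*members)])
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # assignments have stabilised
        centroids = new_centroids
    return labels

# Hypothetical features per document: [sports-word count, finance-word count]
docs = [(9, 1), (1, 8), (8, 2), (0, 9), (7, 0), (2, 7)]
print(kmeans(docs, k=2))  # [0, 1, 0, 1, 0, 1]
```

No labels are supplied: the sports-heavy and finance-heavy documents end up in separate groups purely because of their similarity.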

Concept Extraction

Extracting the concepts or passages of a text that are most relevant to the task at hand.

Sentiment Assessment

Categorizing whether an opinion (in text form) is positive, negative, or neutral.
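A minimal lexicon-based sketch of sentiment assessment, assuming a tiny hypothetical word list. Real systems use much larger lexicons or trained models and handle negation ("not good"), which this sketch ignores.

```python
# Hypothetical mini-lexicon; real sentiment lexicons contain thousands of scored words.
POSITIVE = {"good", "great", "excellent", "love", "happy"}
NEGATIVE = {"bad", "poor", "terrible", "hate", "slow"}

def classify_sentiment(text: str) -> str:
    """Label text as positive, negative, or neutral by counting lexicon hits."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(classify_sentiment("The service was great and the staff were excellent!"))  # positive
print(classify_sentiment("Delivery was slow and the packaging was bad."))  # negative
print(classify_sentiment("The parcel arrived on Tuesday."))  # neutral
```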

Image Analytics

The analysis of information contained in photographs, medical images, or graphics.

Video Analytics

The analysis of information contained in video footage.

Voice Analytics

Obtains information from audio recordings of spoken content.

Predictive Analytics

The use of data, statistical algorithms, and machine learning techniques to identify the likelihood of future outcomes based on historical data.

Scenario Analytics (Horizon/Total Return Analytics)

An analytical framework that consists of building a model that shows what a final output would look like in several different cases.

It can be used to assess the possible realizations of different strategic choices or to create a combination of scenarios.
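A small sketch of scenario analysis: one model (here a hypothetical profit formula) is evaluated under several sets of assumptions. All figures, scenario names, and the `profit` function are invented for illustration.

```python
# Hypothetical scenario assumptions for a product launch (not from any real data).
SCENARIOS = {
    "worst": {"units": 8_000, "price": 45.0, "unit_cost": 30.0},
    "base": {"units": 10_000, "price": 50.0, "unit_cost": 28.0},
    "best": {"units": 12_000, "price": 55.0, "unit_cost": 26.0},
}
FIXED_COSTS = 150_000.0

def profit(units: int, price: float, unit_cost: float) -> float:
    """Simple profit model: margin per unit times volume, minus fixed costs."""
    return units * (price - unit_cost) - FIXED_COSTS

# Run the same model once per scenario.
results = {name: profit(**inputs) for name, inputs in SCENARIOS.items()}
for name, value in results.items():
    print(f"{name:>5}: {value:>12,.0f}")
# worst: -30,000   base: 70,000   best: 198,000
```

Keeping the model separate from the scenario inputs makes it easy to add further cases or combine assumptions from different scenarios.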

Scenario Planning

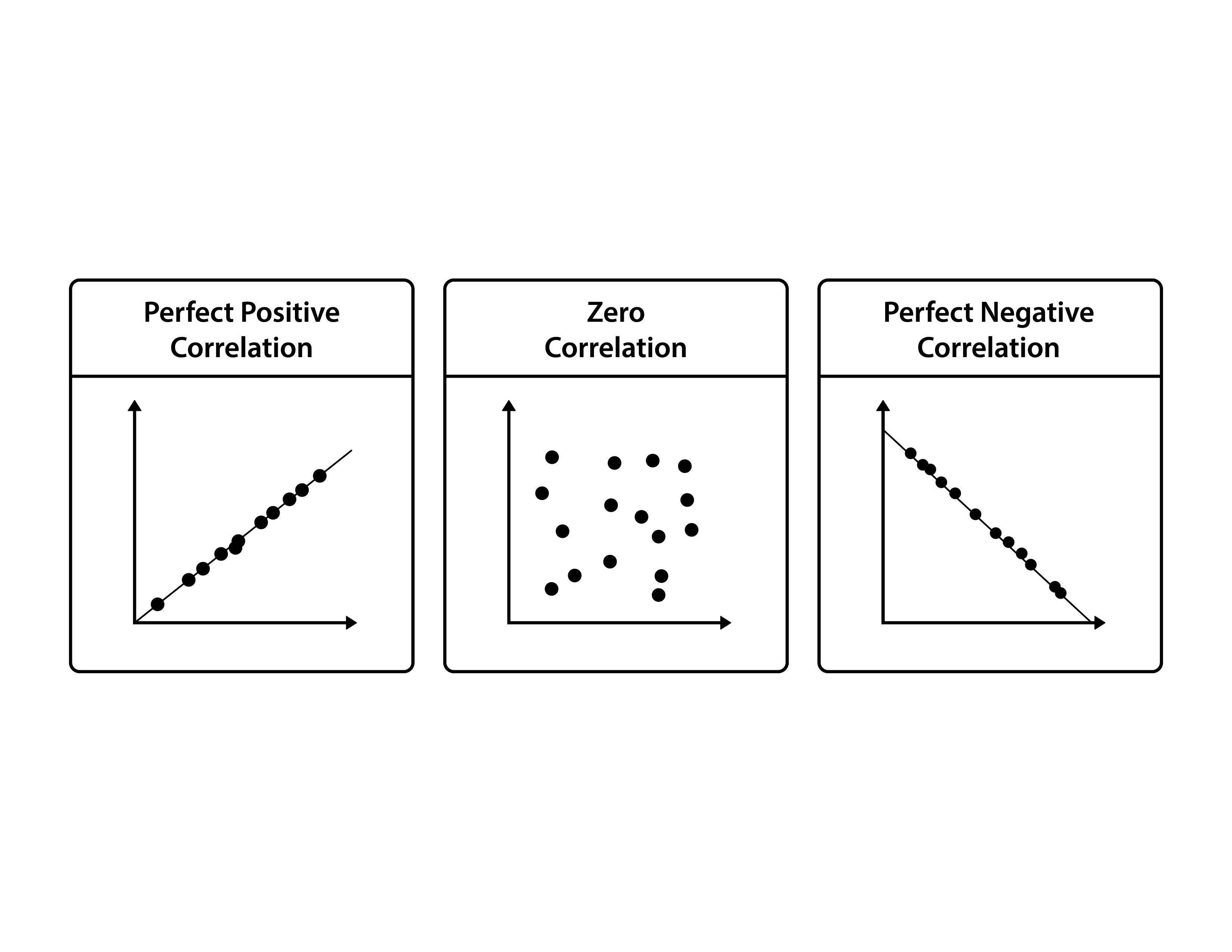

Correlation Analysis

A statistical technique aimed at establishing whether a pair of variables is related.

Correlation establishes a statistical relationship but does not prove causation.

Correlation Coefficient

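Indicates the strength and direction of the relationship between two variables, on a scale from -1 (perfect negative relationship) to +1 (perfect positive relationship), with 0 meaning no linear relationship.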
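A sketch of the most common correlation coefficient, Pearson's r, computed from its definition (covariance divided by the product of the standard deviations). The advertising and sales figures are hypothetical.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient: covariance / (std_x * std_y)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

ad_spend = [1, 2, 3, 4, 5]  # hypothetical
sales = [3, 5, 4, 7, 9]     # hypothetical
print(round(pearson_r(ad_spend, sales), 2))  # 0.92: strong positive relationship
```

A high r only shows that the two variables move together; it does not show that advertising causes the sales.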

Multivariable Correlation Analysis

A statistical method used to understand the relationships between two or more variables, identifying how they change together.

Single Variable Correlation Analysis

A statistical method that measures the strength and direction of the linear relationship between one independent variable and one dependent variable.

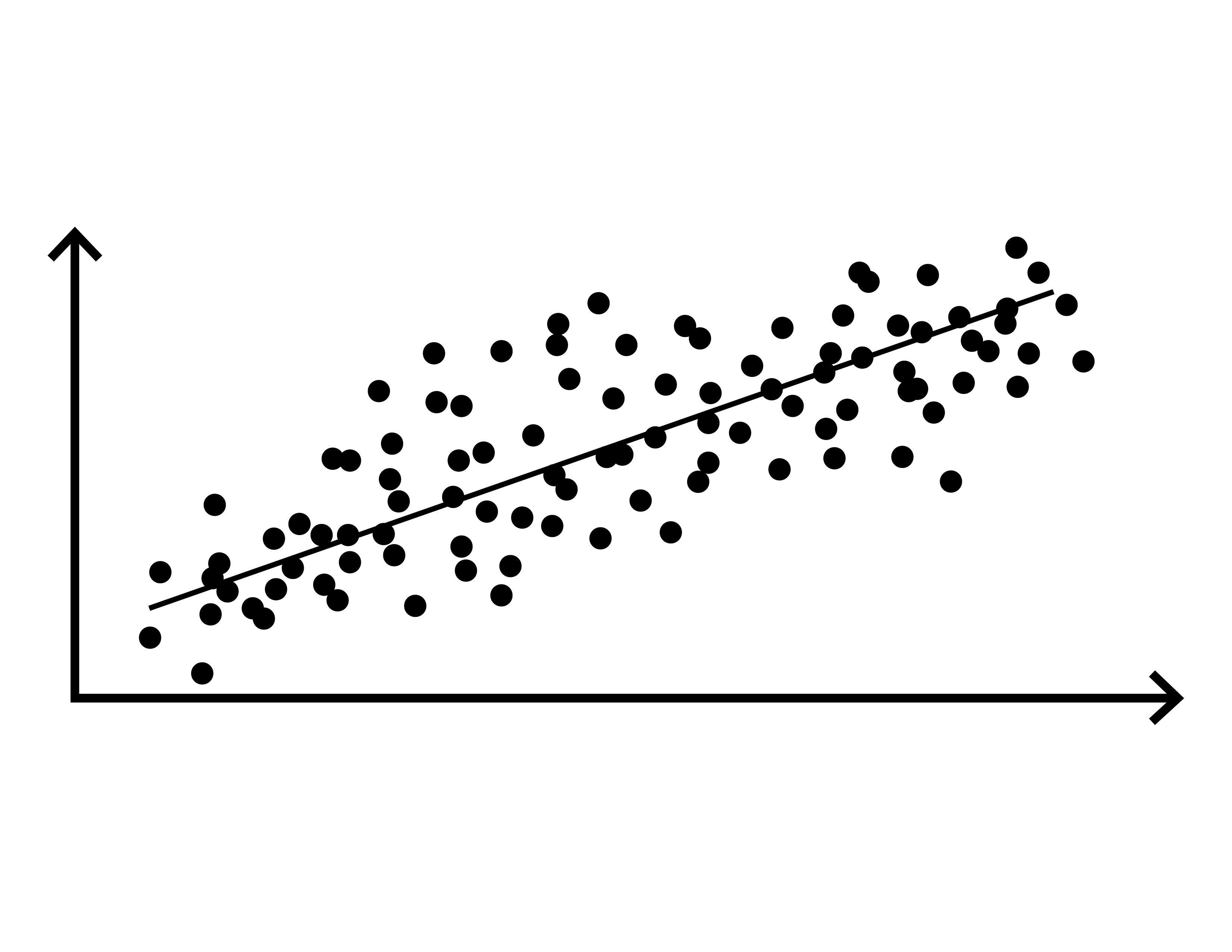

Regression Analysis

A statistical method that helps us determine how well changes in a dependent variable are explained by changes in one or more independent variables.
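A minimal sketch of simple linear regression (one independent variable) fitted by ordinary least squares. R² measures how much of the variation in the dependent variable the fitted line explains. The data are hypothetical.

```python
def fit_simple_regression(xs, ys):
    """Ordinary least squares for y = a + b*x; returns (a, b, r_squared)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    b = sxy / sxx             # slope
    a = mean_y - b * mean_x   # intercept
    ss_res = sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))  # unexplained variation
    ss_tot = sum((y - mean_y) ** 2 for y in ys)                   # total variation
    return a, b, 1 - ss_res / ss_tot

a, b, r2 = fit_simple_regression([1, 2, 3, 4, 5], [3, 5, 4, 7, 9])  # hypothetical data
print(round(a, 2), round(b, 2), round(r2, 3))  # 1.4 1.4 0.845
```

Here about 84.5% of the variation in y is explained by x; with several independent variables the same idea is usually solved with matrix methods.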

Business Analytics

The practice of iterative, methodical exploration of an organization's data, with an emphasis on statistical analysis, used to drive decision-making and improve performance.

Time Series Analysis

Uses the past values of a variable, recorded over time, to predict its future values, and allows us to quantify the impact of management decisions on the future outcome.

Naïve Time Series

A forecasting method that assumes future values will equal the most recent actual value available, often used as a benchmark for more complex forecasting methods.
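A sketch of the naïve forecast and of its use as a benchmark: the mean absolute error (MAE) of one-step naïve forecasts gives a baseline that a more complex method should beat. The sales figures are hypothetical.

```python
def naive_forecast(history, horizon=1):
    """Naive method: every future value equals the last observed value."""
    if not history:
        raise ValueError("history must not be empty")
    return [history[-1]] * horizon

def mean_absolute_error(actual, predicted):
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

sales = [120, 132, 128, 140, 151]  # hypothetical monthly sales
print(naive_forecast(sales, horizon=3))  # [151, 151, 151]

# Benchmark: predict each month with the previous month's value and measure the error.
one_step = [sales[i - 1] for i in range(1, len(sales))]
print(mean_absolute_error(sales[1:], one_step))  # 9.75
```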

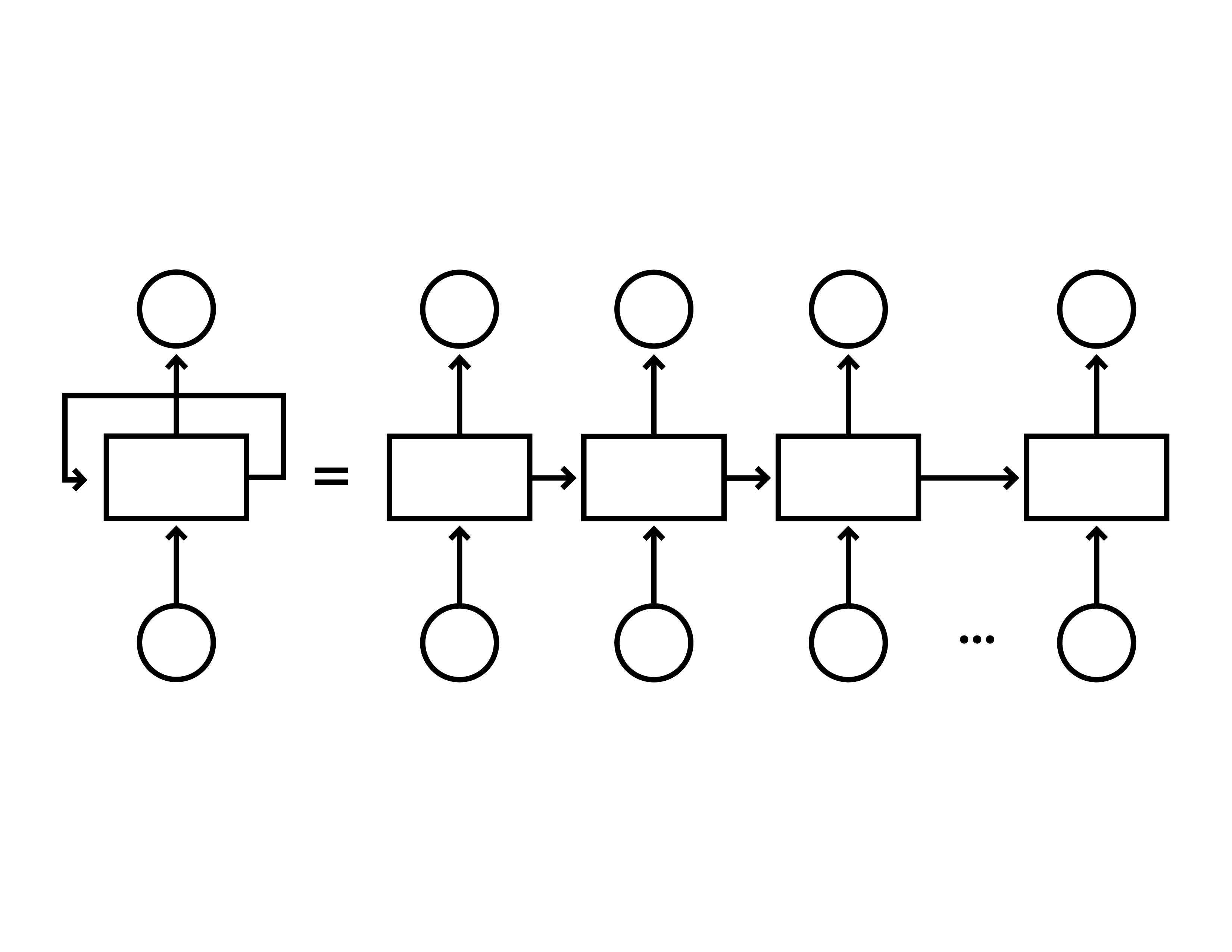

Autoregressive Models

Statistical models for analyzing and forecasting time series data, which use the dependency between an observation and a number of lagged observations.
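A minimal sketch of a first-order autoregressive model, AR(1), where each value depends on one lagged value: y[t] = c + phi * y[t-1]. It is fitted by least squares on (lagged, current) pairs; the series is hypothetical and was generated with c = 2 and phi = 0.5 so the fit can be checked.

```python
def fit_ar1(series):
    """Fit y[t] = c + phi * y[t-1] by ordinary least squares on (lagged, current) pairs."""
    lagged, current = series[:-1], series[1:]
    n = len(lagged)
    mean_lag, mean_cur = sum(lagged) / n, sum(current) / n
    sxy = sum((lag - mean_lag) * (cur - mean_cur) for lag, cur in zip(lagged, current))
    sxx = sum((lag - mean_lag) ** 2 for lag in lagged)
    phi = sxy / sxx
    c = mean_cur - phi * mean_lag
    return c, phi

def forecast_ar1(series, c, phi, steps):
    """Iterate the fitted equation, feeding each forecast back in as the next lagged value."""
    preds, last = [], series[-1]
    for _ in range(steps):
        last = c + phi * last
        preds.append(last)
    return preds

# Hypothetical series generated exactly by y[t] = 2 + 0.5 * y[t-1], starting at 10.
series = [10.0, 7.0, 5.5, 4.75, 4.375, 4.1875]
c, phi = fit_ar1(series)
print(round(c, 3), round(phi, 3))  # 2.0 0.5
print(forecast_ar1(series, c, phi, steps=2))
```

Real data are noisy, so in practice the number of lags is chosen from the data and a statistics library is normally used.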

Moving Average Models

Forecasting models that smooth out short-term fluctuations in time series data by calculating the average of different subsets of the total dataset over time.
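A sketch of the simple (rolling) moving average described above: each value is the average of a fixed-size window of consecutive observations, and the latest average can serve as the next-period forecast. Note that the "MA(q)" term in ARIMA models is a different construct based on past forecast errors. The demand figures are hypothetical.

```python
def moving_average(series, window):
    """Average of each consecutive `window`-sized subset of the series."""
    if not 1 <= window <= len(series):
        raise ValueError("window must be between 1 and len(series)")
    return [sum(series[i - window:i]) / window for i in range(window, len(series) + 1)]

demand = [10, 14, 9, 15, 12, 16]  # hypothetical weekly demand
smoothed = moving_average(demand, 3)
print([round(v, 2) for v in smoothed])  # [11.0, 12.67, 12.0, 14.33]
print(round(smoothed[-1], 2))  # 14.33: forecast for next week
```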

Time Series Data

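A sequence of data points recorded at successive points in time, typically at regular intervals. Time series data are usually plotted on a line chart.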

Cohort Analysis

A behavioral analytics technique that breaks the data into related groups before analysis, often used to study the behavior of customers over time.
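A minimal sketch of a retention-style cohort analysis: customers are grouped by signup month (the cohort), and for each cohort we measure the share still active N months later. The event log and month numbering are hypothetical.

```python
from collections import defaultdict

# Hypothetical activity log: (customer_id, signup_month, active_month), months as integers.
events = [
    ("a", 1, 1), ("a", 1, 2), ("a", 1, 3),
    ("b", 1, 1), ("b", 1, 3),
    ("c", 2, 2), ("c", 2, 3),
    ("d", 2, 2),
]

def retention_table(events):
    """Group customers into signup cohorts and compute the share active N months after signup."""
    cohort_members = defaultdict(set)
    active = defaultdict(set)
    for customer, signup, month in events:
        cohort_members[signup].add(customer)
        active[(signup, month - signup)].add(customer)
    table = {}
    for (signup, offset), customers in sorted(active.items()):
        table.setdefault(signup, {})[offset] = len(customers) / len(cohort_members[signup])
    return table

print(retention_table(events))
# {1: {0: 1.0, 1: 0.5, 2: 1.0}, 2: {0: 1.0, 1: 0.5}}
```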

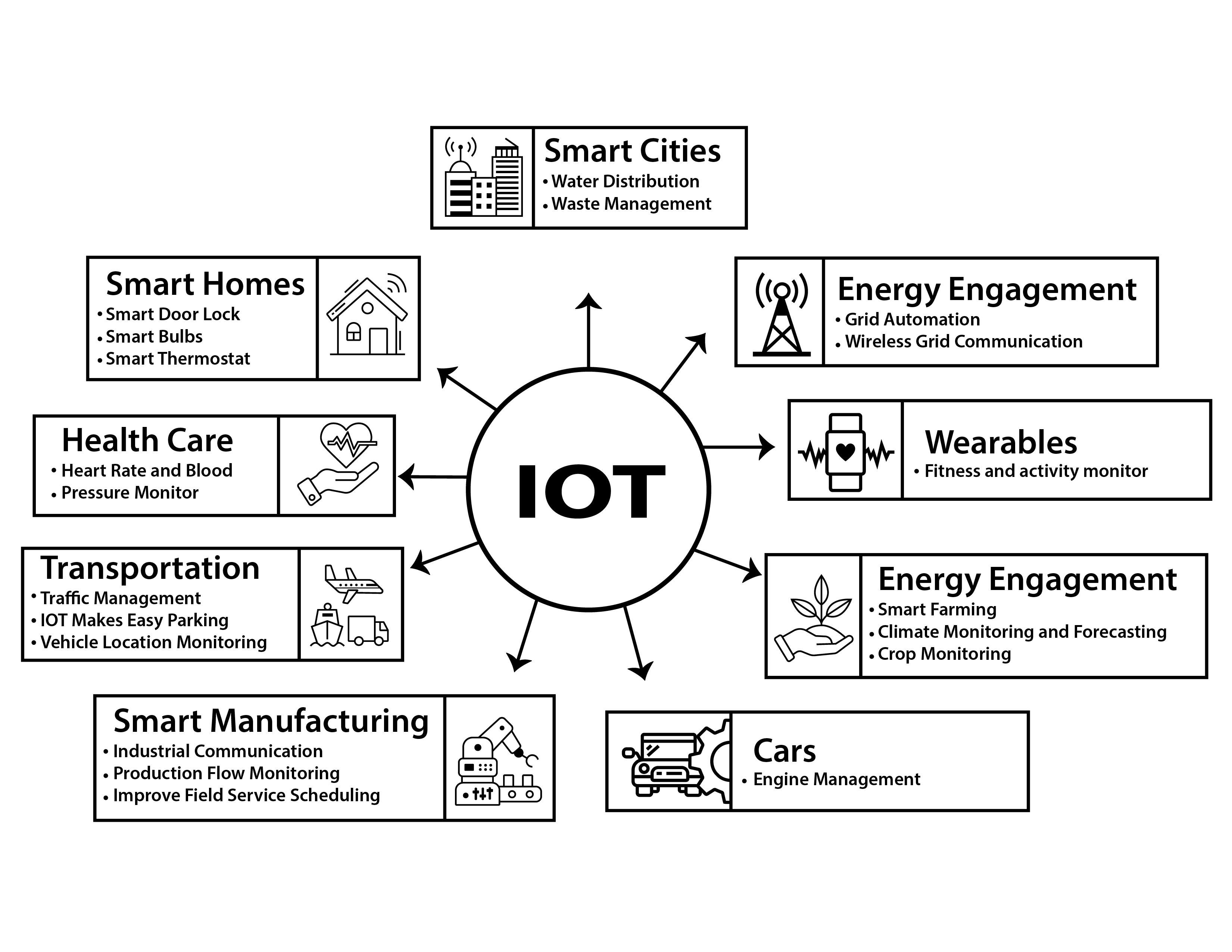

Internet of Things (IoT)

A network of physical devices connected to the internet that collect and exchange data.

IoT devices

Devices connected to the internet, including everyday objects, that can collect and exchange data, enabling more direct integration of the physical world into computer-based systems.

NLP (Natural Language Processing)

A branch of artificial intelligence that helps computers understand, interpret, and manipulate human language to perform tasks like translation, sentiment analysis, and topic extraction.

Image Recognition

AI capability to identify and interpret images or visual data.

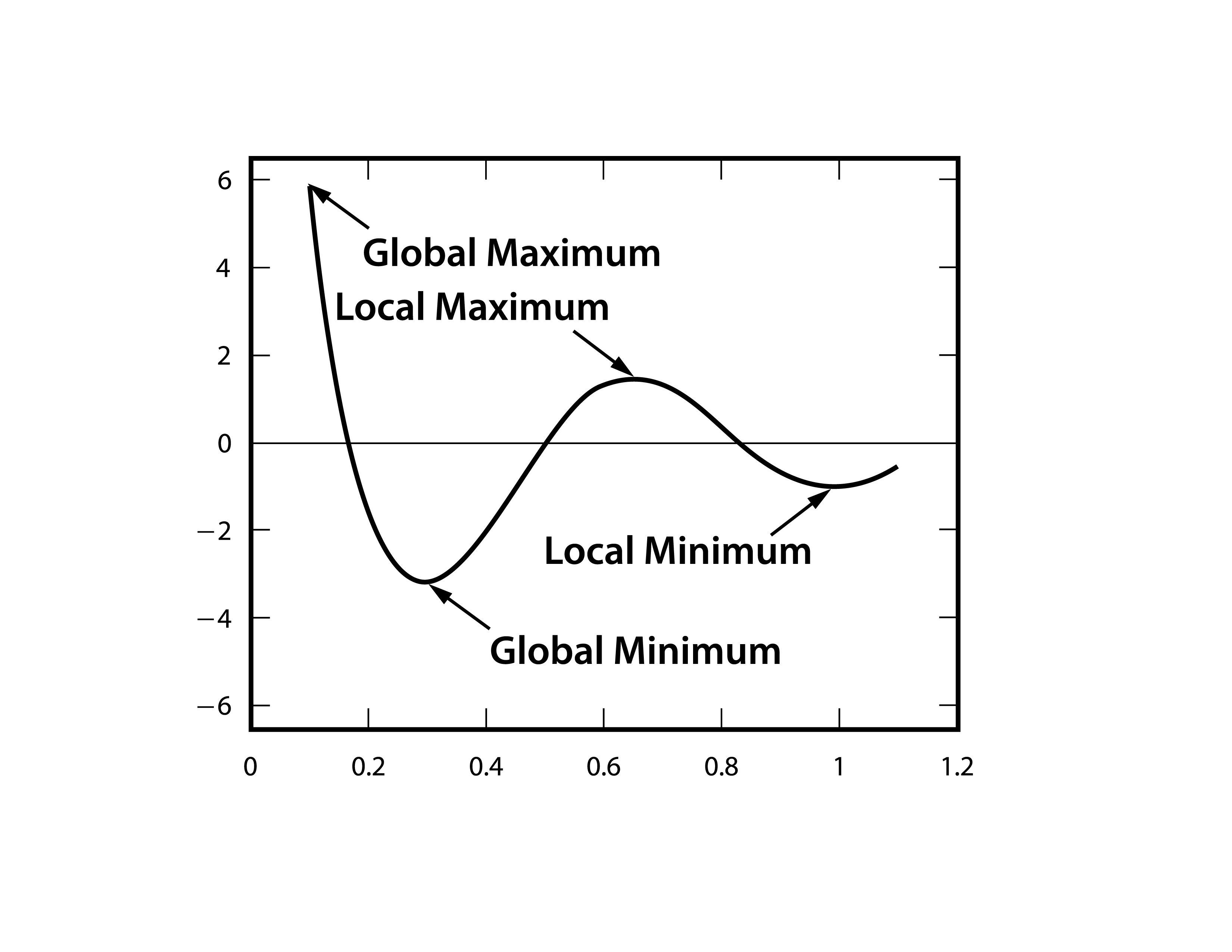

Linear Programming (Optimization)

A mathematical technique used to find the optimal (maximum or minimum) value of a linear objective, such as profit or cost, subject to a set of known linear constraints.
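A brute-force sketch for a tiny two-variable problem, relying on the fact that the optimum of a bounded linear program lies at a vertex of the feasible region. The production-planning numbers are hypothetical; real problems are solved with simplex or interior-point solvers (for example SciPy's `scipy.optimize.linprog`), not by vertex enumeration.

```python
from itertools import combinations

# Hypothetical constraints written as a*x + b*y <= c (non-negativity as -x <= 0, -y <= 0).
CONSTRAINTS = [
    (1, 0, 4),   # x <= 4
    (0, 2, 12),  # 2y <= 12
    (3, 2, 18),  # 3x + 2y <= 18
    (-1, 0, 0),  # x >= 0
    (0, -1, 0),  # y >= 0
]

def objective(x, y):
    return 3 * x + 5 * y  # hypothetical profit to maximize

def solve_2d_lp(constraints, objective, eps=1e-9):
    """Check every intersection of two constraint lines; keep the best feasible one."""
    best = None
    for (a1, b1, c1), (a2, b2, c2) in combinations(constraints, 2):
        det = a1 * b2 - a2 * b1
        if abs(det) < eps:
            continue  # parallel lines: no unique intersection
        x = (c1 * b2 - c2 * b1) / det  # Cramer's rule
        y = (a1 * c2 - a2 * c1) / det
        if all(a * x + b * y <= c + eps for a, b, c in constraints):
            value = objective(x, y)
            if best is None or value > best[0]:
                best = (value, x, y)
    return best

print(solve_2d_lp(CONSTRAINTS, objective))  # (36.0, 2.0, 6.0)
```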

Artificial Neural Networks

Computer programs that are modelled on the human brain and the way we as humans learn.

Artificial Neurons

Computational models inspired by the human brain's neurons, used in neural networks to process and transmit information in artificial intelligence systems.
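A minimal sketch of a single artificial neuron: it multiplies each input by a weight, adds a bias, and passes the sum through an activation function (a sigmoid is assumed here; other activations are common). The weights and inputs are hypothetical.

```python
import math

def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of inputs plus bias, then an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

print(neuron([1.0, 0.5], [0.4, -0.2], bias=0.1))  # ≈ 0.599 (sigmoid of 0.4)
```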

Neural Network Layers

Artificial neurons are arranged in a series of layers:

Input layer (receives information)

Hidden layers (transform information)

Output layer (produces the final result)
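A sketch of how information flows through these layers in a forward pass. The input layer is simply the list of input values; each later layer is a set of sigmoid neurons (an assumed activation). The 2-3-1 layer sizes and all weights are hypothetical.

```python
import math

def dense_layer(inputs, weights, biases):
    """Each row of `weights` holds one neuron's weights; returns the layer's sigmoid outputs."""
    return [
        1.0 / (1.0 + math.exp(-(sum(x * w for x, w in zip(inputs, row)) + b)))
        for row, b in zip(weights, biases)
    ]

def forward(inputs, hidden_w, hidden_b, out_w, out_b):
    hidden = dense_layer(inputs, hidden_w, hidden_b)  # hidden layer transforms the inputs
    return dense_layer(hidden, out_w, out_b)          # output layer produces the result

# Hypothetical weights: 2 inputs -> 3 hidden neurons -> 1 output neuron.
output = forward(
    [0.5, -1.0],
    hidden_w=[[0.2, 0.8], [-0.5, 0.1], [0.3, -0.3]],
    hidden_b=[0.0, 0.1, -0.1],
    out_w=[[1.0, -1.0, 0.5]],
    out_b=[0.2],
)
print(output)  # a single value between 0 and 1
```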

Deep Learning

A subset of machine learning in which ANNs, algorithms inspired by the human brain, learn from large amounts of data through many layers of artificial neurons.

Deep learning allows machines to solve complex problems even when the dataset is very diverse, unstructured, or interconnected, by performing a task repeatedly and tweaking it a little each time to improve the outcome.

Backpropagation

A method used in artificial neural networks to calculate the gradient of the loss function with respect to the weights, used for training the network.
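A minimal sketch of backpropagation for a tiny network (1 input, 2 sigmoid hidden neurons, 1 linear output) with a squared-error loss. The chain rule is applied from the output back towards the input, and the gradients then drive gradient-descent updates. The architecture, starting weights, training example, and learning rate are all hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(params, x):
    """1 input -> 2 sigmoid hidden neurons -> 1 linear output; also returns hidden values."""
    h = [sigmoid(params["w1"][j] * x + params["b1"][j]) for j in range(2)]
    y_hat = sum(params["w2"][j] * h[j] for j in range(2)) + params["b2"]
    return h, y_hat

def loss(params, x, y):
    """Squared-error loss for one training example."""
    _, y_hat = forward(params, x)
    return 0.5 * (y_hat - y) ** 2

def backprop(params, x, y):
    """Gradient of the loss w.r.t. every weight, via the chain rule from output back to input."""
    h, y_hat = forward(params, x)
    d_out = y_hat - y  # dL/dy_hat
    grads = {"b2": d_out, "w2": [d_out * h[j] for j in range(2)]}
    # Error reaching each hidden neuron: via its outgoing weight, times sigmoid'(z) = h * (1 - h).
    d_hidden = [d_out * params["w2"][j] * h[j] * (1 - h[j]) for j in range(2)]
    grads["w1"] = [d_hidden[j] * x for j in range(2)]
    grads["b1"] = d_hidden
    return grads

def train_step(params, x, y, lr=0.1):
    """One gradient-descent update using the backpropagated gradients."""
    grads = backprop(params, x, y)
    params["b2"] -= lr * grads["b2"]
    for key in ("w1", "b1", "w2"):
        params[key] = [p - lr * g for p, g in zip(params[key], grads[key])]

params = {"w1": [0.5, -0.3], "b1": [0.1, 0.2], "w2": [0.4, 0.7], "b2": 0.0}  # hypothetical start
x, y = 1.0, 2.0
before = loss(params, x, y)
for _ in range(50):
    train_step(params, x, y)
print(before, loss(params, x, y))  # the second number should be much smaller
```

A common way to check a backpropagation implementation is to compare its gradients with finite-difference estimates of the loss.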

Reinforcement Learning

A form of machine learning where the optimal behaviour or action is reinforced by a positive reward.
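A sketch of reinforcement learning in its simplest setting, a multi-armed bandit: an agent repeatedly picks one of several actions, receives a reward, and gradually favours the action with the highest average reward. It uses the epsilon-greedy strategy (explore at random with probability epsilon, otherwise exploit the best estimate). The reward probabilities are hypothetical.

```python
import random

def epsilon_greedy_bandit(true_probs, steps=5000, epsilon=0.1, seed=0):
    """Learn which action pays best purely from rewards, exploring with probability epsilon."""
    rng = random.Random(seed)
    n_arms = len(true_probs)
    counts = [0] * n_arms
    values = [0.0] * n_arms  # running average reward per action
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)                         # explore
        else:
            arm = max(range(n_arms), key=lambda a: values[a])  # exploit best estimate
        reward = 1.0 if rng.random() < true_probs[arm] else 0.0  # hypothetical environment
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]      # incremental mean
    return values, counts

values, counts = epsilon_greedy_bandit([0.2, 0.5, 0.8])
print(counts)  # most pulls should go to the action with the highest reward rate
```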

RNNs

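Recurrent neural networks: neural networks in which a layer's output is fed back in as input at the next step, giving them a form of memory that suits sequential data such as text, speech, or time series.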
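A minimal sketch of the recurrence in a single scalar RNN cell: the hidden state `h` from the previous step is combined with the current input, so earlier inputs keep influencing later outputs. The weights are hypothetical and `tanh` is an assumed (though common) activation.

```python
import math

def rnn_forward(sequence, w_x, w_h, b):
    """Scalar RNN cell: the hidden state is fed back in at every step."""
    h = 0.0
    states = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)  # depends on the input AND the previous state
        states.append(h)
    return states

states = rnn_forward([1.0, 0.0, 0.0], w_x=0.8, w_h=0.5, b=0.0)
print(states)  # later states are non-zero even though the later inputs are 0
```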

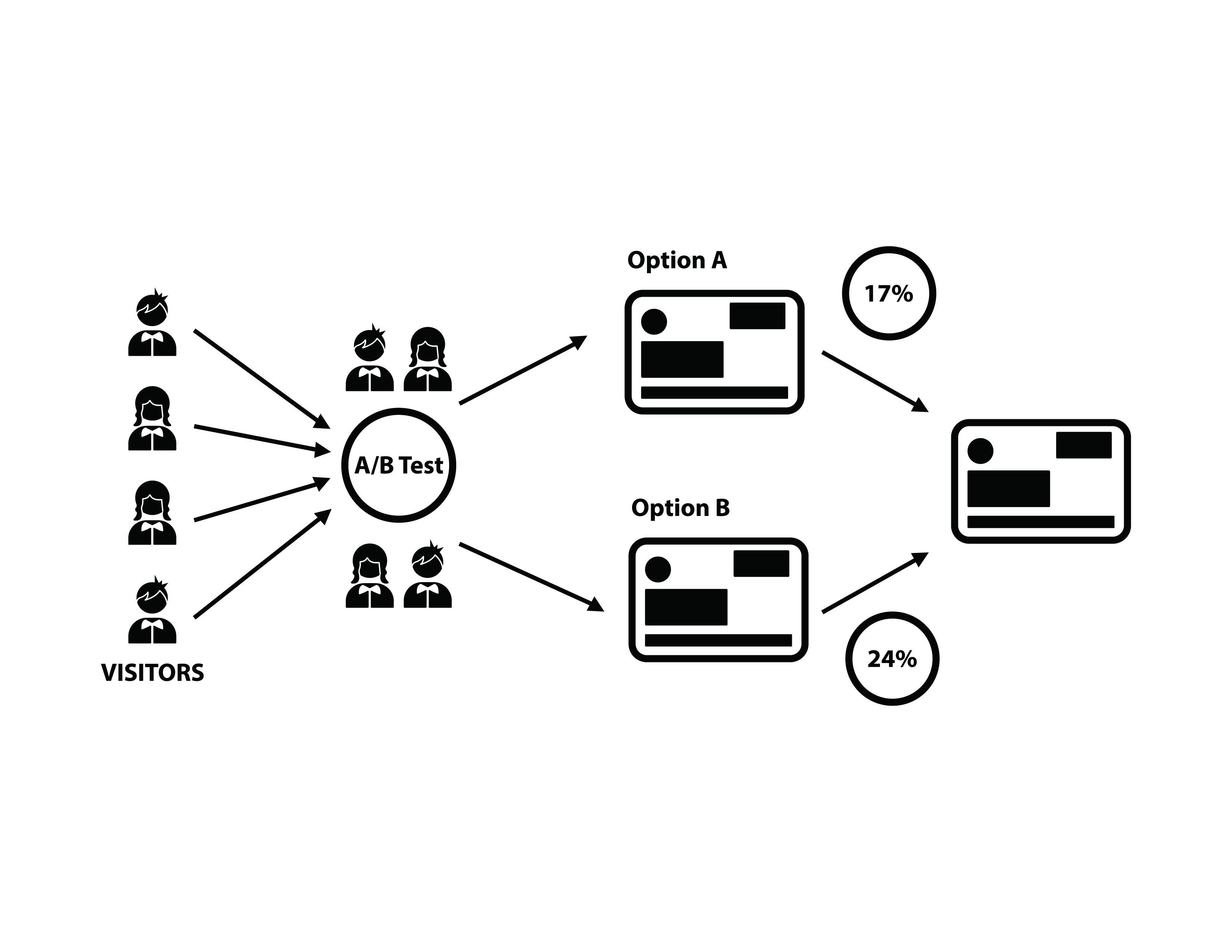

Experimental Design

Applying a change in one part of the organization and comparing the results against another part where the change has not been applied (the control group).

A/B Testing

A statistical method of comparing two versions of a webpage or app against each other to determine which one performs better on a given conversion goal.
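A sketch of one common way to evaluate an A/B test on conversion rates, the two-proportion z-test. The visitor and conversion counts are hypothetical; the standard normal CDF is computed with `math.erf`.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # conversion rate if A and B were the same
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF: Phi(z) = 0.5 * (1 + erf(z / sqrt(2))).
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return z, p_value

# Hypothetical test: version A converts 200 of 2,000 visitors, version B 260 of 2,000.
z, p = two_proportion_z_test(conv_a=200, n_a=2000, conv_b=260, n_b=2000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A p-value below the chosen significance level (often 0.05) suggests the difference is unlikely to be due to chance alone.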

Maximization of a Function

The process of finding the highest point or maximum of a function under given constraints, often used in optimization problems to determine the best solution.
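A sketch of one simple numerical method, golden-section search, which finds the maximum of a single-peaked (unimodal) function on an interval by repeatedly shrinking that interval. The interval bounds act as a simple constraint. The profit curve is hypothetical.

```python
import math

def golden_section_max(f, lo, hi, tol=1e-6):
    """Find the maximum of a unimodal function f on [lo, hi] by shrinking the bracket."""
    inv_phi = (math.sqrt(5) - 1) / 2  # about 0.618
    a, b = lo, hi
    c, d = b - inv_phi * (b - a), a + inv_phi * (b - a)
    while b - a > tol:
        if f(c) > f(d):
            b, d = d, c  # maximum lies in [a, d]
            c = b - inv_phi * (b - a)
        else:
            a, c = c, d  # maximum lies in [c, b]
            d = a + inv_phi * (b - a)
    return (a + b) / 2

def profit(q):  # hypothetical profit curve with its peak at q = 10
    return -2 * q**2 + 40 * q - 50

print(round(golden_section_max(profit, 0, 30), 4))  # 10.0
print(round(golden_section_max(profit, 0, 8), 4))   # 8.0: the capacity limit binds
```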

Factor Analysis

A collection of techniques used for data reduction and structure detection when working with big datasets and a large number of variables.

Factors

Elements or variables that are considered in data analysis and modeling to understand their impact on outcomes, used in statistical analyses to identify potential causal relationships.

Streaming Data

Dynamic data that is generated continuously from a variety of sources. Each record needs to preserve its relations to the other data and sequence in time.

Streaming Analytics

Analytics that is brought to the data, generating insights while the data is still in the 'stream' rather than after it has been stored.
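A minimal sketch of streaming analytics: records are processed one at a time as they arrive, and only a small window of recent values is kept, so insights (here a rolling average) are produced without storing the whole stream. The sensor source is hypothetical; in practice it could be a socket, queue, or message broker.

```python
from collections import deque

def rolling_average(stream, window):
    """Consume records one at a time and yield the average of the last `window` values."""
    buffer = deque(maxlen=window)  # only the most recent `window` values are kept
    total = 0.0
    for value in stream:
        if len(buffer) == window:
            total -= buffer[0]  # this value is about to be evicted by append()
        buffer.append(value)
        total += value
        yield total / len(buffer)

def sensor_readings():  # hypothetical source
    yield from [20.0, 22.0, 21.0, 25.0, 30.0]

for avg in rolling_average(sensor_readings(), window=3):
    print(round(avg, 2))  # 20.0, 21.0, 21.0, 22.67, 25.33
```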

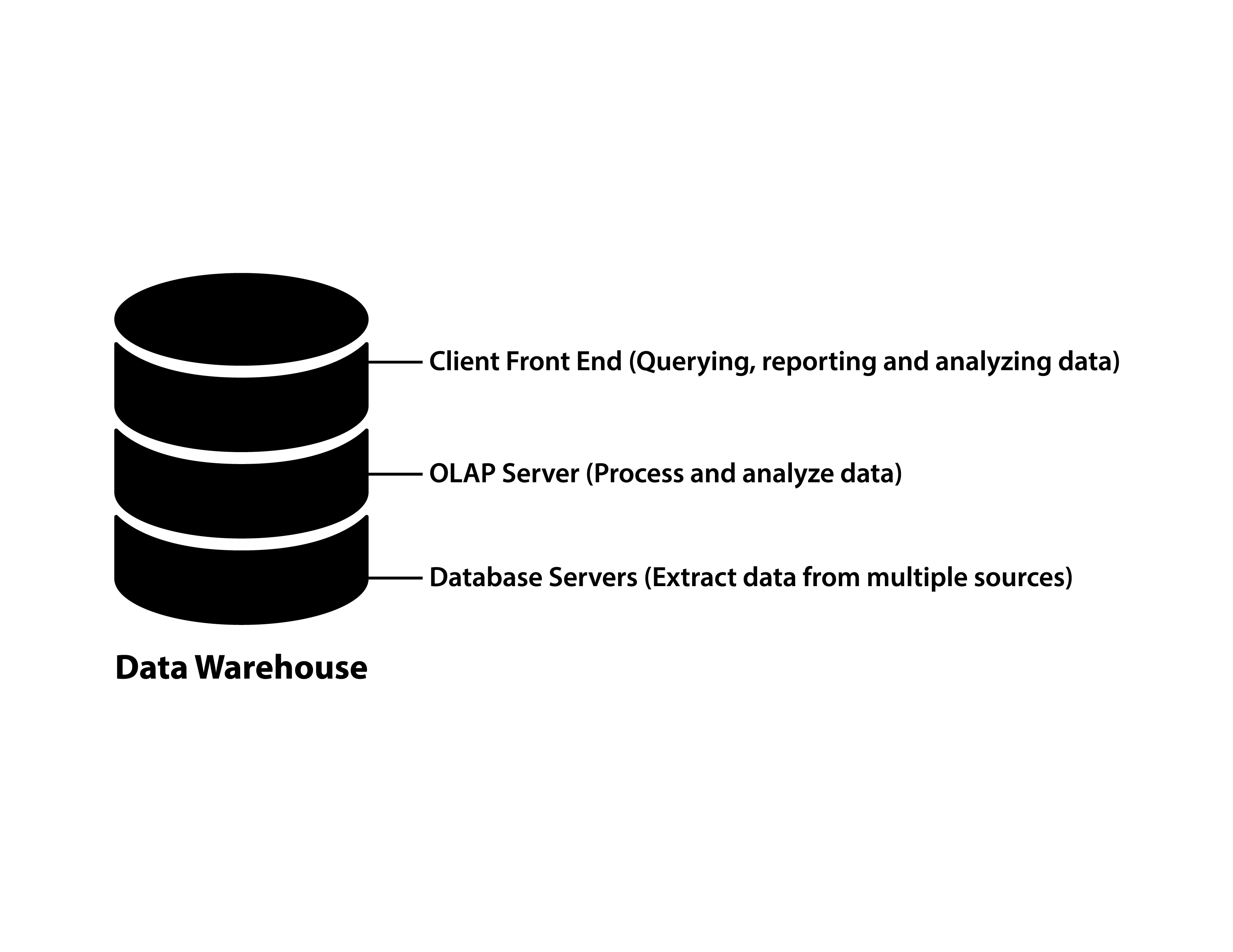

Database

An organized repository that stores structured data in tables of rows and columns.
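A small sketch using Python's built-in `sqlite3` module: a table is defined with fixed columns, rows are inserted, and a query retrieves matching rows. The table name, columns, and rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # throwaway in-memory database for illustration
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL, country TEXT)")
conn.executemany(
    "INSERT INTO customers (name, country) VALUES (?, ?)",
    [("Ada", "UK"), ("Grace", "US"), ("Linus", "FI")],  # hypothetical rows
)
rows = conn.execute(
    "SELECT name FROM customers WHERE country = ? ORDER BY name", ("UK",)
).fetchall()
count = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
conn.close()
print(rows, count)  # [('Ada',)] 3
```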

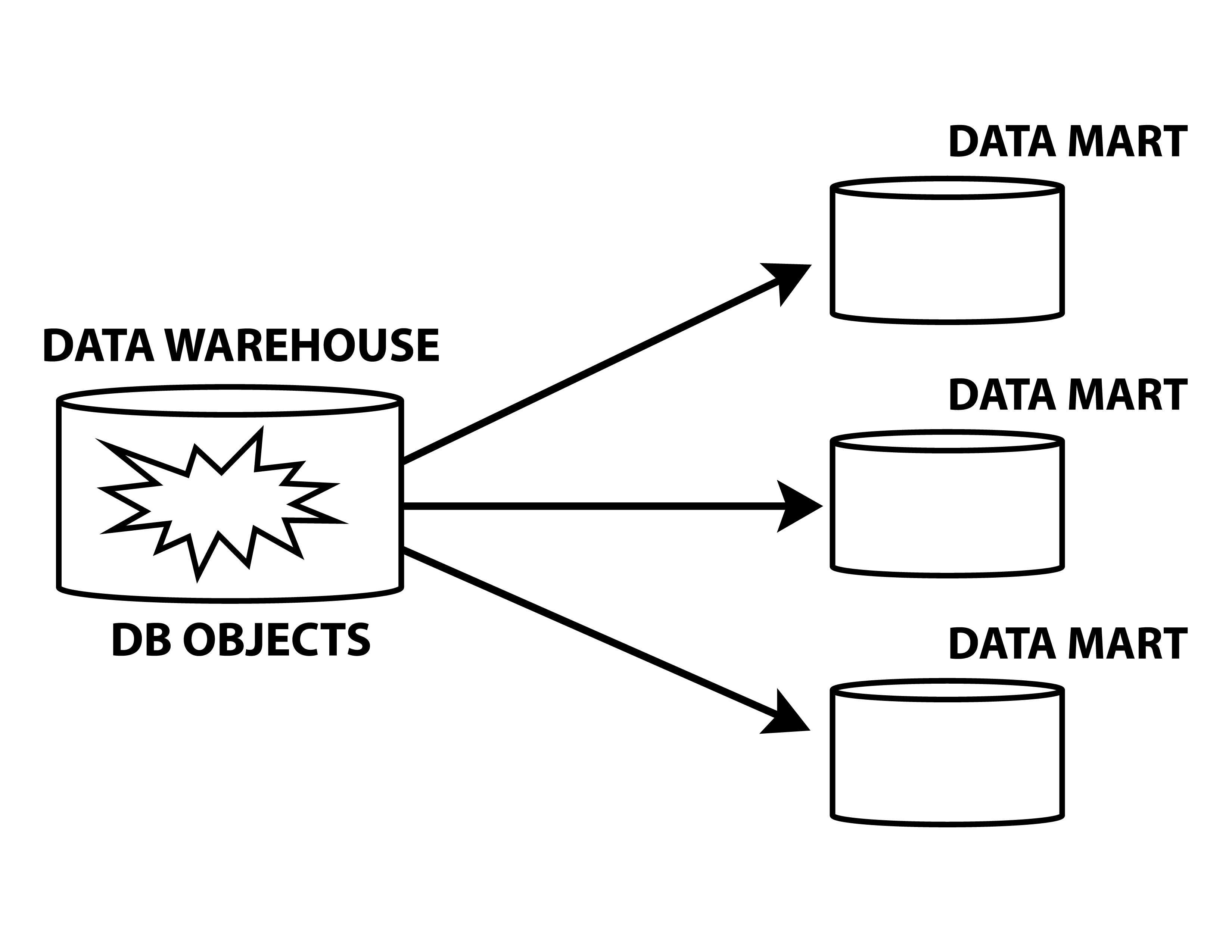

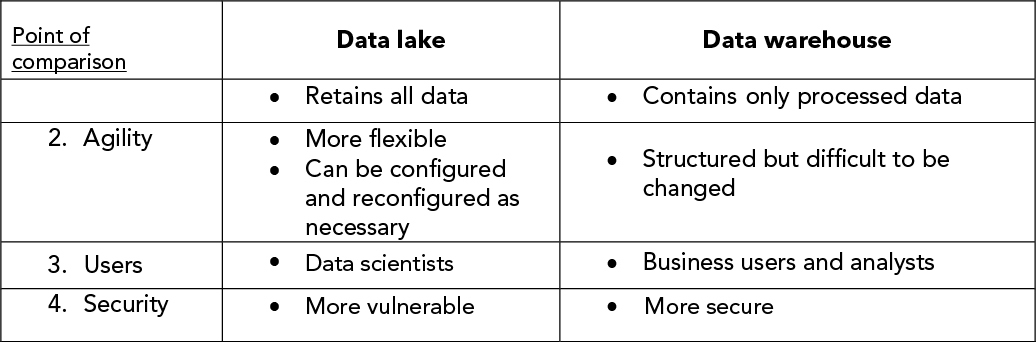

Data Warehouse

A centralized repository for storing, managing, and analyzing large volumes of structured data from various sources within an organization.

Data warehouses are designed to consolidate data into a single, coherent framework.

Data Mart

A repository that contains data designed for a particular part of an organisation (such as a single department) or for a particular purpose.

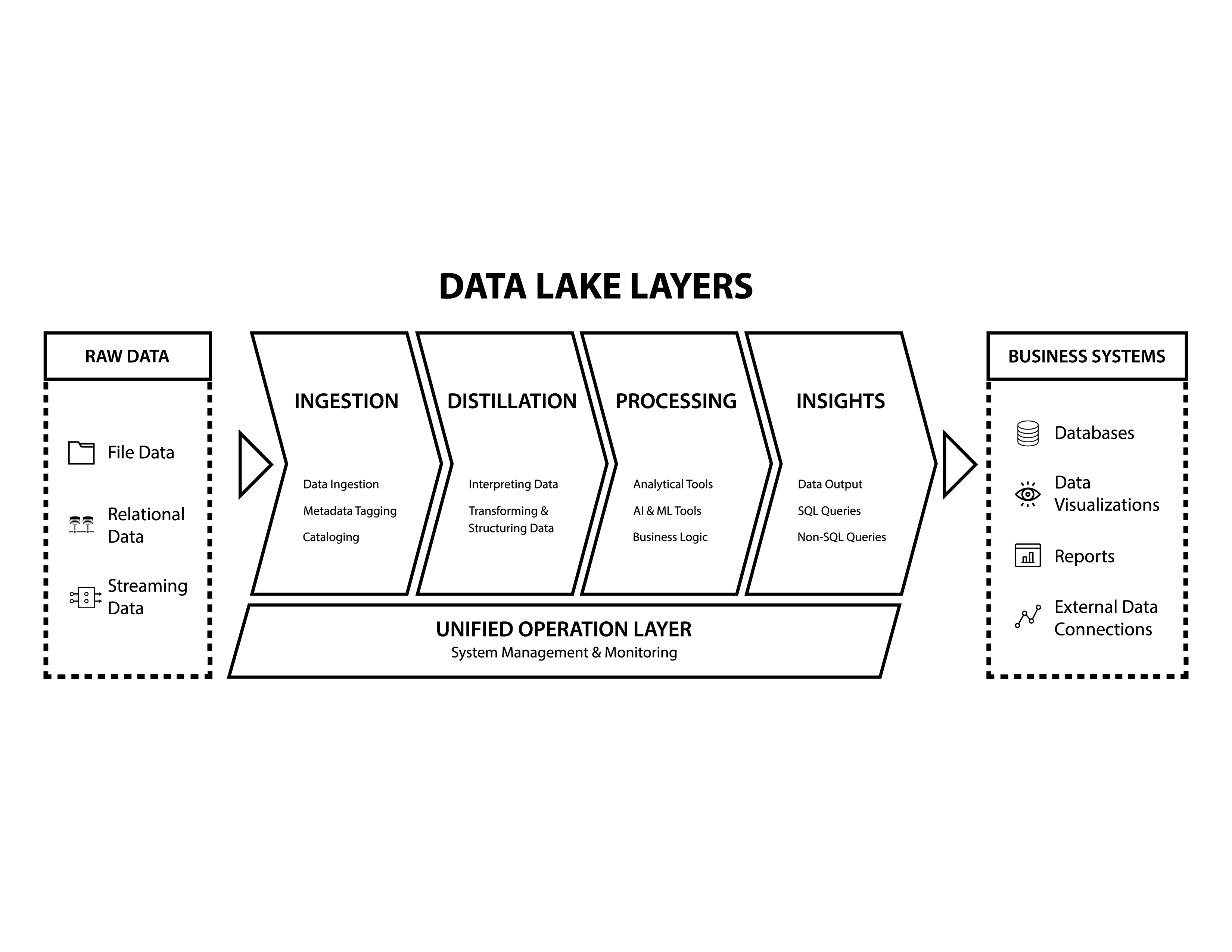

Data Lake

A repository that accepts and retains all data from all data sources and supports all data types; schemas are applied only when the data is ready to be used (schema-on-read).

Data lake vs Data warehouse

Distributed Storage

'Distributed storage' means using cheap, off-the-shelf components to create high-capacity data storage, which is controlled by a program that keeps track of where everything is and finds it upon request.
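A toy sketch of the idea above: data is split into chunks, each chunk is copied to several machines ("nodes"), and a metadata index records where every chunk lives so the data can be found and reassembled on request, even after one node fails. The class `ToyDistributedStore`, its placement rule, and its parameters are hypothetical and far simpler than real systems.

```python
import hashlib

class ToyDistributedStore:
    """Hypothetical sketch: chunk data, replicate chunks across nodes, index their locations."""

    def __init__(self, node_names, chunk_size=4, replicas=2):
        if replicas > len(node_names):
            raise ValueError("cannot have more replicas than nodes")
        self.nodes = {name: {} for name in node_names}  # each node: chunk_id -> bytes
        self.chunk_size = chunk_size
        self.replicas = replicas
        self.index = {}  # filename -> list of (chunk_id, [node names])

    def _pick_nodes(self, chunk_id):
        """Choose `replicas` distinct nodes, starting at a hash-based position."""
        names = sorted(self.nodes)
        start = int(hashlib.sha256(chunk_id.encode()).hexdigest(), 16) % len(names)
        return [names[(start + i) % len(names)] for i in range(self.replicas)]

    def put(self, filename, data: bytes):
        entries = []
        for offset in range(0, len(data), self.chunk_size):
            chunk_id = f"{filename}:{offset}"
            targets = self._pick_nodes(chunk_id)
            for node in targets:
                self.nodes[node][chunk_id] = data[offset:offset + self.chunk_size]
            entries.append((chunk_id, targets))
        self.index[filename] = entries

    def get(self, filename) -> bytes:
        parts = []
        for chunk_id, targets in self.index[filename]:
            for node in targets:  # read from the first replica that still has the chunk
                if chunk_id in self.nodes[node]:
                    parts.append(self.nodes[node][chunk_id])
                    break
            else:
                raise OSError(f"all replicas of {chunk_id} lost")
        return b"".join(parts)

store = ToyDistributedStore(["node-a", "node-b", "node-c"])
store.put("report.txt", b"quarterly revenue up 4%")
store.nodes["node-a"].clear()  # simulate a failed machine
print(store.get("report.txt"))  # b'quarterly revenue up 4%'
```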

Big Data

Refers to extremely large data sets that may be analyzed computationally to reveal patterns, trends, and associations.

Big Data as a Service

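Democratizes 'big data exploration' by delivering big data storage and analytics as a service, typically from the cloud. It enables small businesses to take advantage of big data by giving them access to large datasets and analytics tools without building their own infrastructure.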

Monte Carlo Simulation

A mathematical problem-solving and risk-assessment technique that approximates the probability or the risk of a certain outcome by running many computerized simulations of one or more random variables.
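A minimal Monte Carlo sketch for a risk question: how likely is a project to exceed its budget? It assumes a hypothetical cost model of two independent cost items, each uniformly distributed between 0 and 100, and a budget of 150. This simple case has an exact answer of 0.125 (the corner triangle of the 100 by 100 square), so the estimate can be checked.

```python
import random

def estimate_overrun_probability(budget, trials=100_000, seed=42):
    """Simulate the random total cost many times and count how often it exceeds the budget."""
    rng = random.Random(seed)
    overruns = 0
    for _ in range(trials):
        # Hypothetical model: two independent cost items, each uniform on [0, 100].
        total = rng.uniform(0, 100) + rng.uniform(0, 100)
        if total > budget:
            overruns += 1
    return overruns / trials

print(estimate_overrun_probability(budget=150))  # close to the exact answer 0.125
```

The accuracy improves with the number of trials (the error shrinks roughly in proportion to 1 / sqrt(trials)), and the same loop works for models too complex to solve exactly.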