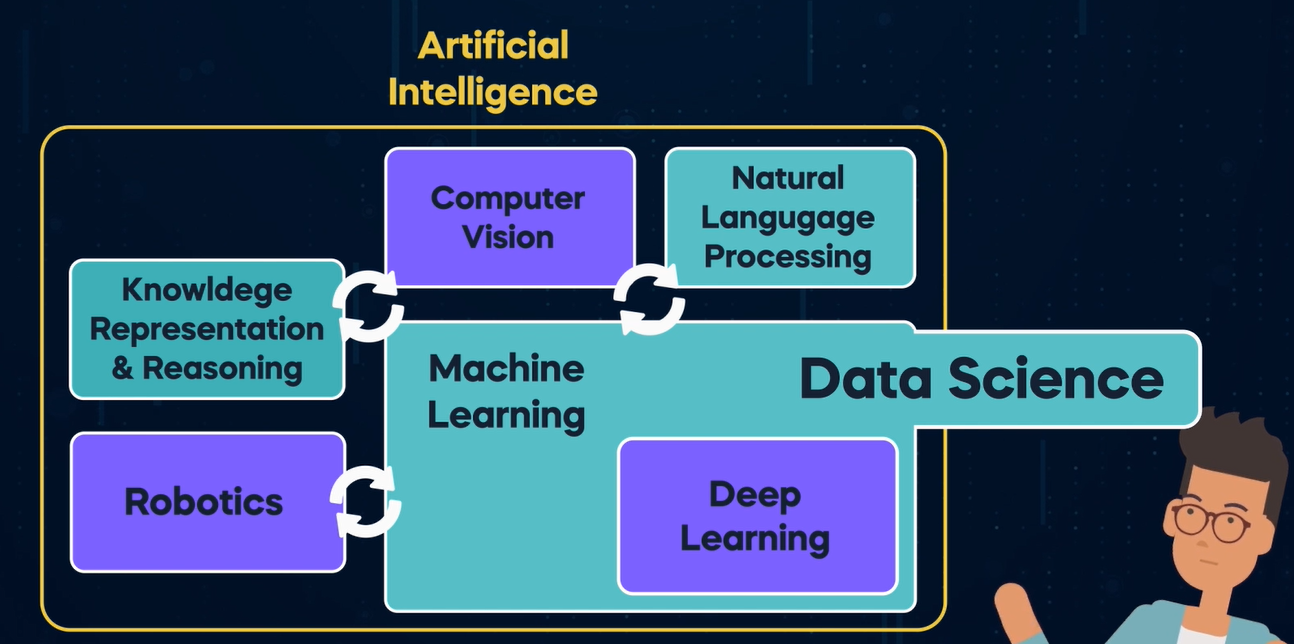

Explore the Flashcards:

Artificial Intelligence (AI)

A field of study inspired by human intelligence that aims to create machines capable of acquiring and applying knowledge and skills.

Machine Learning (ML)

A subfield of AI that uses data to train models and predict outcomes by identifying complex patterns.

Algorithm

A sequence of instructions designed to solve a specific problem, such as predicting user preferences.

Data Science

An interdisciplinary field combining AI, ML, statistics, and visualization to extract actionable insights from data.

Statistical Inference

A method for drawing conclusions about a population from sample data, often without relying on machine learning.

Narrow AI

AI designed for specific tasks, such as movie recommendations or voice recognition.

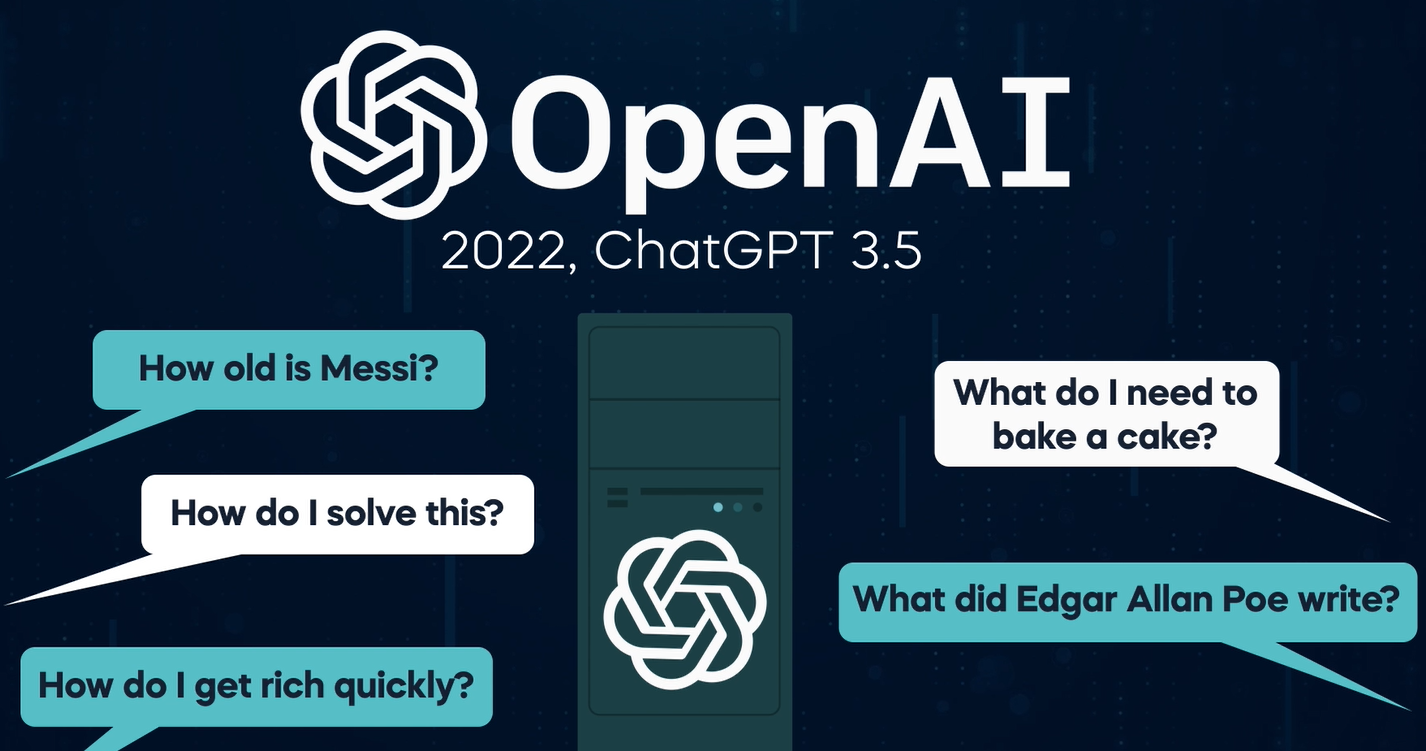

Semi-Strong AI

AI capable of handling diverse tasks and generating human-like responses, such as ChatGPT.

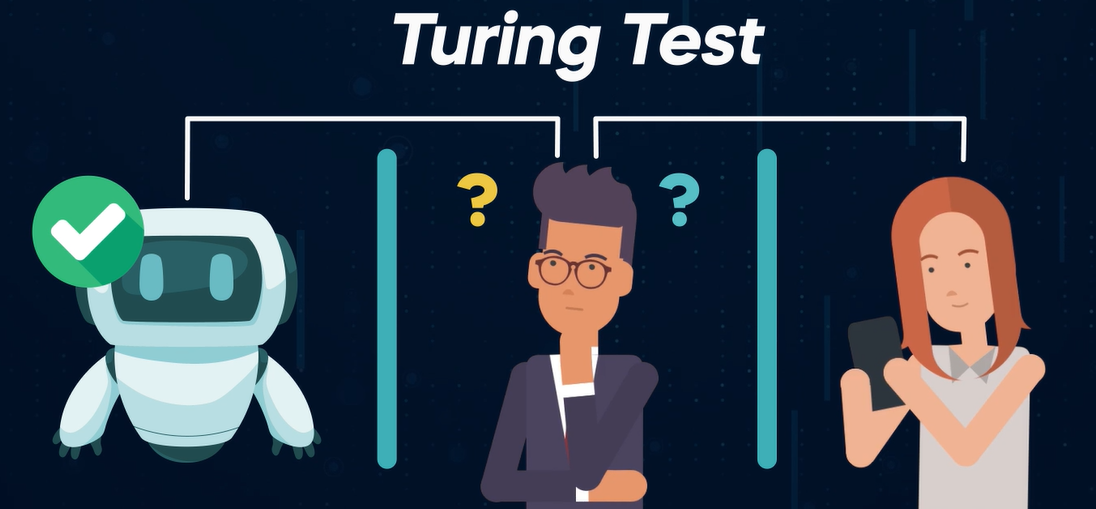

Turing Test

A test proposed by Alan Turing to determine if a machine's responses are indistinguishable from a human’s.

Artificial General Intelligence (AGI)

AI with the ability to match or outperform humans across a wide range of tasks and to create new knowledge or discoveries.

Structured Data

Data organized into rows and columns, such as in spreadsheets or databases, making it easy to analyze.

Unstructured Data

Data without a defined structure, like text, images, videos, and audio, which does not fit neatly into rows and columns.

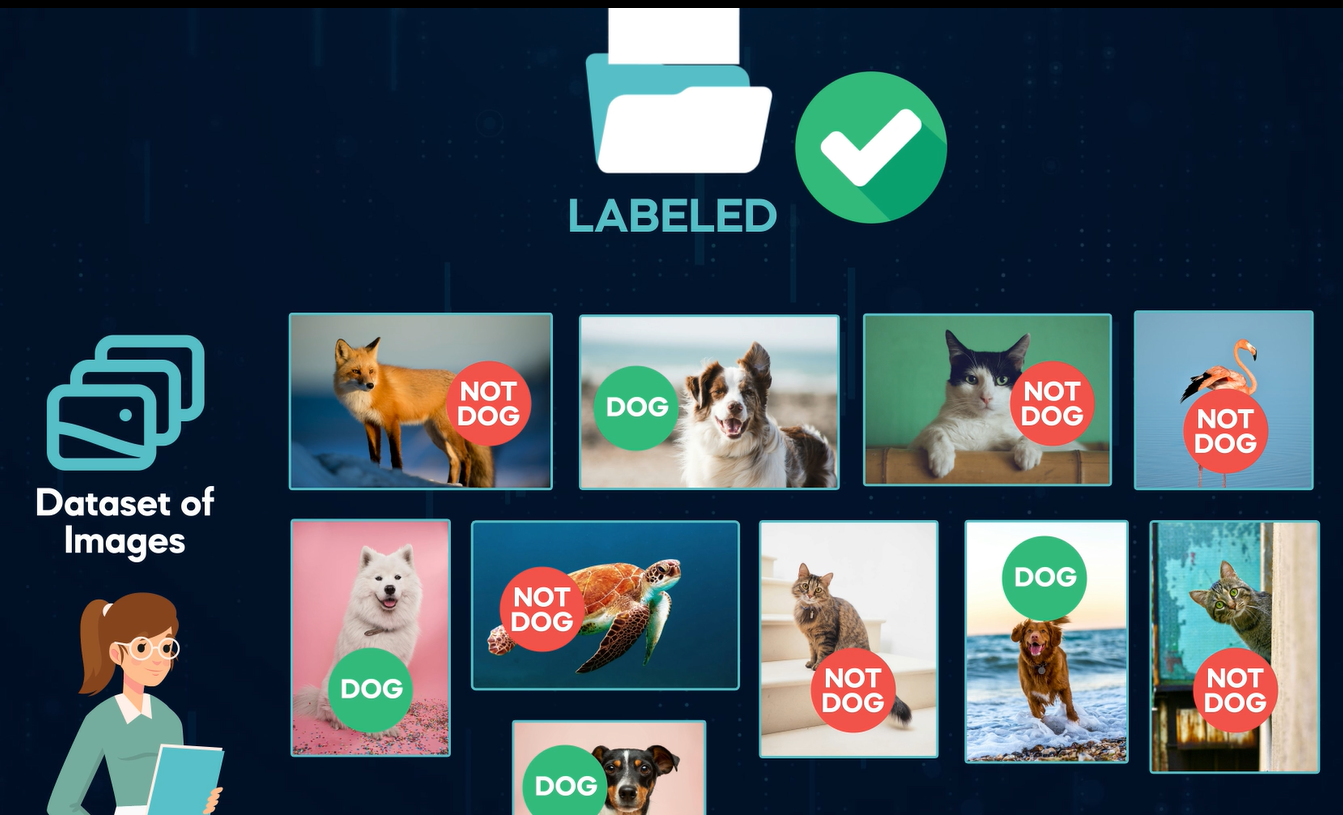

Labeled Data

Data that has been tagged or categorized, often manually, such as labeling photos as 'dog' or 'not a dog.'

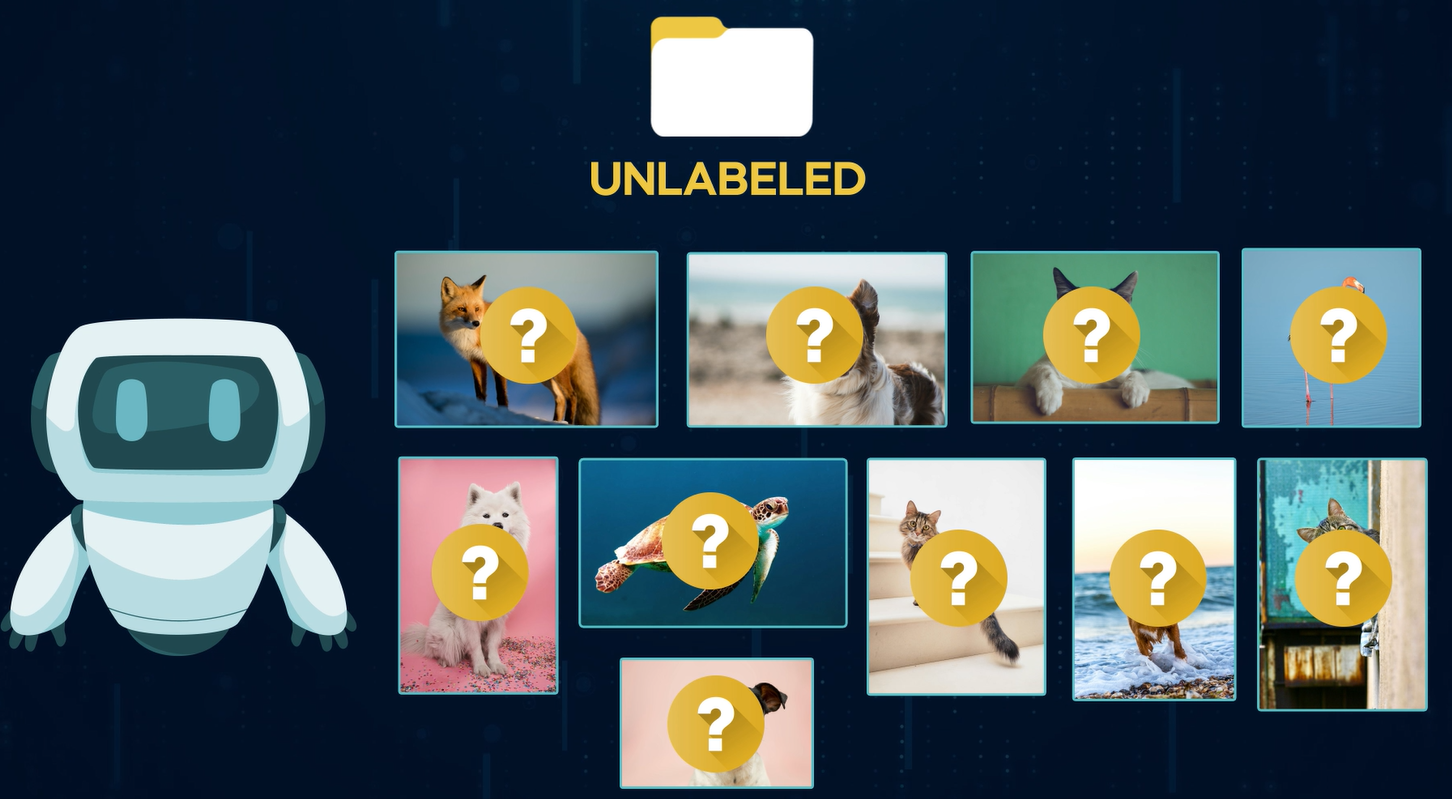

Unlabeled Data

Data without predefined categories or tags, allowing AI models to learn patterns independently.

Manual Labeling

The process of tagging data (e.g., classifying YouTube comments as positive, negative, or neutral) to create labeled datasets.

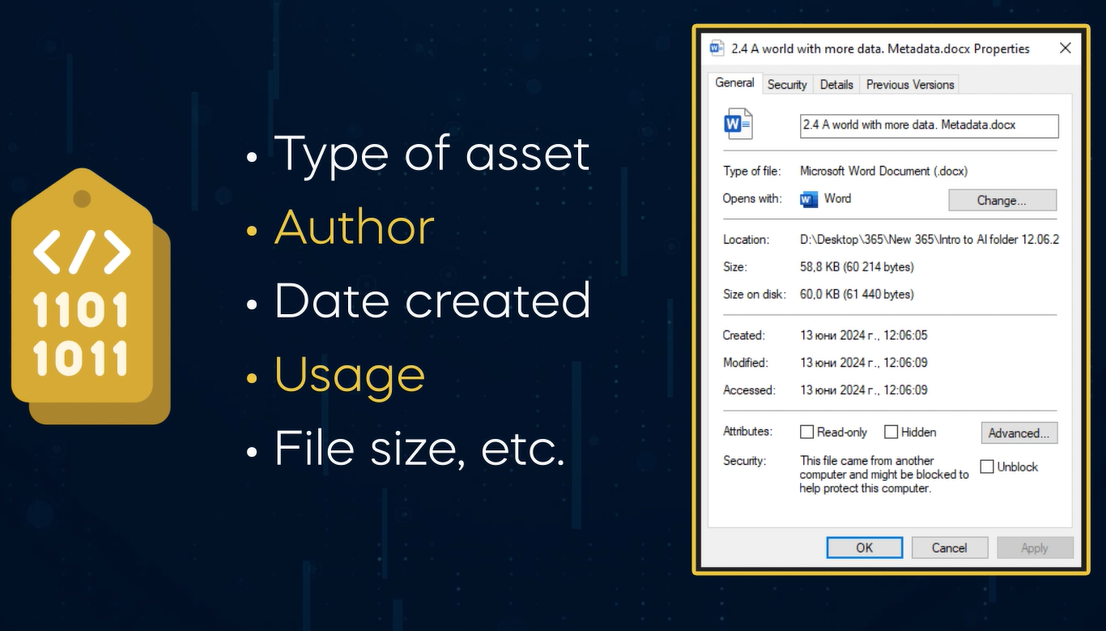

Metadata

Data that describes other data, summarizing key details like file type, author, creation date, and size.

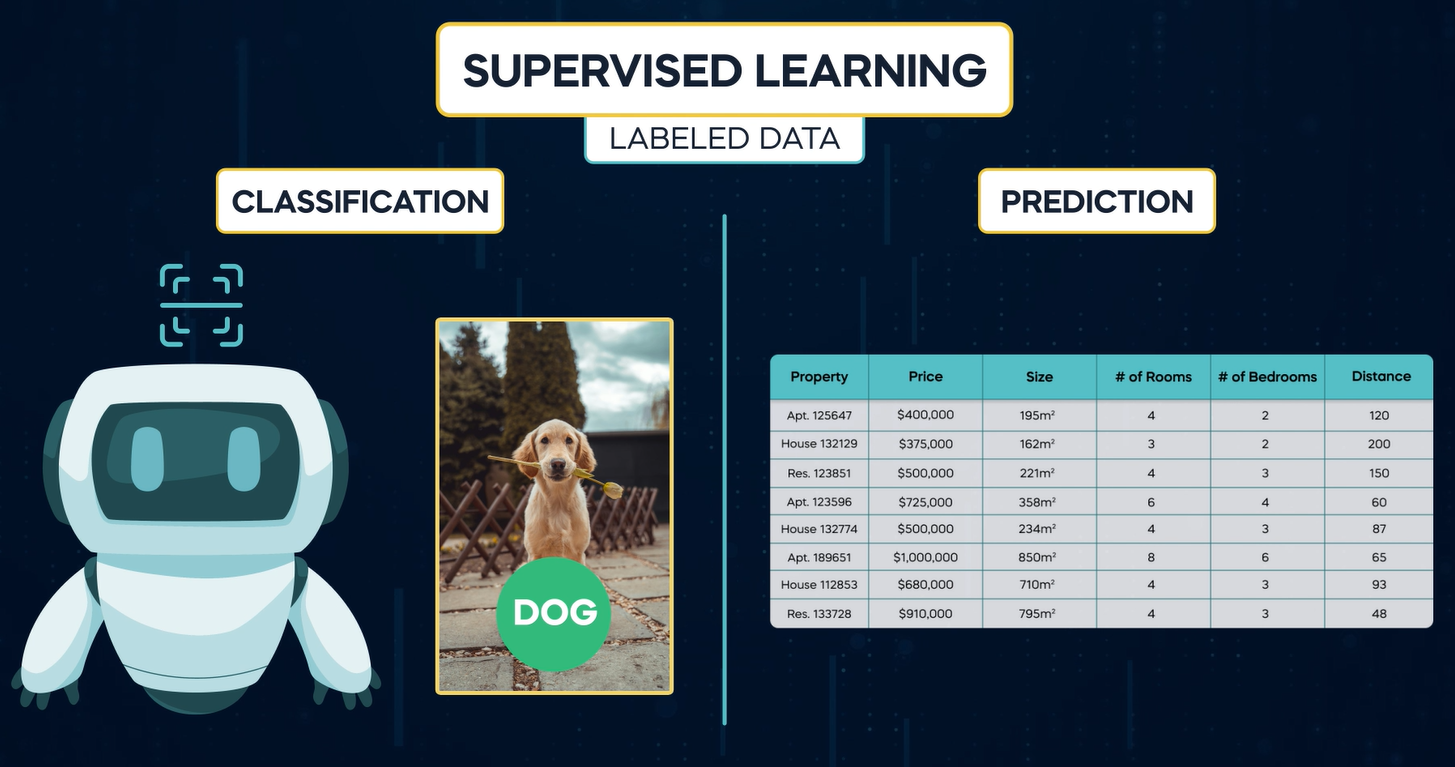

Supervised Learning

ML trained with labeled data to classify or predict outcomes. Example: Predicting home prices based on historical data.
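The home-price example can be sketched with a one-feature linear regression. This is a minimal illustration, not a production approach: the sizes and prices are made-up toy values, and `fit_line` is a hypothetical helper that applies ordinary least squares.

```python
# Hypothetical labeled data: home size (square metres) -> sale price (thousands).
sizes = [50.0, 80.0, 100.0, 120.0]
prices = [150.0, 240.0, 300.0, 360.0]

def fit_line(xs, ys):
    """Fit y = slope * x + intercept with ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept

# "Training" learns the parameters from the labeled examples.
slope, intercept = fit_line(sizes, prices)

# Prediction for a home the model has not seen before.
predicted = slope * 90.0 + intercept
print(f"Predicted price for 90 m^2: {predicted:.1f}")
```

The labels (the known prices) are what make this supervised: the model is corrected against them while fitting.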

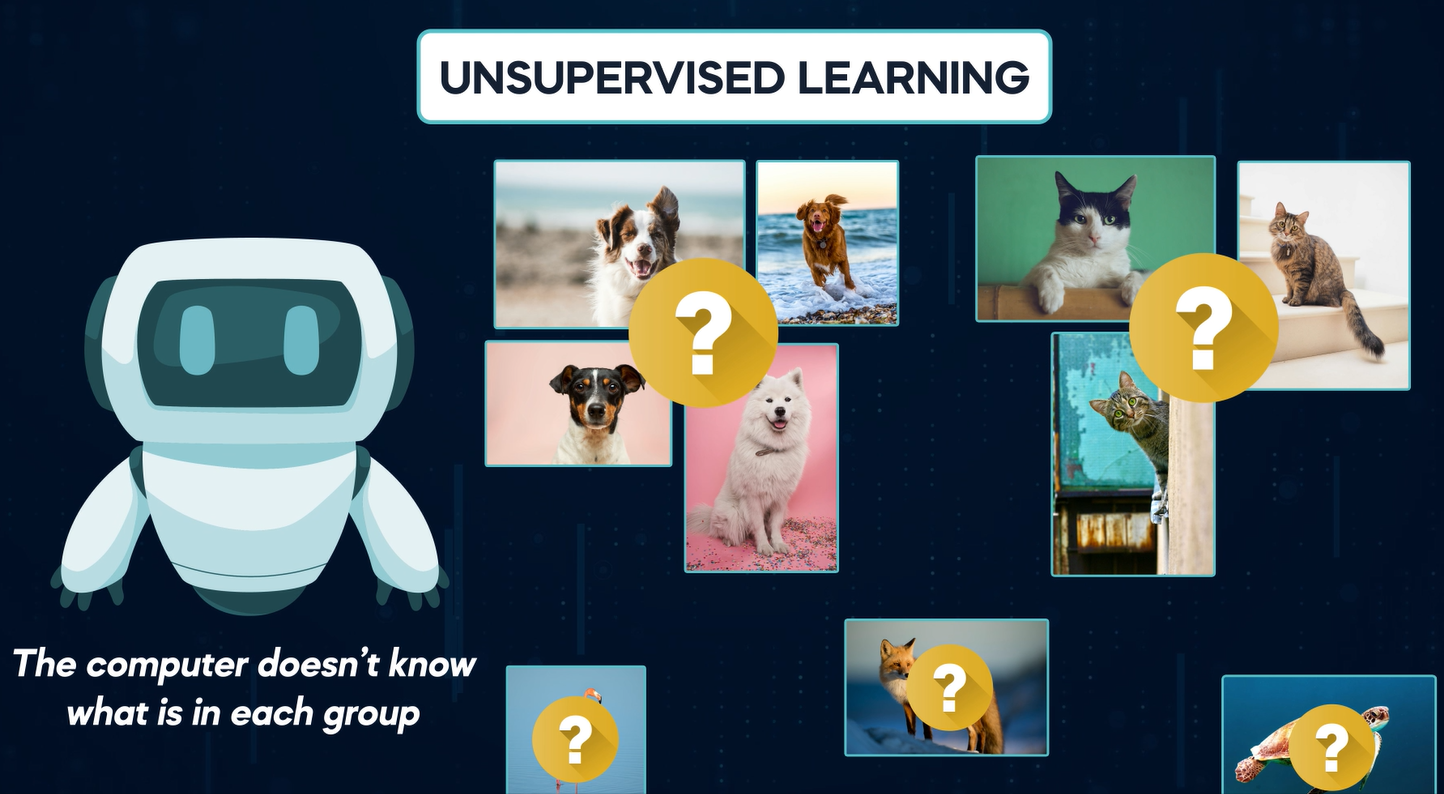

Unsupervised Learning

ML trained with unlabeled data to find patterns or groupings. Example: Grouping customer behaviors in a supermarket.
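Grouping without labels can be sketched with a tiny one-dimensional k-means. The spending figures and starting centers below are assumptions chosen for illustration, and `kmeans_1d` is a hypothetical helper rather than a library function.

```python
def kmeans_1d(values, centers, steps=10):
    """Alternate between assigning points to the nearest center and re-averaging."""
    groups = [[] for _ in centers]
    for _ in range(steps):
        groups = [[] for _ in centers]
        for v in values:
            nearest = min(range(len(centers)), key=lambda i: abs(v - centers[i]))
            groups[nearest].append(v)
        # Keep the old center if a group ends up empty.
        centers = [sum(g) / len(g) if g else c for g, c in zip(groups, centers)]
    return centers, groups

# Hypothetical weekly spend per customer; no labels are provided.
spend = [5.0, 7.0, 6.0, 50.0, 55.0, 52.0]
centers, groups = kmeans_1d(spend, centers=[0.0, 60.0])
print(groups)
```

The algorithm is never told which customers are "low" or "high" spenders; the two groups emerge from the data alone.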

Reinforcement Learning

ML where models learn by trial and error to achieve a goal, guided by feedback. Example: Netflix recommendations improving with user interaction.
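Trial-and-error learning from feedback can be sketched with an epsilon-greedy bandit, one of the simplest reinforcement learning setups. Everything here is an assumption for illustration: two hypothetical items with fixed click rates stand in for recommendations, and a click counts as a reward of 1.

```python
import random

def run_bandit(true_rates, steps=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy: mostly pick the best-looking item, sometimes explore."""
    rng = random.Random(seed)
    counts = [0] * len(true_rates)
    estimates = [0.0] * len(true_rates)
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(len(true_rates))  # explore a random item
        else:
            arm = max(range(len(true_rates)), key=lambda i: estimates[i])  # exploit
        reward = 1.0 if rng.random() < true_rates[arm] else 0.0  # simulated click
        counts[arm] += 1
        # Incremental average of the rewards observed for this item.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts

# Hypothetical click rates: item 1 is liked far more often than item 0.
estimates, counts = run_bandit([0.2, 0.8])
print(counts)
```

The agent is never told which item is better; it discovers this from the rewards and ends up recommending the stronger item most of the time.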

"Garbage In, Garbage Out" (GIGO)

ML performance depends on the quality of training data.

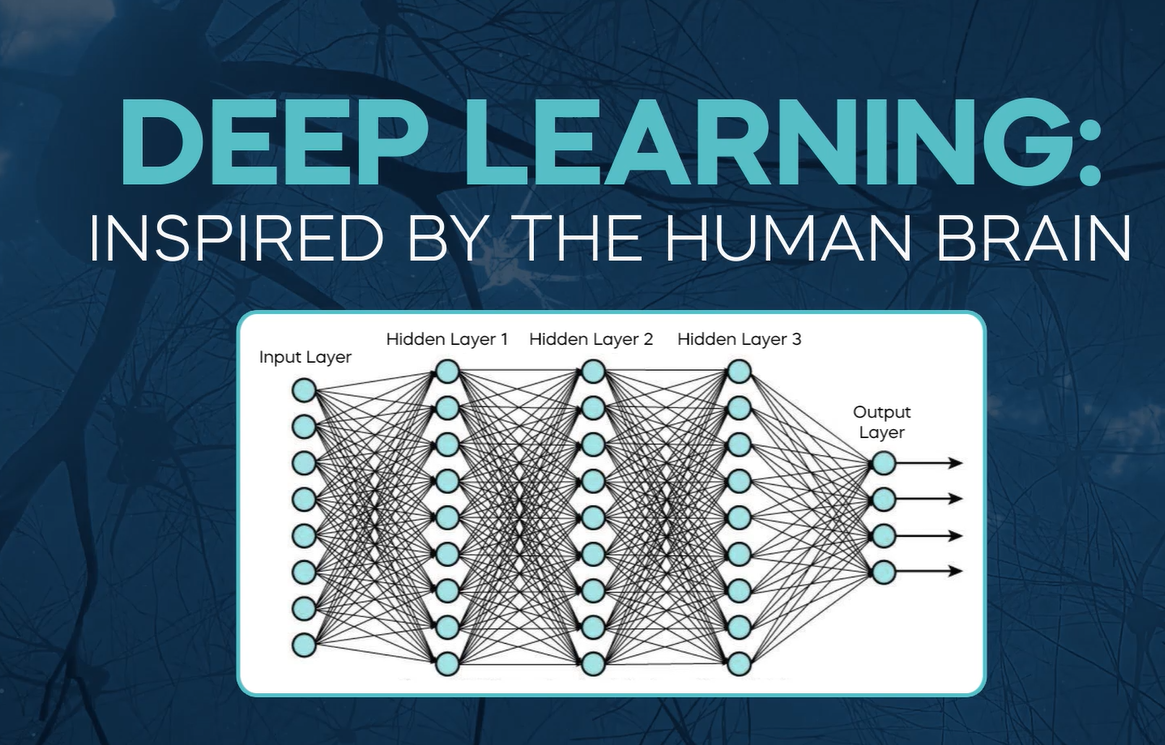

Deep Learning

A subset of ML inspired by the human brain, using artificial neural networks (ANNs) with multiple layers to process and learn complex patterns.

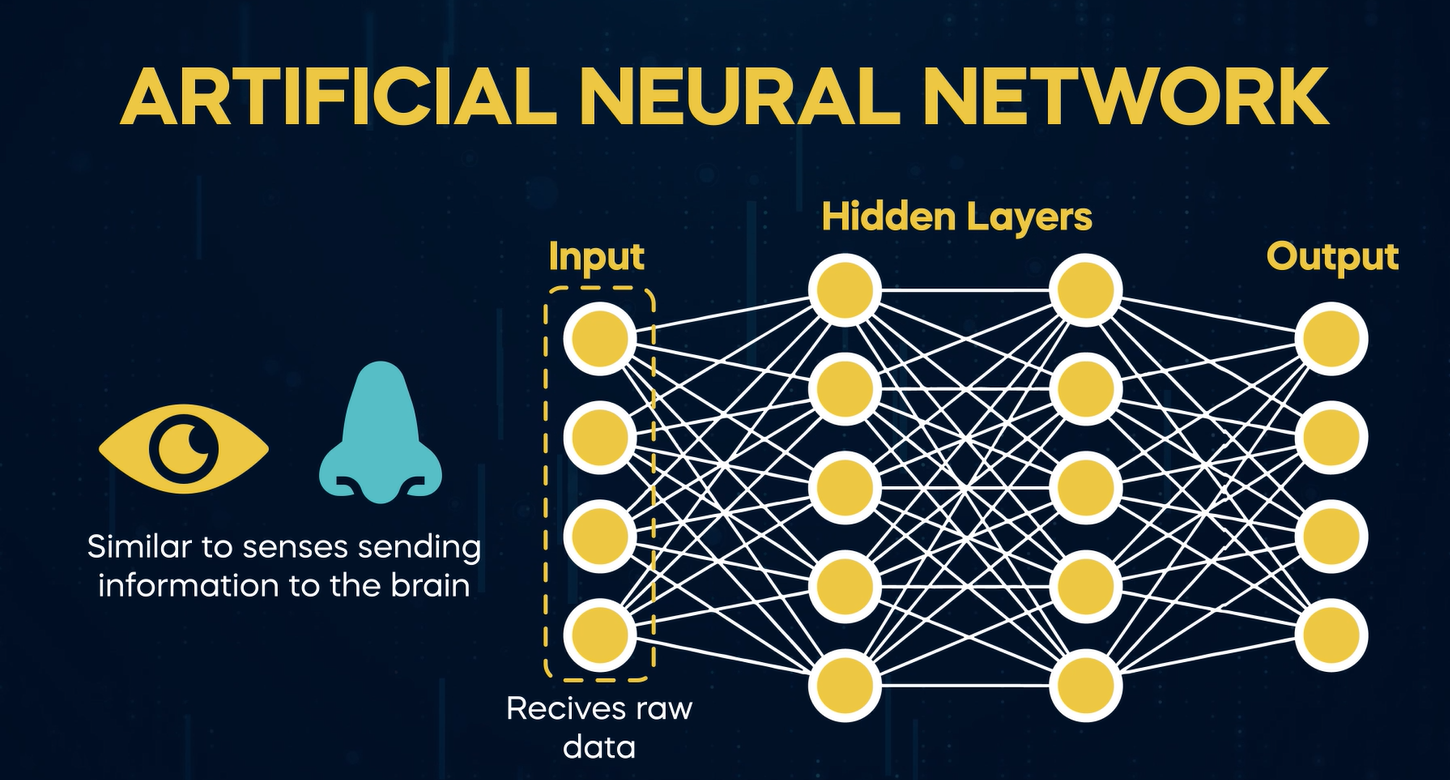

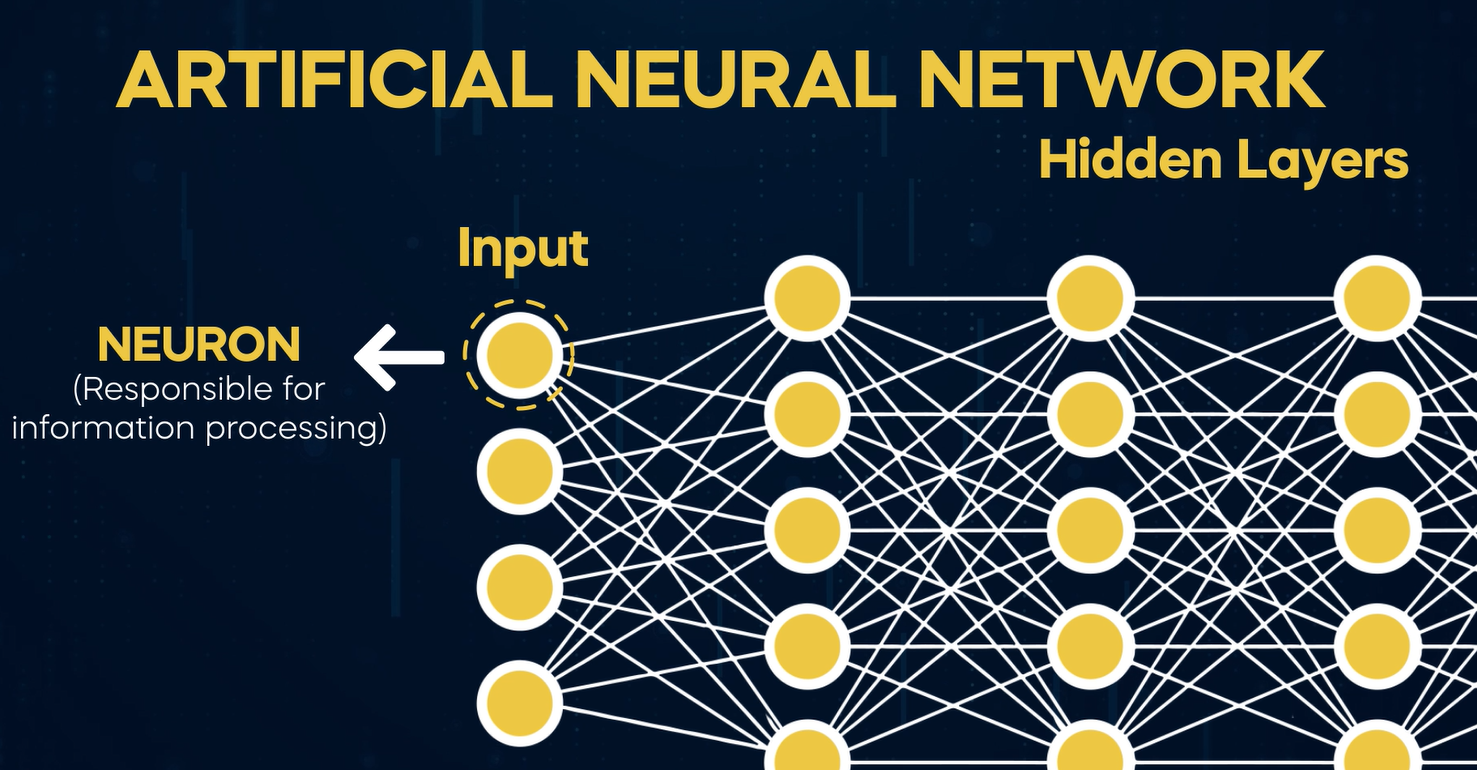

Artificial Neural Network (ANN)

A network with input, hidden, and output layers. Each layer processes data incrementally to form complex patterns.

Input Layer

The first layer of an ANN that receives raw data. Example: Pixel values in an image.

Hidden Layers

Intermediate layers in an ANN that process and transform input data. More layers allow for learning complex features.

Output Layer

The final layer in an ANN that generates the result, such as identifying a digit in an image.

Neurons (Nodes)

Units in each ANN layer responsible for processing information via weights, biases, and activations.

Activation

The value a node holds, representing the strength of its output. Higher values indicate stronger signals.

Weights and Biases

Learnable parameters in ANN layers: weights scale each input to a neuron, and biases shift the result. Training adjusts them to refine predictions.
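How weights, biases, and activations fit together can be sketched for a single neuron. The input values, weights, and the choice of a sigmoid activation function are assumptions for illustration; real networks use many neurons and a variety of activation functions.

```python
import math

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, squashed into (0, 1) by a sigmoid."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical inputs (e.g. two pixel intensities) and parameters.
activation = neuron([0.5, 0.2], weights=[0.4, -0.6], bias=0.1)
print(round(activation, 4))
```

A layer repeats this computation for every neuron, and the resulting activations become the inputs to the next layer.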

Robotics

The interdisciplinary field involving the design, construction, and operation of robots to perform tasks autonomously or with human-like capabilities.

Computer Vision

An AI field teaching machines to interpret and analyze visual information from images and videos.

Convolutional Neural Networks (CNNs)

Specialized neural networks designed for image and video processing, identifying patterns and features in layers.

Generative Adversarial Networks (GANs)

AI models using two competing networks to create and refine realistic images, videos, or text.

Generative AI

AI systems capable of creating new content, such as text, images, videos, audio, or 3D models.

Language Model

An AI system trained to predict the next word in a sentence based on context.

Large Language Models (LLMs)

Neural networks trained on extensive text datasets to generate human-like text, like ChatGPT.

Diffusion Models

AI models that generate images or videos by refining random noise into detailed visuals, as used by DALL-E 2 and later versions.

Hybrid Models

Generative AI approaches that combine multiple techniques, such as LLMs and GANs, for enhanced content generation.

Natural Language Processing (NLP)

A field of computer science focused on enabling computers to understand, interpret, and generate human language.

Rule-Based NLP (1950s)

Early systems used hand-written grammar rules to process text but were limited because every rule had to be created manually.

Statistical NLP (1980s-90s)

Introduced probabilistic methods for understanding language by analyzing patterns and word frequencies.

Vector Embeddings

Numerical representations of words or sentences in high-dimensional space, capturing relationships and meanings.

Neural Networks in NLP

Deep learning models that identify complex patterns for tasks like translation and text generation.

Masked Language Models

Predict missing words regardless of their position in a sentence. Example: “Water (blank) at 0 degrees.”

Autoregressive Models

Predict the next word in a sequence based on preceding words. Example: GPT models.

LLM Scalability

Larger training datasets and more parameters tend to produce more versatile and capable models.

N-Gram Models

Estimate word probabilities based on the preceding n-1 words; because they see so little context, they miss subtle nuances in language.
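A bigram model (n = 2) is the smallest useful case and can be sketched by counting word pairs. The corpus is a made-up toy sentence, and `next_word_probs` is a hypothetical helper.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus.
corpus = "the cat sat on the mat the cat ran".split()

# Count how often each word follows each preceding word.
counts = defaultdict(Counter)
for prev, nxt in zip(corpus, corpus[1:]):
    counts[prev][nxt] += 1

def next_word_probs(prev):
    """Relative frequency of each word seen after `prev` (empty if unseen)."""
    total = sum(counts[prev].values())
    return {word: c / total for word, c in counts[prev].items()}

print(next_word_probs("the"))
```

Because the model looks only one word back, it cannot tell which "the" it is seeing, which is the lack of context the definition describes.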

Recurrent Neural Networks (RNNs)

Neural networks that retain sequential information, improving context understanding but struggling with long text sequences.

Long Short-Term Memory (LSTM)

Improved RNNs using gate architectures to retain relevant information over longer sequences.

Transformers

A breakthrough architecture focusing attention on relevant words or tokens, improving scalability and handling long-range dependencies.

Attention Mechanism

Allows transformers to assign importance scores to words, enabling models to focus on crucial parts of input sequences.
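The importance scores can be sketched with scaled dot-product attention for a single query. The vectors below are hypothetical two-dimensional stand-ins; real transformers learn the query, key, and value vectors and run many attention heads in parallel.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Score each key against the query, then blend the values by those weights."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    output = [sum(w * v[i] for w, v in zip(weights, values))
              for i in range(len(values[0]))]
    return weights, output

# Hypothetical vectors for three tokens.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
weights, output = attend([2.0, 0.0], keys, values)
print([round(w, 3) for w in weights])
```

Tokens whose keys align with the query receive larger weights, so their values dominate the output.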

Model Design

Selecting neural network architecture, depth, and parameters to define the model’s capabilities.

Dataset Engineering

Gathering, cleaning, and structuring data for training while addressing ethical concerns like bias and diversity.

Pretraining

Training models on large datasets to create a raw version capable of general tasks.

Post-Training

Includes supervised fine-tuning and incorporating human feedback to refine the model's performance.

Final Testing

Evaluating the model’s accuracy, speed, and ethical behavior to ensure readiness for end-user deployment.

Prompt Engineering

Guiding models using instructions and examples without modifying their weights.

Retrieval-Augmented Generation (RAG)

Attaching an external knowledge source, such as a database, to the model so that relevant passages are retrieved and added to the prompt, expanding its context without altering weights.
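The retrieve-then-prompt flow can be sketched without any model at all. In this simplified illustration, word overlap stands in for the embedding-based retrieval a real system would likely use, and the documents, `retrieve`, and `build_prompt` are all hypothetical.

```python
# Hypothetical knowledge base.
documents = [
    "Water freezes at 0 degrees Celsius.",
    "The Turing Test was proposed by Alan Turing.",
    "Transformers rely on an attention mechanism.",
]

def tokenize(text):
    """Lowercase the text and split it into a set of words."""
    return set(text.lower().replace(".", "").replace("?", "").split())

def retrieve(question, docs):
    """Return the document sharing the most words with the question."""
    q = tokenize(question)
    return max(docs, key=lambda d: len(q & tokenize(d)))

def build_prompt(question, docs):
    """Place the retrieved passage in the prompt; the model's weights are untouched."""
    context = retrieve(question, docs)
    return f"Context: {context}\nQuestion: {question}\nAnswer using only the context."

prompt = build_prompt("Who proposed the Turing Test?", documents)
print(prompt)
```

The resulting prompt would then be sent to a language model, which can answer from the supplied context instead of relying only on what it learned in training.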

Fine-Tuning

Training an existing model with additional data to update its weights, making it more specialized or efficient.

Foundation Models

Massive AI models trained on diverse data, enabling general-purpose capabilities across various domains.

Multi-Modal Models

Foundation models that process multiple data types, such as text, images, and videos, extending beyond traditional language tasks.

Hallucinations

When AI generates false or inaccurate responses due to errors in prediction or training data.

Inconsistencies

When AI produces varying answers to the same question, often due to random sampling during text generation or to hardware and hosting differences.

Mitigation Strategies

Using prompts like "Only respond if you know the answer" or optimizing hardware allocation for consistent outputs.

Data Scarcity

As LLMs process most publicly available data, new and unique training content becomes harder to source.

API (Application Programming Interface)

A bridge enabling communication between a client (e.g., app) and a server to request and retrieve data.

Vector Databases

Store and organize unstructured data (e.g., text, images) as numerical embeddings in high-dimensional spaces.

Similarity Search

Indexes vectors to group similar data, enabling fast retrieval and contextual searches (e.g., YouTube video recommendations).
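A brute-force version of similarity search can be sketched with cosine similarity. The three-dimensional vectors are made-up stand-ins for real embeddings, and production vector databases typically use approximate indexes rather than comparing against every stored vector.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings stored in the "database".
store = {
    "cat video": [0.9, 0.8, 0.1],
    "dog video": [0.8, 0.9, 0.2],
    "car review": [0.1, 0.2, 0.9],
}

def search(query_vector, k=2):
    """Rank stored items by similarity to the query and return the top k names."""
    ranked = sorted(store, key=lambda name: cosine_similarity(query_vector, store[name]),
                    reverse=True)
    return ranked[:k]

results = search([0.85, 0.85, 0.1])
print(results)
```

Items about similar topics sit close together in the vector space, so the two pet videos rank above the car review for a pet-like query.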

Long-Term Memory

Vector databases give LLMs memory by storing past interactions, enhancing contextual understanding in chatbots and assistants.

Open-Source AI

Community-driven, freely accessible AI models that foster collaboration and innovation (e.g., Meta’s Llama).

Closed-Source AI

Proprietary models offering plug-and-play convenience, robust APIs, customer support, and stronger data security.

Hugging Face

A platform for sharing ML models, datasets, and applications, often called the GitHub of Machine Learning.

Transformers Library

Simplifies access to pre-trained models and facilitates creating ML pipelines.

LangChain

An open-source framework for integrating multiple AI models and external data sources into apps using modular components.

AI as a Judge

Using one AI model to evaluate the output of another for tasks like coding or open-ended questions.

AI Strategist

A professional aligning AI projects with business strategies to maximize impact and ensure effective implementation.

AI Developer

Builds foundational AI models, requiring advanced technical and research skills.

AI Engineer

Integrates foundation models into products, optimizing performance with techniques like fine-tuning and RAG.