Machine Learning for Natural Disaster Relief

Every year, natural disasters affect approximately 160 million people worldwide.

With more than 400 natural disasters in 2019 alone, relief agencies and governments are struggling to turn the overwhelming amount of data these events generate into actionable insights.

Fortunately, the latest developments in machine learning and artificial intelligence enable researchers, engineers, and scientists to explore and analyze various data sources more efficiently than ever before.

So how can machine learning improve disaster relief efforts?

Appsilon Data Science came up with an innovative solution.

As an entry to the xView2 competition, organized by the Defense Innovation Unit of the United States Department of Defense, the company created machine learning models that assess structural damage by analyzing before-and-after satellite images of natural disasters. The team built the models with PyTorch and used fast.ai to develop their critical parts.

Appsilon’s Machine Learning for Natural Disaster Relief: How does it work?

The Appsilon Data Science Machine Learning team built ML models using the xBD dataset, which comprises data across 8 disaster types, 15 countries, and thousands of square kilometers of imagery. The goal of the models was to assess damage to infrastructure, reducing the manual labor involved and shortening the time needed to plan an adequate response.

The models not only achieve high accuracy but also come with an intuitive user interface that lets anyone benefit from their capabilities. The interface was developed in Shiny using Appsilon’s own shiny.semantic open source package.

Appsilon’s Machine Learning for Natural Disaster Relief: What are the Models' Technical Specifics?

The Damage Assessment App

Appsilon Data Science implemented their models in a Shiny app that lets users explore the impact of four real-life natural disasters by running the models on built-in scenarios: Hurricane Florence, the Santa Rosa Wildfire, the Midwest Flooding, and the Palu Tsunami.

The last of these resulted from the September 2018 earthquake in Indonesia and caused considerable property damage in and around Palu, the capital of Central Sulawesi province.

The Dataset

The xBD dataset consists of satellite imagery for several regions recently harmed by natural disasters. The dataset is quite varied, as it covers a wide range of affected locations, from remote forests, through industrial areas with large buildings, to high-density urban landscapes.

The main challenges the team faced were the diverse locations and building sizes, as well as the variety of disasters.

Apart from localizing the buildings, the model also had to assess structural damage. That meant using one approach for areas destroyed by fire and a different one for areas destroyed by flood. The disaster types in the dataset included volcanic eruptions, hurricanes, floods, tsunamis, wildfires, tornadoes, and bushfires.

In terms of imagery, the availability of ‘before’ and ‘after’ images of affected areas turned out to be crucial for speeding up humanitarian response. Saving response planners endless hours of searching through thousands of images or conducting face-to-face surveys allows them to focus their limited resources on taking proper action and, consequently, on saving more lives.

The Machine Learning Pipeline

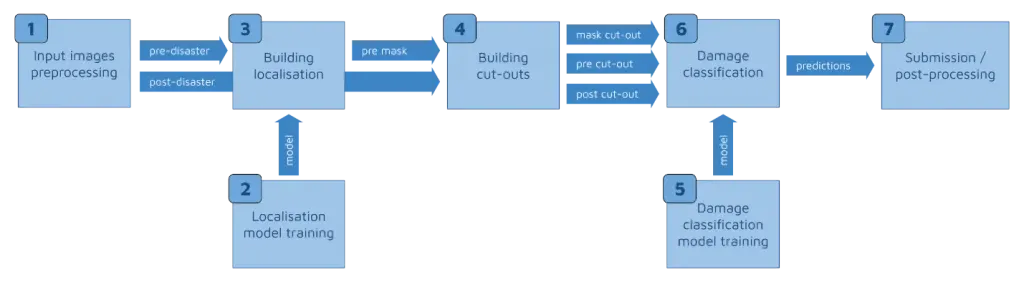

Assessing building damage from satellite imagery with high accuracy involves two tasks: building localization and damage assessment. Although a single model can handle both, Appsilon Data Science decided to build a separate model for each task.

This turned out to be quite challenging, as ‘each model requires a separate training dataset with the same preprocessing schedule’. Preprocessing involves changing the color histograms of the images, cropping, padding, and flipping, among other data augmentation methods. Naturally, managing such a large number of moving parts was a struggle. Moreover, running inference with preprocessing parameters that differed even slightly from those used in training would result in a very low score.
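One way to keep training and inference preprocessing from drifting apart is to put every parameter in a single immutable config object, fingerprint it, and store the fingerprint with the trained model. The Python sketch below assumes that approach; `PreprocessConfig`, its fields, and the helper functions are hypothetical names rather than Appsilon’s code, and the sketch covers only cropping, padding, and flipping on a single-channel image stored as nested lists.

```python
import hashlib
import json
from dataclasses import asdict, dataclass

Image = list[list[int]]  # a single-channel image as rows of pixel values


@dataclass(frozen=True)
class PreprocessConfig:
    """Hypothetical preprocessing parameters shared by training and inference."""
    crop_size: int = 4  # side length of the centre crop, in pixels
    pad: int = 1        # zero padding added on every side after cropping

    def fingerprint(self) -> str:
        """Stable hash of the parameters, saved alongside a trained model."""
        payload = json.dumps(asdict(self), sort_keys=True).encode()
        return hashlib.sha256(payload).hexdigest()[:12]


def center_crop(img: Image, size: int) -> Image:
    top = (len(img) - size) // 2
    left = (len(img[0]) - size) // 2
    return [row[left:left + size] for row in img[top:top + size]]


def zero_pad(img: Image, pad: int) -> Image:
    width = len(img[0]) + 2 * pad
    body = [[0] * pad + row + [0] * pad for row in img]
    return [[0] * width for _ in range(pad)] + body + [[0] * width for _ in range(pad)]


def preprocess(img: Image, cfg: PreprocessConfig, flip: bool = False) -> Image:
    out = zero_pad(center_crop(img, cfg.crop_size), cfg.pad)
    if flip:  # horizontal flip: a training-time augmentation only
        out = [row[::-1] for row in out]
    return out


def check_compatible(trained_fingerprint: str, cfg: PreprocessConfig) -> None:
    """Refuse to run inference with parameters that differ from training."""
    if trained_fingerprint != cfg.fingerprint():
        raise ValueError("inference preprocessing differs from training preprocessing")


# Usage: record the fingerprint at training time, verify it before inference.
train_cfg = PreprocessConfig()
model_metadata = {"preprocess": train_cfg.fingerprint()}
check_compatible(model_metadata["preprocess"], PreprocessConfig())  # passes
```

Passing `PreprocessConfig(pad=2)` at inference time instead would raise a `ValueError`, turning a silent drop in score into an explicit error.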

So how did Appsilon avoid this risk?

By employing an appropriate ML pipeline, which kept the training process fully reproducible and, at the same time, efficient. The team based the pipeline on their internal framework. Because the framework memorized the results of every step, those results could be reused, and whenever any hyperparameters or dependencies changed, it automatically re-ran the affected computations. All of this resulted in a much faster and less error-prone development process.
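Appsilon’s internal framework is not described in detail here, so the Python sketch below only illustrates the general idea: each step’s output is cached under a key derived from the step name, its hyperparameters, and the keys of its upstream steps. Changing a parameter therefore re-runs that step and everything downstream of it, while unchanged steps are reused. `Pipeline`, `StepResult`, and `step` are hypothetical names, and the cache lives in memory rather than on disk.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Any, Callable, Sequence


@dataclass(frozen=True)
class StepResult:
    key: str    # cache key; downstream keys are derived from it
    value: Any  # the step's output


class Pipeline:
    """Minimal memoizing pipeline kept in memory (a real one would persist results)."""

    def __init__(self) -> None:
        self._cache: dict[str, Any] = {}
        self.runs: list[str] = []  # names of steps that were actually computed

    def step(
        self,
        name: str,
        fn: Callable[..., Any],
        params: dict[str, Any],
        deps: Sequence[StepResult] = (),
    ) -> StepResult:
        """Return fn(*dep values, **params), computing it only on a cache miss.

        The key covers the step name, its JSON-serializable params and the
        keys of its dependencies, so an upstream change invalidates this step.
        Changes to fn's source code are NOT tracked in this sketch.
        """
        payload = json.dumps(
            {"name": name, "params": params, "deps": [d.key for d in deps]},
            sort_keys=True,
        )
        key = hashlib.sha256(payload.encode()).hexdigest()
        if key not in self._cache:
            self.runs.append(name)
            self._cache[key] = fn(*(d.value for d in deps), **params)
        return StepResult(key, self._cache[key])


# Usage: a two-step toy pipeline.
pipe = Pipeline()
raw = pipe.step("load", lambda n: list(range(n)), {"n": 3})
scaled = pipe.step("scale", lambda xs, factor: [x * factor for x in xs], {"factor": 2}, deps=[raw])
```

Calling both steps again with the same parameters computes nothing new; changing `n` in the first step invalidates both, because the second step’s key includes the first step’s key.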

Here are the specific steps:

But it wasn’t just the ML pipeline that enabled the team to deliver an accurate model. Two other techniques also helped them achieve that within a limited timeframe: transfer learning for localization and 7-channel input for classification.


Transfer learning for localization

Appsilon chose one of the best models developed for a SpaceNet competition. Then, they used transfer learning to apply it to building damage assessment in natural disaster response.

Localization is critical to the model’s accuracy. After all, the model can’t classify damage to a building unless it finds the building first. In a team member’s own words, ‘the better the localization model, the higher the potential score for damage assessment’.

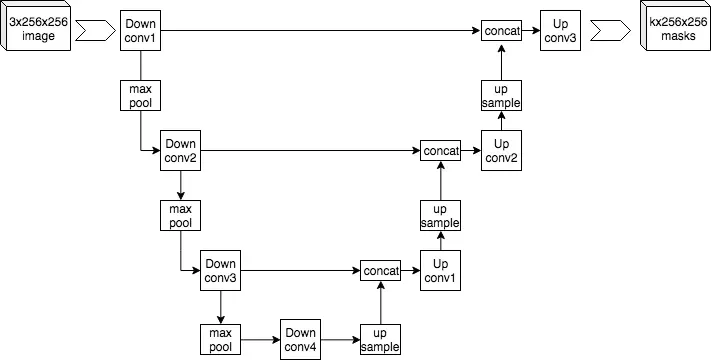

The team used neural networks based on the UNet architecture, which is well suited to segmentation problems like this one.

UNet architectures first encode the image into a lower-resolution representation and then decode it back into a segmentation mask. Still, training appropriate weights for such a segmentation task can take a lot of time and effort.
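To make the encode-then-decode idea concrete, the Python sketch below traces feature-map shapes (channels, height, width) through a UNet-style network whose encoder uses VGG-16’s channel widths. It is a shape calculation, not a trainable model, and its layout assumptions (one 2x downsampling per encoder stage after the first, a skip connection concatenated at each decoder stage, a final 1-channel mask) are illustrative rather than a description of the SpaceNet4 model.

```python
Shape = tuple[int, int, int]  # (channels, height, width)

# Output channels of VGG-16's five convolutional blocks.
VGG16_WIDTHS = (64, 128, 256, 512, 512)


def unet_shapes(
    height: int,
    width: int,
    widths: tuple[int, ...] = VGG16_WIDTHS,
    in_channels: int = 3,
    out_channels: int = 1,
) -> list[tuple[str, Shape]]:
    """Trace feature-map shapes through a simplified UNet.

    Assumed layout: encoder stage i outputs widths[i] channels and every stage
    after the first halves the resolution; each decoder stage doubles the
    resolution, concatenates the matching encoder output (skip connection)
    and reduces back to that encoder stage's width; a 1x1 convolution then
    produces the segmentation mask.
    """
    factor = 2 ** (len(widths) - 1)
    if height % factor or width % factor:
        raise ValueError(f"height and width must be divisible by {factor}")

    trace: list[tuple[str, Shape]] = [("input", (in_channels, height, width))]
    skips: list[Shape] = []
    h, w = height, width
    for i, c in enumerate(widths):  # encoder: downsample, widen
        if i > 0:
            h, w = h // 2, w // 2
        skips.append((c, h, w))
        trace.append((f"enc{i + 1}", (c, h, w)))

    c = widths[-1]
    for i in range(len(widths) - 2, -1, -1):  # decoder: upsample, merge skip
        skip_c, _, _ = skips[i]
        h, w = h * 2, w * 2
        trace.append((f"dec{i + 1} concat", (c + skip_c, h, w)))
        c = skip_c
        trace.append((f"dec{i + 1}", (c, h, w)))

    trace.append(("mask", (out_channels, h, w)))
    return trace


for name, shape in unet_shapes(256, 256):
    print(name, shape)
```

For a 256x256 RGB input, the deepest encoder stage works at 16x16 resolution, and the decoder restores the full 256x256 size for the mask, which is why the output can be compared pixel by pixel with building footprints.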

Fortunately, localizing buildings in images is a well-studied problem. So, to build their model, the Appsilon team drew on existing, cutting-edge localization solutions developed through a series of SpaceNet challenges. Specifically, they used the XDXD pre-trained weights, created for one of the top SpaceNet4 solutions and built using a VGG-16 encoder.

UNet architecture example by Mehrdad Yazdani – Own work, CC BY-SA 4.0, Creative Commons

Once the model with the pre-trained weights was ready, they continued training it on the xView2 data. This improved its accuracy while requiring much less computation than training a model from scratch.
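Continuing to train from existing weights is often called warm-starting. A common way to do it is to copy every pretrained parameter whose name and shape match the new model and leave the rest at their fresh initialization; in PyTorch this is typically done by filtering a pretrained `state_dict` before passing it to `load_state_dict`. The framework-free Python sketch below shows only that matching logic; `Param` and `warm_start` are hypothetical names, and the parameter names in the example are made up.

```python
from dataclasses import dataclass


@dataclass
class Param:
    shape: tuple[int, ...]
    values: list[float]  # flattened parameter values


def warm_start(
    model: dict[str, Param], pretrained: dict[str, Param]
) -> tuple[list[str], list[str]]:
    """Copy pretrained values into `model` wherever name and shape both match.

    Returns (loaded, kept): parameters initialized from the pretrained
    weights, and parameters left at their fresh initialization (missing from
    the checkpoint or with a different shape, e.g. a new output layer).
    """
    loaded, kept = [], []
    for name, param in model.items():
        source = pretrained.get(name)
        if source is not None and source.shape == param.shape:
            param.values = list(source.values)  # copy, don't alias the checkpoint
            loaded.append(name)
        else:
            kept.append(name)
    return loaded, kept


# Hypothetical example: the encoder matches the checkpoint, the head does not.
model = {
    "encoder.conv1.weight": Param((2,), [0.0, 0.0]),
    "head.weight": Param((1,), [0.0]),
}
checkpoint = {
    "encoder.conv1.weight": Param((2,), [0.5, -0.5]),
    "head.weight": Param((3,), [1.0, 2.0, 3.0]),
}
loaded, kept = warm_start(model, checkpoint)
```

Training then continues on the new data from these values instead of from random ones, so the encoder starts out already knowing what buildings in satellite imagery look like.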

7-Channel input for classification

Appsilon further accelerated the training of the damage assessment model by employing a 7th channel for identifying the building location in an image.

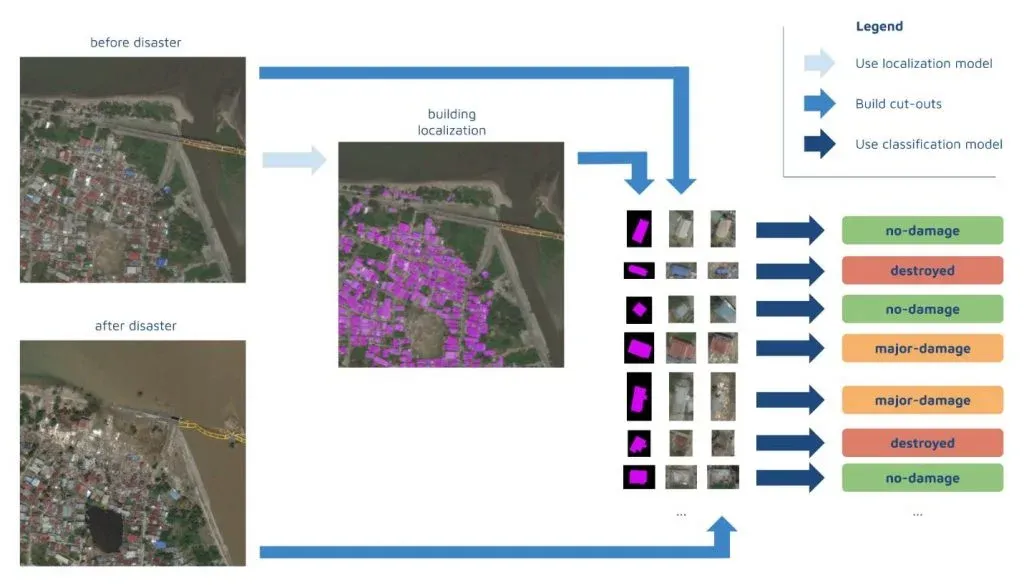

The localization model enabled them to identify the location of each individual building.

They then cut each building out of the larger image, along with a small portion of the surrounding area.

This was necessary because a building’s damage score sometimes depended on its surroundings. For example, a building with an undamaged roof was still scored as having sustained significant damage because it was fully surrounded by floodwater. As a result, Appsilon Data Science had two cutouts for each building, one depicting its pre-disaster state and one its post-disaster state, each with 3 channels (RGB).
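The cutting step can be sketched as follows: grow the building’s bounding box by a margin of surrounding pixels, clip it to the image borders, and apply the same window to the pre- and post-disaster images so the two cutouts stay aligned. This Python sketch assumes the localization output has already been turned into a bounding box `(top, left, bottom, right)` with exclusive bottom and right edges; the function names and the default margin are illustrative assumptions.

```python
Plane = list[list[int]]
Image = list[Plane]  # channel-first: one plane per channel (e.g. R, G, B)
Box = tuple[int, int, int, int]  # (top, left, bottom, right), bottom/right exclusive


def context_window(bbox: Box, margin: int, height: int, width: int) -> Box:
    """Grow a building's bounding box by `margin` pixels, clipped to the image."""
    top, left, bottom, right = bbox
    return (
        max(0, top - margin),
        max(0, left - margin),
        min(height, bottom + margin),
        min(width, right + margin),
    )


def crop(image: Image, window: Box) -> Image:
    """Cut the same window out of every channel of a channel-first image."""
    top, left, bottom, right = window
    return [[row[left:right] for row in plane[top:bottom]] for plane in image]


def building_cutouts(pre: Image, post: Image, bbox: Box, margin: int = 16):
    """Return aligned pre- and post-disaster cutouts plus the window used."""
    height, width = len(pre[0]), len(pre[0][0])
    window = context_window(bbox, margin, height, width)
    return crop(pre, window), crop(post, window), window
```

Returning the window as well makes it easy to cut the matching region out of any other per-pixel layer, such as a localization mask.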

In addition, they used a 7th channel: a mask highlighting the location of the building within the cutout. The 7th channel lets the classification model quickly identify the important part of the image, namely the building itself. It’s true that such an addition increases the size of the model and could make inference a bit slower. Even so, it accelerates training, because the model learns faster where to focus its attention. And, at the end of the day, that results in better accuracy.
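Once the cutouts exist, assembling the input amounts to stacking three pre-disaster RGB channels, three post-disaster RGB channels, and one binary building mask, for 3 + 3 + 1 = 7 channels. In the Python sketch below the mask is drawn from the building’s bounding box within the cutout as a simplification (a pixel-level mask from the localization model could take its place); the function names are hypothetical.

```python
Plane = list[list[int]]
Box = tuple[int, int, int, int]  # (top, left, bottom, right) in the cutout, exclusive ends


def building_mask(height: int, width: int, bbox: Box) -> Plane:
    """Binary mask: 1 inside the building's box, 0 for the surroundings."""
    top, left, bottom, right = bbox
    return [
        [1 if top <= y < bottom and left <= x < right else 0 for x in range(width)]
        for y in range(height)
    ]


def seven_channel_input(
    pre_rgb: list[Plane], post_rgb: list[Plane], mask: Plane
) -> list[Plane]:
    """Stack pre RGB (3) + post RGB (3) + mask (1) into a channel-first input."""
    if len(pre_rgb) != 3 or len(post_rgb) != 3:
        raise ValueError("pre and post cutouts must each have 3 (RGB) channels")
    channels = [*pre_rgb, *post_rgb, mask]
    size = (len(mask), len(mask[0]))
    if any((len(c), len(c[0])) != size for c in channels):
        raise ValueError("all channels must share the same height and width")
    return channels
```

Because the mask travels with the image data, the classifier receives the building’s position explicitly instead of having to infer which object in the cutout it is meant to score.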

Machine Learning for Natural Disaster Relief: How Can You Contribute?

We believe that Appsilon Data Science’s machine learning solution for disaster relief is a great example of how the data science community can help solve one of the most serious issues of our day. As part of its AI for Good initiative, the company reaches out to members of the tech community around the world who are willing to use their expertise to support those working on the front lines of natural disaster management and response, empowering them with the latest cutting-edge solutions.

Master the Machine Learning Process A-Z to be able to contribute to this and other meaningful projects.