Credit Risk Modeling in Python

bestseller

Blend credit risk modeling skills with Python programming: learn how to estimate the expected loss of a bank's loan portfolio.

What you get:

- 8 hours of content

- 80 interactive exercises

- 119 downloadable resources

- World-class instructor

- Closed captions

- Q&A support

- Future course updates

- Course exam

- Certificate of achievement

$99.00

Lifetime access

What You Learn

- Expand your business acumen by gaining a deep understanding of retail banking processes and learning to identify key value drivers

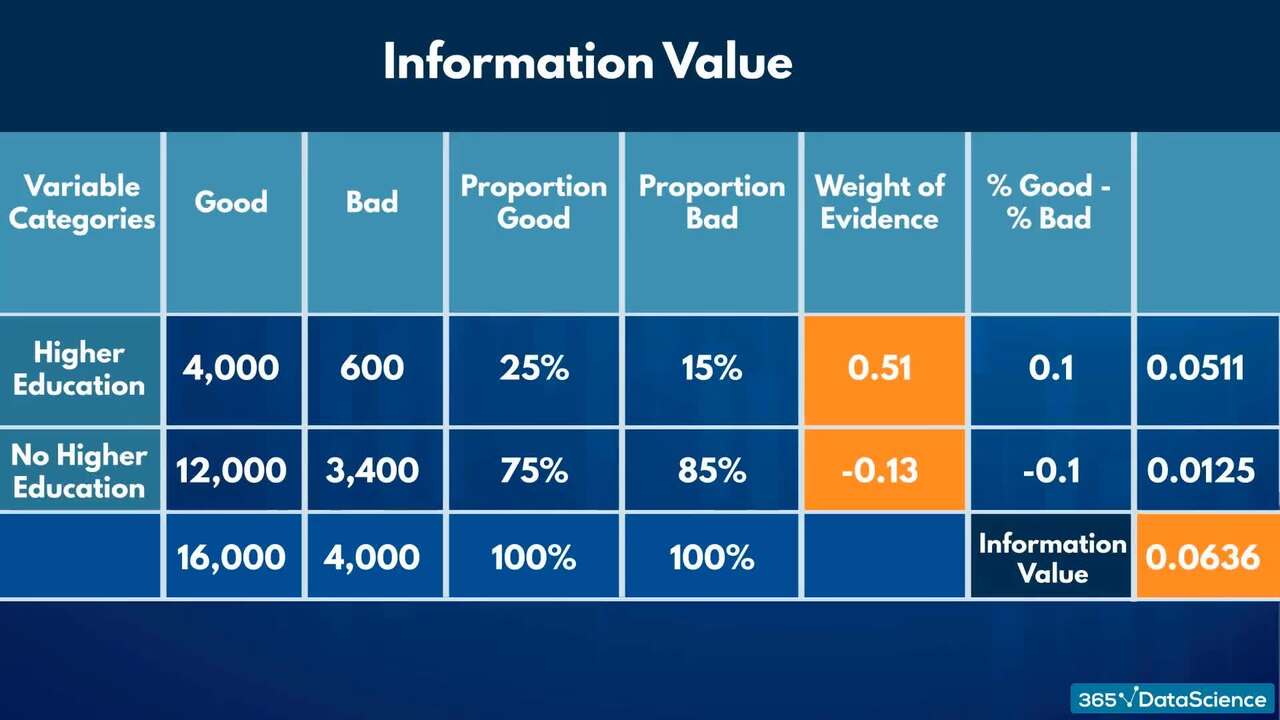

- Gain comprehensive credit risk modeling knowledge, including concepts such as Basel II, probability of default (PD), loss given default (LGD), and exposure at default (EAD)

- Apply logistic regression in Python to predict credit risk

- Boost your data pre-processing skills by cleaning real-life loan portfolio data

- Acquire specialized credit risk modeling skills and differentiate your data scientist resume

- Secure a competitive edge over other candidates when applying for retail banking data scientist roles
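The three components named above combine into expected loss through the standard relationship EL = PD × LGD × EAD. The snippet below is a minimal sketch of that arithmetic, not material from the course: the function name `expected_loss` and the two sample loans are hypothetical and chosen only for illustration.

```python
def expected_loss(pd_, lgd, ead):
    """Expected loss of one loan: EL = PD * LGD * EAD.

    pd_ : probability of default, between 0 and 1
    lgd : loss given default, as a share of exposure, between 0 and 1
    ead : exposure at default, in currency units
    """
    if not 0.0 <= pd_ <= 1.0:
        raise ValueError("PD must lie between 0 and 1")
    if not 0.0 <= lgd <= 1.0:
        raise ValueError("LGD must lie between 0 and 1")
    if ead < 0:
        raise ValueError("EAD must be non-negative")
    return pd_ * lgd * ead


# Hypothetical loans, invented for illustration only.
loans = [
    {"pd": 0.02, "lgd": 0.45, "ead": 10_000.0},
    {"pd": 0.10, "lgd": 0.60, "ead": 5_000.0},
]

# Portfolio expected loss is the sum of the per-loan expected losses.
portfolio_el = sum(expected_loss(l["pd"], l["lgd"], l["ead"]) for l in loans)
print(round(portfolio_el, 2))
```

In practice, PD, LGD, and EAD are each estimated with a statistical model rather than supplied by hand; here they are fixed inputs so the formula itself stays visible.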

Top Choice of Leading Companies Worldwide

Industry leaders and professionals globally rely on this top-rated course to enhance their skills.

Course Description

Learn for Free

1.1 What does the course cover

5 min

1.2 What is credit risk and why is it important?

5 min

1.3 Expected loss (EL) and its components: PD, LGD and EAD

4 min

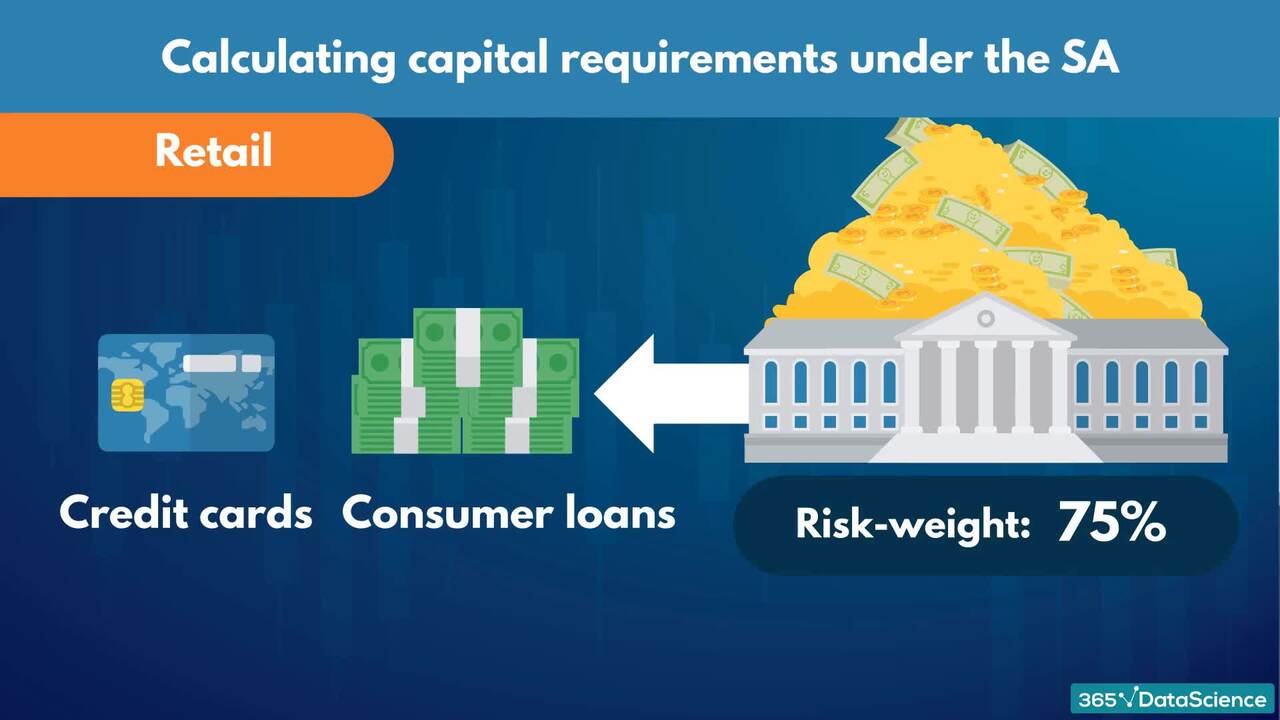

1.4 Capital adequacy, regulations, and the Basel II accord

5 min

1.5 Basel II approaches: SA, F-IRB, and A-IRB

10 min

1.6 Different facility types (asset classes) and credit risk modeling approaches

9 min

Course Requirements

- You need to complete an introduction to Python before taking this course

- Basic skills in statistics, probability, and linear algebra are required

- It is highly recommended to take the Machine Learning in Python course first

- You will need to install the Anaconda distribution, which includes Jupyter Notebook

Who Should Take This Course?

Level of difficulty: Advanced

- Aspiring data scientists

- Current data scientists who are passionate about acquiring domain-specific knowledge in credit risk modeling

Exams and Certification

A 365 Data Science Course Certificate is an excellent addition to your LinkedIn profile, demonstrating your expertise and willingness to go the extra mile to accomplish your goals.

Meet Your Instructor

Nikolay is a Director of Data Science and Automation at KBC Group. He has a solid background in marketing analytics, risk modeling, and research. A Master’s degree in Science and a Ph.D. in Economics and Business Administration have given Nikolay vast experience in the academic world. He spent over six years in the field of research at HEC Paris, BI Norwegian Business School, and the University of Texas at Austin, U.S. In addition, Nikolay has worked on numerous projects for Coca-Cola Hellenic and Shawbrook Bank (UK) that involved building highly accurate quantitative models and solutions for customer portfolio management, credit risk, social media marketing research, and psychological targeting.

365 Data Science Is Featured at

Our top-rated courses are trusted by businesses worldwide.