Machine Learning with Ridge and Lasso Regression

Master regularization with ridge and lasso regression: from theoretical foundations to practical applications

Start for Free

What you get:

- 1 hour of content

- 20 interactive exercises

- 7 downloadable resources

- World-class instructor

- Closed captions

- Q&A support

- Future course updates

- Course exam

- Certificate of achievement

$99.00

Lifetime access

What You Learn

- Master ridge and lasso regression to elevate your data analysis skills to the next level

- Gain a deep understanding of ridge and lasso regularization and how they can be applied to solve real-world problems

- Understand the strengths and limitations of ridge and lasso regression and master their use to reduce overfitting

- Explore the key differences between ridge and lasso regression and learn how to choose the right method for your use case

- Integrate essential math concepts with hands-on Python programming skills

- Develop the skills to independently plan, execute, and deliver a complete ML project from start to finish

Top Choice of Leading Companies Worldwide

Industry leaders and professionals globally rely on this top-rated course to enhance their skills.

Course Description

Learn for Free

1.1 What does the course cover?

5 min

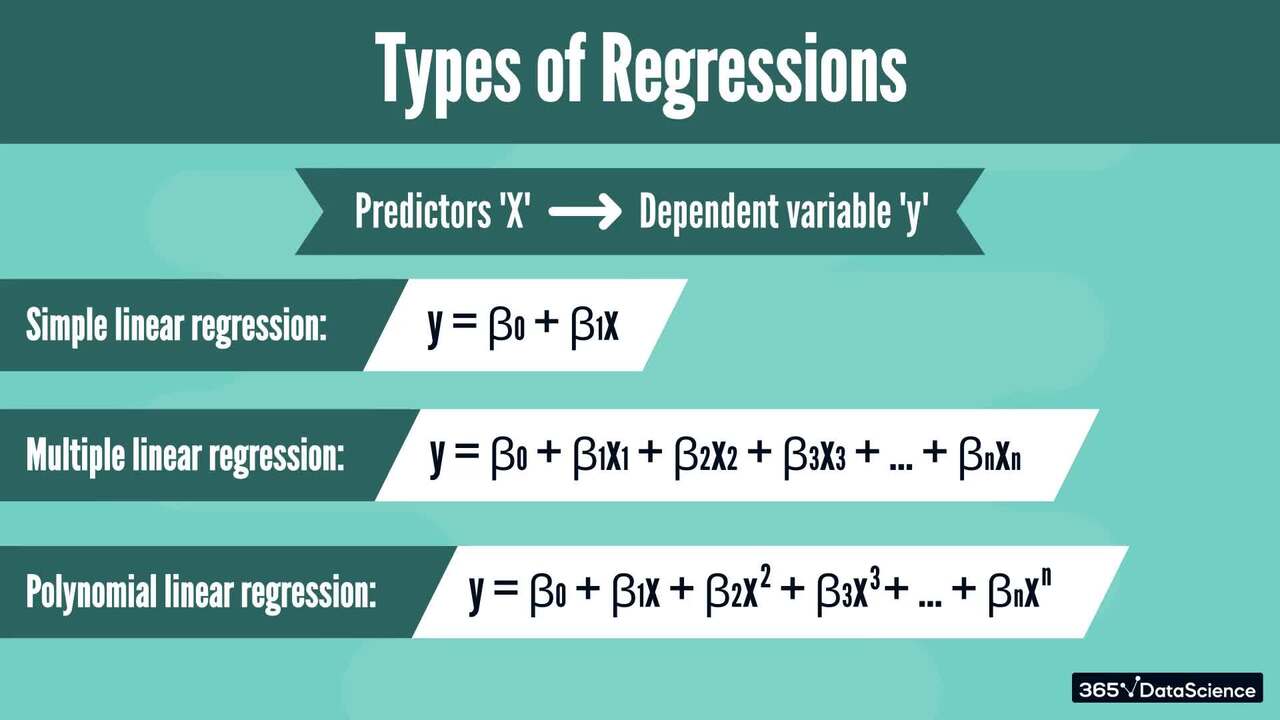

1.2 Regression Analysis Overview

3 min

1.3 Overfitting and Multicollinearity

3 min

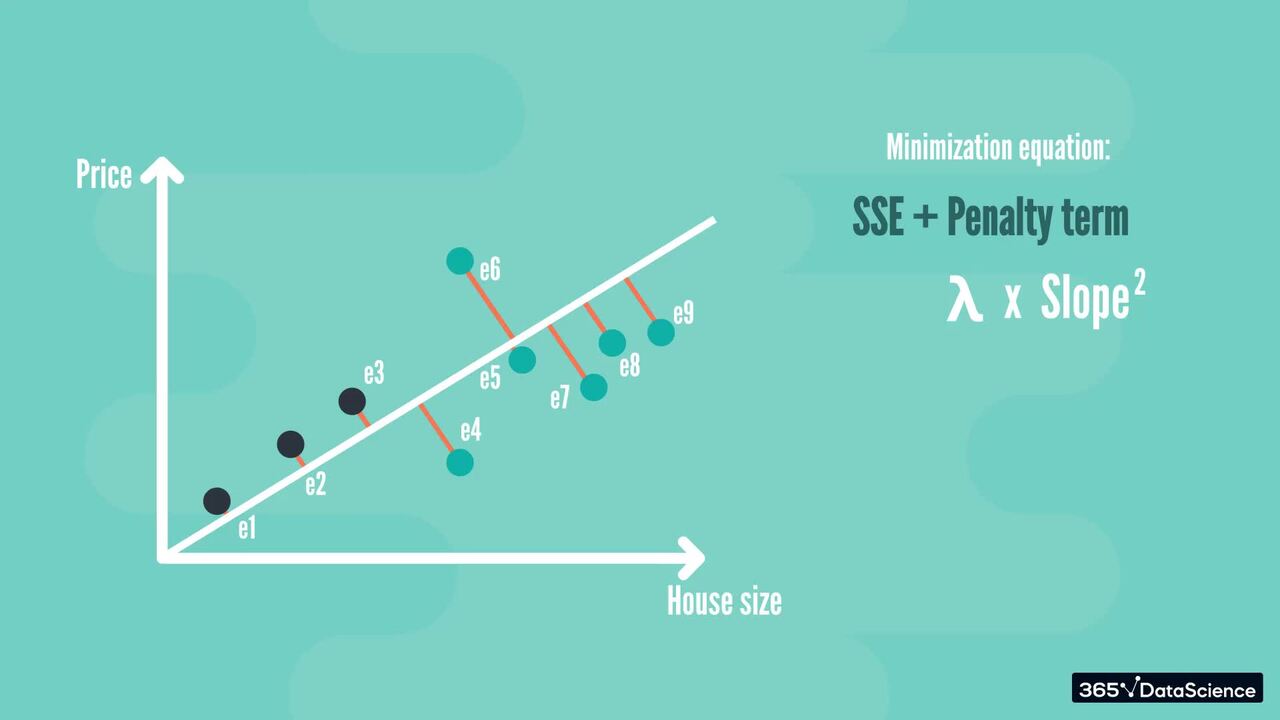

1.5 Introduction to Regularization

3 min

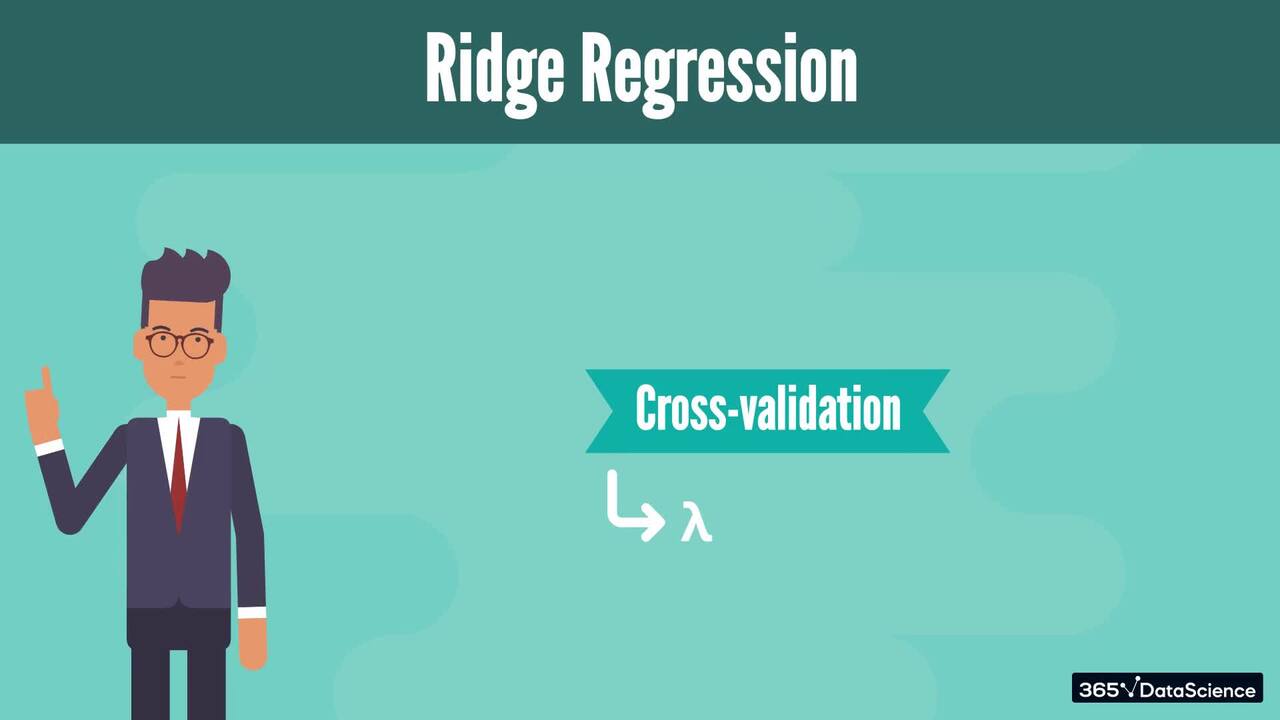

1.6 Ridge Regression Basics

6 min

1.8 Ridge Regression Mechanics

6 min
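Ridge regression adds an L2 penalty to least squares, minimizing $\|y - X\beta\|_2^2 + \lambda\|\beta\|_2^2$, which has the closed-form solution $\hat{\beta} = (X^\top X + \lambda I)^{-1} X^\top y$. The sketch below illustrates these mechanics under stated assumptions: it uses small synthetic data, centers $X$ and $y$ so that no intercept is fitted (and therefore none is penalized), and introduces a hypothetical helper `ridge_closed_form`. It shows the coefficient norm shrinking as $\lambda$ grows and checks the result against scikit-learn's `Ridge`, whose `alpha` parameter plays the role of $\lambda$.

```python
import numpy as np
from sklearn.linear_model import Ridge


def ridge_closed_form(X: np.ndarray, y: np.ndarray, lam: float) -> np.ndarray:
    """Hypothetical helper: solve (X^T X + lam * I) beta = X^T y for beta."""
    n_features = X.shape[1]
    # Solving the linear system is more stable than forming the inverse explicitly.
    A = X.T @ X + lam * np.eye(n_features)
    return np.linalg.solve(A, X.T @ y)


# Synthetic data (an assumption for illustration only).
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 5))
true_beta = np.array([3.0, -2.0, 0.0, 0.5, 1.0])
y = X @ true_beta + rng.normal(scale=0.5, size=100)

# Center X and y so no intercept is needed (and none is penalized).
X = X - X.mean(axis=0)
y = y - y.mean()

# lambda = 0 reduces to ordinary least squares; larger lambda shrinks beta.
for lam in [0.0, 10.0, 1000.0]:
    beta = ridge_closed_form(X, y, lam)
    print(f"lambda={lam:>7}: ||beta|| = {np.linalg.norm(beta):.3f}")

# scikit-learn's Ridge minimizes ||y - Xw||^2 + alpha * ||w||^2, so alpha = lambda.
lam = 10.0
sk_coef = Ridge(alpha=lam, fit_intercept=False).fit(X, y).coef_
print("matches scikit-learn:", np.allclose(sk_coef, ridge_closed_form(X, y, lam)))
```

Lasso replaces the squared L2 penalty with an L1 penalty, $\lambda\|\beta\|_1$, which generally has no closed-form solution and is fitted iteratively (scikit-learn uses coordinate descent).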

Curriculum

- 2. Setting Up the Environment (2 lessons, 4 min)
  If you're new to programming with Python, we recommend going through our Introduction to Jupyter course, which covers installing Anaconda and Jupyter and includes a tour of the Jupyter environment. In this section, we cover the packages required for applying ridge and lasso regression in Python.
  - Setting Up the Environment (1 min)
  - Importing the Relevant Packages (3 min)

- 3. Ridge and Lasso Regression – Practical Case (8 lessons, 37 min)
  In this section, we walk you through implementing ridge and lasso regression with scikit-learn in Python. We apply these methods to a real dataset to improve the performance of a regression model by reducing overfitting. We also demonstrate how regularization works and highlight the differences between ridge and lasso models.
  - The Hitters Dataset: Preprocessing and Preparation (6 min)
  - Exploratory Data Analysis (6 min)
  - Performing Linear Regression (8 min)
  - Cross-validation for Choosing a Tuning Parameter (3 min)
  - Performing Ridge Regression with Cross-validation (5 min)
  - Performing Lasso Regression with Cross-validation (3 min)
  - Comparing the Results (4 min)
  - Replacing the Missing Values in the DataFrame (2 min)
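The practical-case workflow (plain linear regression, then ridge and lasso with the tuning parameter chosen by cross-validation, then a comparison) can be sketched with scikit-learn's `LinearRegression`, `RidgeCV`, and `LassoCV`. This is a minimal illustration, not the course's notebook: synthetic data from `make_regression` stands in for the Hitters dataset, and the 50/50 train/test split, the log-spaced grid of candidate `alphas`, and 10-fold CV are all assumptions. Features are standardized first because both penalties depend on the scale of the coefficients. Note that scikit-learn's `Lasso` scales the squared-error term by `1/(2 * n_samples)` while `Ridge` does not, so the chosen `alpha` values are not directly comparable between the two models.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LassoCV, LinearRegression, RidgeCV
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for a real dataset: 19 features, only 5 of them informative.
X, y = make_regression(n_samples=120, n_features=19, n_informative=5,
                       noise=20.0, random_state=1)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.5, random_state=1)

# Candidate values for the tuning parameter (called alpha in scikit-learn).
alphas = np.logspace(-3, 3, 50)

models = {
    "linear": make_pipeline(StandardScaler(), LinearRegression()),
    # RidgeCV defaults to efficient leave-one-out CV; cv=10 requests 10-fold CV.
    "ridge": make_pipeline(StandardScaler(), RidgeCV(alphas=alphas, cv=10)),
    "lasso": make_pipeline(StandardScaler(), LassoCV(alphas=alphas, cv=10)),
}

# Fit each model on the training set and evaluate it on the held-out test set.
test_mse = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    test_mse[name] = mean_squared_error(y_test, model.predict(X_test))
    print(f"{name:>6}: test MSE = {test_mse[name]:.1f}")

# The last pipeline step is the fitted estimator.
ridge = models["ridge"][-1]
lasso = models["lasso"][-1]
print("chosen ridge alpha:", ridge.alpha_)
print("chosen lasso alpha:", lasso.alpha_)

# Lasso can set coefficients exactly to zero; ridge only shrinks them toward zero.
print("zero coefficients (ridge, lasso):",
      int(np.sum(ridge.coef_ == 0)), int(np.sum(lasso.coef_ == 0)))
```

The exact MSE values and chosen `alpha` values depend on the random seeds; the zero-coefficient counts illustrate the key practical difference between the two penalties.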

Course Requirements

- You need to complete an introduction to Python before taking this course

- Basic skills in statistics, probability, and linear algebra are required

- It is highly recommended to take the Machine Learning in Python course first

- You will need to install the Anaconda distribution, which includes Jupyter Notebook

Who Should Take This Course?

Level of difficulty: Intermediate

- Aspiring data scientists and ML engineers

Exams and Certification

A 365 Data Science Course Certificate is an excellent addition to your LinkedIn profile, demonstrating your expertise and your willingness to go the extra mile to accomplish your goals.

Meet Your Instructor

Ivan has a background in sound engineering, as well as information technologies and communications. He has worked in the media industry as a location sound engineer on high-profile TV shows and films, which has given him a unique perspective on technology, human relations, and innovation. He believes that data is rapidly growing in value and will soon become the world's most valuable commodity. Ivan is passionate about data analysis, data collection, Python programming, artificial intelligence, and sound information retrieval. His interests also extend to signal processing, sound design, acoustics, and music. He sees these fields as deeply interconnected and strives to balance science and art in his work.
