Emad S.


Accredited

Bringing real-world expertise from leading global companies

Bachelor's degree, Data Science

Master's degree, Computer Science

Curriculum

Free lessons

1.1 Introduction

2 min

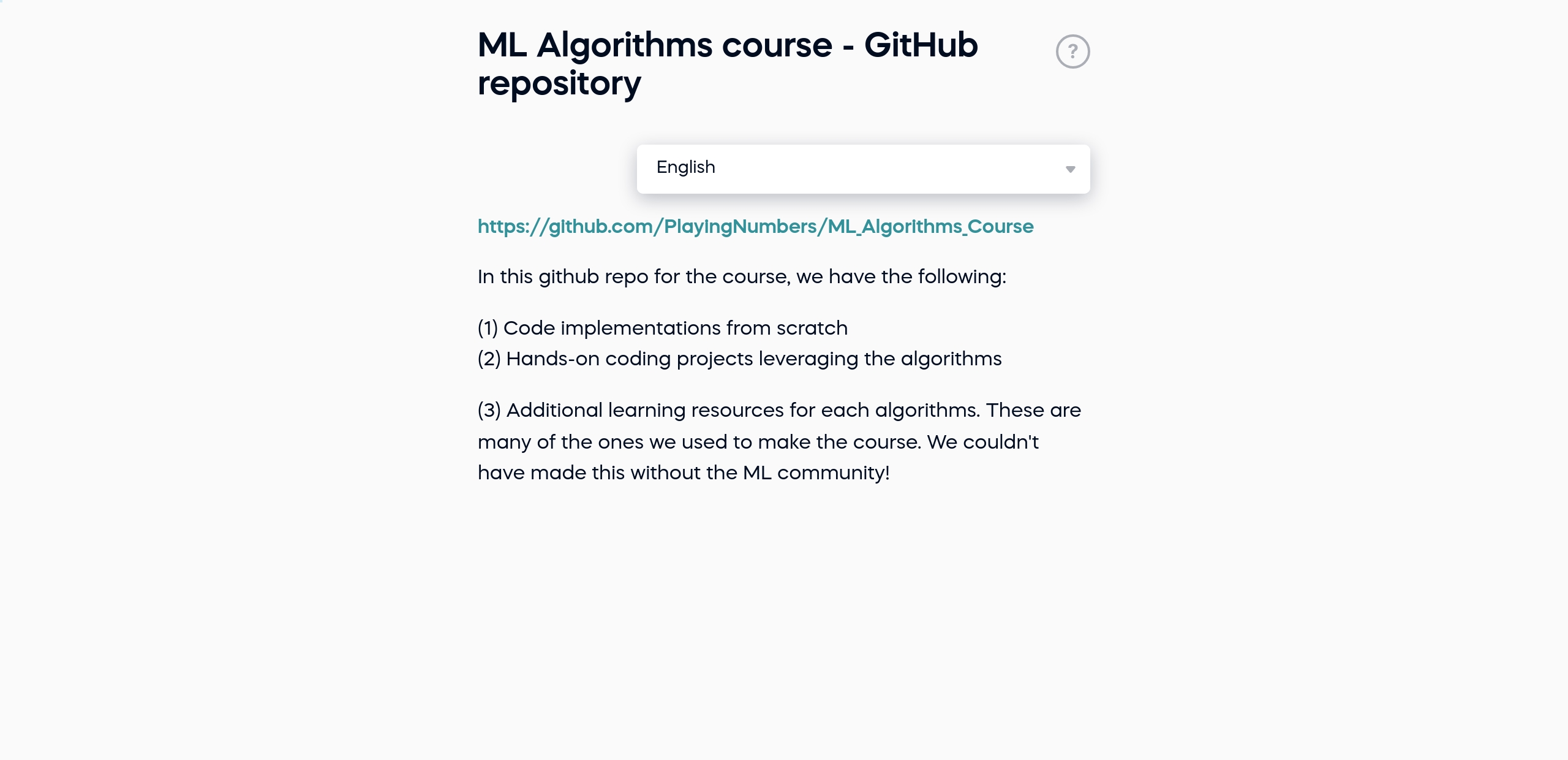

1.2 ML Algorithms course - GitHub repository

1 min

1.3 How to Use this Course

1 min

1.4 Types of ML Problems

1 min

1.6 Additional Resources

1 min

2.1 Linear Regression

1 min

94% of AI and data science graduates successfully change

#1 most reviewed

96% of our students recommend

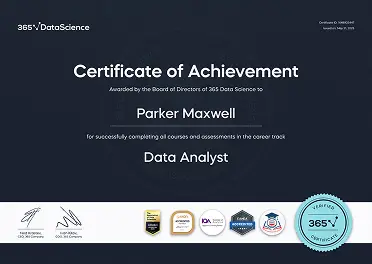

Accredited certificates

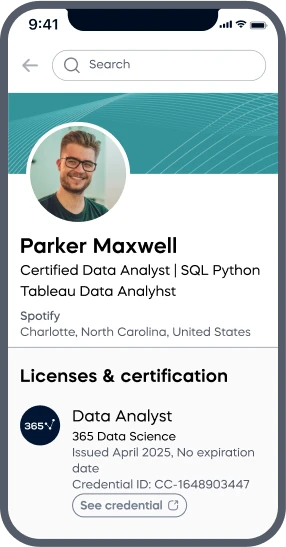

Craft a resume and LinkedIn profile you're proud of, featuring certificates recognized by leading global institutions.

Earn CPE-accredited credentials that showcase your dedication, growth, and essential skills: the qualities employers value most.

Certificates are included with the Self-study learning plan.

How it works