In our last two tutorials, we talked about request headers and how to scrape data locked behind a login. But how can you limit the rate of requests you send to a particular server?

Now, I can almost hear you asking why you need to reduce the number of requests when scraping in the first place.

Let me explain.

Why Limit Your Rate of Requests?

First, let’s consider the matter from an ethical point of view. Your program should be respectful to the site owner.

Remember that every time you load a web page, you're making a request to a server. When you're just a human with a browser, there's not much damage you can do.

With a Python script, however, you can execute thousands of requests a second, intentionally or unintentionally. The server then needs to process every request individually. This, combined with the normal user traffic, can result in overloading the server. And this overload can manifest in slowing down the website or even bringing it down altogether.

Such a situation usually degrades the experience of real users and can cost the website owner valuable customers.

Obviously, we don’t want that. In fact, if done intentionally, this is considered a crime: the so-called denial-of-service (DoS) attack, known as DDoS (Distributed Denial of Service) when the traffic comes from many machines at once. So we had better avoid it.

Given the potential damage this easy technique can do, servers have started employing automatic defense mechanisms against it.

One form of such protection may be to temporarily block a user from the service if the server detects a large amount of activity in a short period of time. This can be implemented at various levels, including IP address blocking at the firewall or rate-limiting rules in the server configuration, and may even involve the DNS provider preventing the resolution of the site's domain from the offender's IP address.

So, even if you are not sending huge numbers of requests, you may get blocked as a preventive measure. And that’s precisely why it is important to know how to limit your rate of requests.

How to Limit Your Rate of Requests When Scraping?

Let’s see how to do this in Python. It is actually very easy.

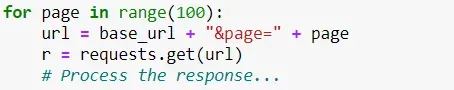

Suppose you have a setup with a “for loop” in which you make a request every iteration, like this:

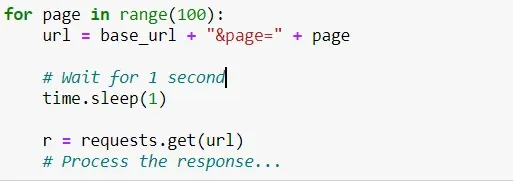
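In text form, a loop of that kind might look like the sketch below. It is only an illustration: the helper names `fetch` and `scrape_all` are hypothetical, and it uses the standard library's `urllib.request.urlopen` as a stand-in for whichever HTTP library you prefer.

```python
from urllib.request import urlopen


def fetch(url):
    """Download one page and return its raw bytes (hypothetical helper)."""
    with urlopen(url) as response:
        return response.read()


def scrape_all(urls, fetch_page=fetch):
    """Request every URL back to back, with no pause in between."""
    pages = []
    for url in urls:
        # One request per iteration; nothing slows the loop down.
        pages.append(fetch_page(url))
    return pages
```

Nothing in this loop waits between iterations, so the requests go out as fast as the server answers them.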

Depending on the other actions you take in the loop, it can iterate extremely fast. So, in order to slow it down, we will simply tell Python to wait a certain amount of time. To achieve this, we are going to use Python's built-in time module.


It has a function called sleep that pauses the program for the specified number of seconds. So, if we want at least 1 second between requests, we can call time.sleep(1) inside the for loop.
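Here is a minimal sketch of such a loop. The names `fetch` and `scrape_politely` and the `delay` parameter are assumptions made for illustration, and `urllib.request.urlopen` again stands in for the HTTP library of your choice. The only part that matters is the `time.sleep` call.

```python
import time
from urllib.request import urlopen


def fetch(url):
    """Download one page and return its raw bytes (hypothetical helper)."""
    with urlopen(url) as response:
        return response.read()


def scrape_politely(urls, delay=1.0, fetch_page=fetch):
    """Request each URL in turn, pausing `delay` seconds before every request."""
    pages = []
    for url in urls:
        # Suspend the program so consecutive requests are at least
        # `delay` seconds apart.
        time.sleep(delay)
        pages.append(fetch_page(url))
    return pages
```

Calling `scrape_politely(urls, delay=1.0)` spaces the requests at least one second apart. Raising `delay` makes the scraper gentler on the server, at the cost of a longer total run time.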

This way, Python always waits 1 second before making a request. That lowers the chance of getting blocked, so we can proceed with scraping the webpage.

So, this is one more web scraping roadblock you now know how to deal with.

I hope this tutorial will help you with your tasks and web scraping projects.
