Decision trees are a technique that facilitates problem-solving by guiding you toward the questions you need to ask in order to obtain the most valuable results. In machine learning, decision tree models are renowned for being easily interpretable and transparent, while also packing a serious analytical punch. Random forests build upon the efficiency and accuracy of this model by synthesizing the results of many decision trees via a majority voting system. In this article, we will explore what decision trees and random forests are in machine learning, how they relate to each other, how they work in practice, and what tasks we can solve with them.

Table of Contents:

- What Are Decision Trees?

- Decision Trees in Machine Learning

- What Are Decision Trees Used for?

- What Are Random Forests?

- How Do Random Forests Work?

- Machine Learning with Decision Trees and Random Forests: Next Steps

What Are Decision Trees?

Decision trees are common in many fields, not just machine learning. In fact, we often use this data structure in operations research and decision analysis to help identify the strategy that is most likely to reach a goal. The idea is that there are different questions a person might ask about a particular problem, with branching answers that lead to further questions and their respective answers, until a final decision is reached.

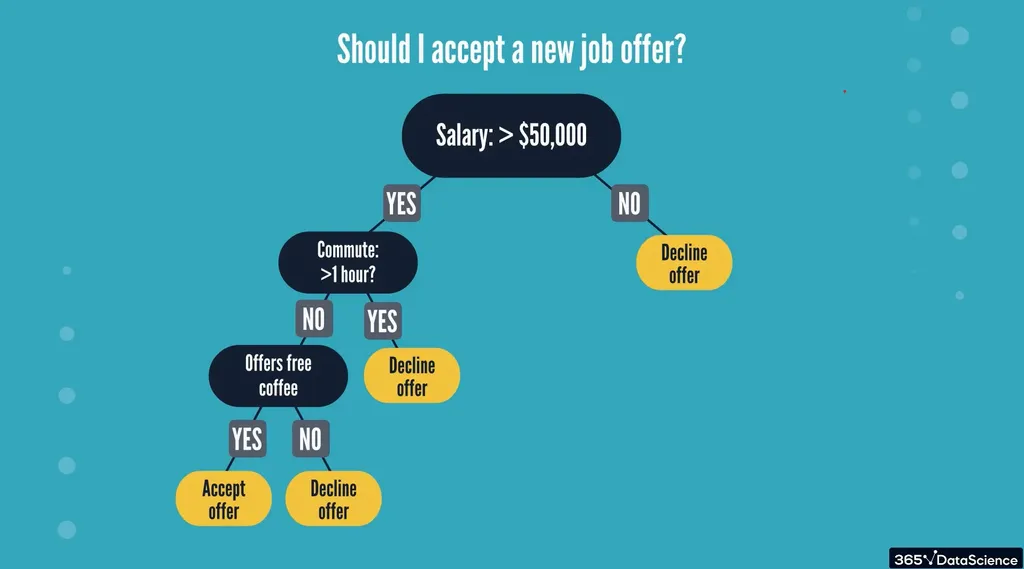

Let’s look at a very simple example of a decision tree.

You would like to make a decision tree in order to decide whether to accept a new job offer or not. In that case, there might be several important questions you may ask yourself. The first one might be about the paycheck – is the salary more than 50k a year? If the answer is no, you can decline the offer. And if the answer is yes, well… That’s a good sign! But there are still important questions left to ask, such as: Is the commute more than an hour? If that’s the case, you might want to decline the offer as the investment of your time is not worth it.

On the other hand, if the commute is less than an hour, you might wonder whether there are any additional perks, such as free coffee. If there are no such things, you might as well look for a different job opening. But if there are, then you can accept this offer.

Now, if we inspect our newly created tree, we’ll see that its nodes correspond to questions regarding the problem at hand, while the branches symbolize possible answers – most commonly, those would be a binary Yes or No. Finally, the leaf nodes are the actual outcome, or decision, of the tree. There, our tree ends. And that’s the general structure of all decision trees.
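To make that structure concrete, here is a minimal sketch of the job offer tree written as plain Python conditionals. The function name and the argument names are made up for illustration, and we assume that a commute of exactly one hour is still acceptable, which the example above leaves open.

```python
def decide_on_offer(salary, commute_hours, has_perks):
    """Walk the job-offer tree from the root to a leaf and return the decision."""
    # Root node: is the salary more than 50k a year?
    if salary <= 50_000:
        return "decline"
    # Second node: is the commute more than an hour?
    # (Assumption: exactly one hour still counts as acceptable.)
    if commute_hours > 1:
        return "decline"
    # Third node: are there additional perks, such as free coffee?
    if not has_perks:
        return "decline"
    # Leaf node: every question was answered favourably.
    return "accept"


print(decide_on_offer(salary=60_000, commute_hours=0.5, has_perks=True))  # accept
print(decide_on_offer(salary=60_000, commute_hours=1.5, has_perks=True))  # decline
```

Each `if` is a node, each outcome of the comparison is a branch, and each `return` is a leaf.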

Decision Trees in Machine Learning

Decision tree models can be used in machine learning to make predictions based on well-crafted questions about their input features. An ML algorithm generates and trains the given tree based on the provided dataset. This training consists of choosing the right questions about the right features and placing them in the right spot on the tree. The algorithms can work with both categorical and numerical data, and can produce both classification and regression trees. Here are the names of the more popular ones:

ID3, C4.5, CART, CHAID, and MARS

As you can imagine, these tree-generating algorithms have a complicated task. Let’s say you had two features in your dataset: the salary and the commute from our job offer example. Even with such a small number of features, hundreds of different decision trees are possible, because you have to consider what the right question to ask about each feature is. For example, is the salary greater than 50,000 dollars, or perhaps greater than 30,000? A third option would be to ask whether it falls in the range from 40,000 to 60,000 dollars.

You then have to consider what to do with the answer to this question – if the answer is no, do you decline the offer straight away or is the commute time also relevant?

Another factor to consider is the hierarchy. Trees are carefully structured, with the questions near the top being more important than those near the bottom of the tree. So, the algorithm should look into what feature it should test first, and so on.

Given all of this, it is only natural that there are a lot of possibilities – especially if we were to consider more than 2 features. An algorithm’s job is to choose the tree with the best outcome in relation to the dataset provided.
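One way an algorithm can compare candidate questions is to score how cleanly each one separates the labels. The sketch below uses Gini impurity, the criterion associated with CART, to search for the best salary threshold. It is a simplified illustration, not the exact procedure of any particular library, and the toy data and function names are made up.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 0.0 for a pure group, higher for a more mixed one."""
    total = len(labels)
    if total == 0:
        return 0.0
    counts = Counter(labels)
    return 1.0 - sum((n / total) ** 2 for n in counts.values())


def best_threshold(values, labels):
    """Try each question 'value > t?' and return (t, weighted impurity) of the best one."""
    best_t, best_score = None, float("inf")
    distinct = sorted(set(values))
    # Candidate thresholds: midpoints between consecutive distinct values.
    for low, high in zip(distinct, distinct[1:]):
        t = (low + high) / 2
        left = [lab for v, lab in zip(values, labels) if v <= t]
        right = [lab for v, lab in zip(values, labels) if v > t]
        # Impurity of the two groups, weighted by their sizes.
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best_score:
            best_t, best_score = t, score
    return best_t, best_score


# Made-up toy data: yearly salary of past offers and whether each was accepted.
salaries = [30_000, 40_000, 45_000, 55_000, 60_000, 70_000]
accepted = ["no", "no", "no", "yes", "yes", "yes"]

threshold, impurity = best_threshold(salaries, accepted)
print(threshold, impurity)  # 50000.0 0.0
```

On this toy data, the question "is the salary greater than 50,000?" splits the offers into two perfectly pure groups, so it wins. A full tree-building algorithm would repeat the same search for every feature and then recurse into each resulting group.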

What Are Decision Trees Used for?

To the naked eye, decision trees might look simple at first. In fact, they may look way too simple to be remotely useful. After all, why would we want such a model if we can create a complex neural network that will yield better results, right? The answer is that, in certain cases, the simplicity of decision trees is exactly what makes them useful. Let’s see how.

- Decision trees are a white-box model. This means that they are simple to understand and interpret, compared to black-box models. You have probably heard that a major problem of neural networks is that they do what they do, but it is very difficult for a researcher or a data scientist to understand the underlying logic. And if you don’t understand how your model comes to a certain decision, it is hard to improve on that model. After all, understanding the inner workings is the foundation of science. And decision trees give us just that. The whole point of a decision tree is to guide you through the process of obtaining the result. Using this method, data scientists can see everything neatly laid out in front of them in graphical form.

- Decision tree models can have an in-built feature selection. The tree itself is a hierarchical structure where the root is the most important node, and the significance of each subsequent node diminishes the farther down the tree we go. So, often the first few features to appear are the only ones we actually care about. Moreover, we can use this information for many purposes, not strictly related to the problem at hand.

- They require little to no preprocessing of the data. Trees don’t require scaling, standardizing, or normalizing your data as, generally speaking, they only compare feature values against thresholds rather than performing arithmetic on the numbers themselves. They do not train on minibatches, as most neural networks do, so there’s no need to shuffle the data and split it into batches either. (You would still hold out a test set to evaluate the model.) We save ourselves a lot of work, especially on a new dataset.

If you’d like to learn more about data preprocessing techniques, check out our dedicated tutorial.

- They are computationally efficient. For complex models like neural networks, training times can grow very quickly as the model and the dataset get larger. You blink twice and suddenly need one of the Google supercomputers to be able to train your network in a reasonable amount of time. Decision trees are a relatively simple model, so they tend to train quickly even on large datasets. But their biggest performance gain is in the prediction phase. At that point, the actual device that uses the tree only needs to follow a single path from the root to a leaf, as whole subtrees are cut away after every answer. For this reason, decision trees are a good option for limited resource platforms, such as mobile.
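The prediction-time saving from the last point can be sketched in a few lines. The nested dictionary below is a hypothetical stand-in for a trained tree, not the storage format of any real library, and the key names are made up. The sketch counts how many nodes a single prediction visits compared with the size of the whole tree.

```python
# A hypothetical trained tree, stored as nested dicts. Internal nodes ask
# "feature > threshold?"; leaves hold the final decision.
tree = {
    "feature": "salary", "threshold": 50_000,
    "no": {"leaf": "decline"},
    "yes": {
        "feature": "commute_hours", "threshold": 1,
        "yes": {"leaf": "decline"},
        "no": {
            "feature": "perks", "threshold": 0,
            "no": {"leaf": "decline"},
            "yes": {"leaf": "accept"},
        },
    },
}


def count_nodes(node):
    """Total number of nodes (questions and leaves) in the tree."""
    if "leaf" in node:
        return 1
    return 1 + count_nodes(node["yes"]) + count_nodes(node["no"])


def predict(node, sample):
    """Follow one root-to-leaf path; return the decision and the number of nodes visited."""
    visited = 1
    while "leaf" not in node:
        answer = "yes" if sample[node["feature"]] > node["threshold"] else "no"
        node = node[answer]  # the other subtree is never looked at
        visited += 1
    return node["leaf"], visited


decision, visited = predict(tree, {"salary": 40_000, "commute_hours": 0.5, "perks": 1})
print(decision, visited, "of", count_nodes(tree))  # decline 2 of 7
```

The number of nodes visited is bounded by the depth of the tree, not by its total size, which is why prediction stays cheap even for large trees.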

What Are Random Forests?

As the name suggests, a forest is a collection of many trees. And that’s true for random forests as well: they are a collection of many individual decision trees. In machine learning, this is referred to as ensemble modelling. In general, ensemble methods combine multiple models to obtain better predictive performance than any of the constituent models alone. So, in our case, the collection of decision trees as a whole typically performs better than any stand-alone decision tree. In short, random forests rely on combining the results of many different decision trees.

The random forest algorithm is one of the non-neural network models that often give high accuracy for both regression and classification tasks. And while decision trees do provide great interpretability, when it comes down to predictive performance, they usually lose against random forests. In fact, unless transparency of the model is a priority, many data scientists and analysts will choose random forests over single decision trees.

How Do Random Forests Work?

Through a process called bootstrapping, that is, repeatedly sampling rows from the original dataset with replacement, we can create many different datasets that share the general structure of the original one. And what can we do with similar, though slightly different datasets? Well, we can train ML algorithms with them and obtain similar, yet slightly different models.
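As a minimal sketch, a bootstrap sample is usually drawn by picking rows at random with replacement until the sample is as large as the original dataset. The helper name and the ten-row stand-in dataset below are made up for illustration.

```python
import random


def bootstrap_sample(rows, rng):
    """Draw len(rows) rows at random WITH replacement."""
    return [rng.choice(rows) for _ in rows]


rng = random.Random(0)     # fixed seed so the run is repeatable
dataset = list(range(10))  # stand-in for ten rows of a real dataset

samples = [bootstrap_sample(dataset, rng) for _ in range(3)]
for sample in samples:
    # Some rows typically show up more than once, others not at all.
    print(sorted(sample))
```

Because the draws are made with replacement, each sample is a slightly different view of the same data, which is exactly what we want for training slightly different trees.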

Since we are talking about random forests, those models would be, of course, decision trees. And so, we have, let’s say, a hundred decision trees trained on a hundred bootstrap samples. What do we do with them?

Well, we aggregate them. In other words, we combine all the different outputs into one, usually through a majority voting system. Therefore, the most common output among the different decision trees is the one we consider the final output.

So, for example, if 30 models say that the input corresponds to a rocket, 26 say it is a plane, and 44 determine that it corresponds to a car, the final output would be a car.
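The vote from this example can be reproduced with a short sketch. The function name is made up, and the list of votes simply stands in for the outputs of a hundred trained trees.

```python
from collections import Counter


def majority_vote(predictions):
    """Return the most common prediction among the trees."""
    return Counter(predictions).most_common(1)[0][0]


# Stand-in for the outputs of 100 trees: 30 rockets, 26 planes, 44 cars.
votes = ["rocket"] * 30 + ["plane"] * 26 + ["car"] * 44
print(majority_vote(votes))  # car
```

For regression tasks, the usual counterpart of the vote is to average the trees’ numeric outputs.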

That ensemble is called bagged decision trees, with “bagged” stemming from bootstrap aggregating. The advantages of having many different trees mainly come in the form of reducing overfitting. Since the different trees see different data, it is harder for the ensemble as a whole to memorize every individual data point, which is the essence of overfitting. However, there’s something more this collection needs to become a random forest.

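Random forests employ one additional crucial detail: they don’t consider all the features at once. Just as every decision tree is trained with a different dataset, it is also restricted to a random subset of the input features. In the most common formulation, a fresh random subset is drawn at every split rather than once per tree. This makes the trees less similar to one another, so their errors are less likely to coincide.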
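Whether the subset is drawn once per tree or again at every split, the draw itself looks the same. The sketch below picks roughly the square root of the number of features, a common heuristic for classification. The helper name is made up, and the feature names beyond salary, commute, and perks are invented for illustration.

```python
import math
import random


def random_feature_subset(features, rng):
    """Pick roughly sqrt(len(features)) features at random, without replacement."""
    k = max(1, round(math.sqrt(len(features))))
    return rng.sample(features, k)


rng = random.Random(42)  # fixed seed so the run is repeatable
# Hypothetical feature list; only the first three come from the job offer example.
features = ["salary", "commute_hours", "perks", "team_size", "vacation_days",
            "remote_days", "bonus", "office_distance", "contract_length"]

for tree_index in range(3):
    # Each draw would restrict which questions a tree (or a split) may ask.
    print(tree_index, random_feature_subset(features, rng))
```

With nine features, each draw returns three of them, so no single strong feature can dominate every tree in the forest.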

Machine Learning with Decision Trees and Random Forests: Next Steps

Now that we’ve covered the fundamentals of decision trees and random forests, you can dive deeper into the topic by exploring the finer differences in their implementation. In order to fully grasp how these algorithms work, the logical next steps would be to understand the mechanisms of pruning and bootstrapping. The latter in particular will help you understand the relationship between single decision trees and complex random forests. With these foundational concepts out of the way, you can then jump into building such predictive models in Python.

If you’re fascinated by the elegance and efficiency of these two models, check out our course on Machine Learning with Decision Trees and Random Forests. With these must-have algorithms under your belt, you won’t just be able to build powerful predictors but also understand, communicate, and visualize the results of your machine learning workflow.