Data science: a universally recognizable term that is in desperate need of dissection.

Data Science is a term that escapes any single complete definition, which makes it difficult to use, especially if the goal is to use it correctly. Most articles and publications use the term freely, with the assumption that it is universally understood. However, data science, including its methods, goals, and applications, evolves with time and technology. Data science 25 years ago referred to gathering and cleaning datasets and then applying statistical methods to that data.

In 2018, data science has grown into a field that encompasses data analysis, predictive analytics, data mining, business intelligence, machine learning, and so much more.

In fact, because no one definition fits the bill seamlessly, it is up to those who do data science to define it.

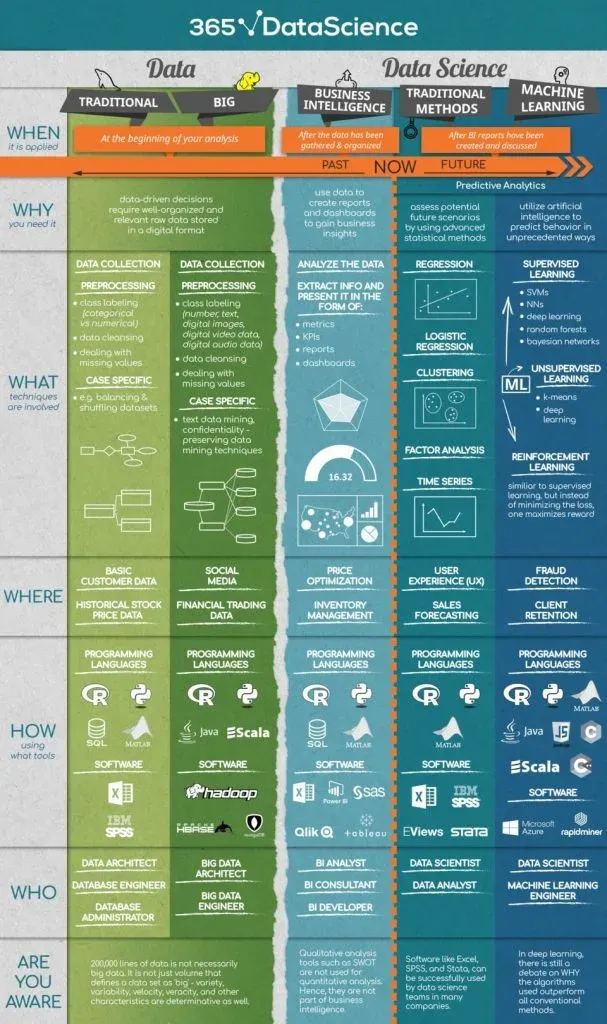

Recognizing the need for a clear-cut explanation of data science, the 365 Data Science Team designed the What-Where-Who infographic. We define the key processes in data science and dissect the field. Here is our interpretation of data science.

Of course, this might look like a lot of overwhelming information, but it really isn’t. In this article, we will take data science apart and we will build it back up to a coherent and manageable concept. Bear with us!

Data science, 'explained in under a minute', looks like this.

You have data. To use this data to inform your decision-making, it needs to be relevant, well-organized, and preferably digital. Once your data is coherent, you proceed with analyzing it, creating dashboards and reports to understand your business’s performance better. Then you set your sights on the future and start generating predictive analytics. With predictive analytics, you assess potential future scenarios and predict consumer behavior in creative ways. Here's our Research into 1,001 Data Scientists in which you can find interesting information on the topic.

Author's note: You can learn more about how data science and business interact in our article 5 Business Basics for Data Scientists.

But let’s start at the beginning.

The Data in Data Science

Before anything else, there is always data. Data is the foundation of data science; it is the material on which all the analyses are based. In the context of data science, there are two types of data: traditional and big data.

Traditional data is data that is structured and stored in databases that analysts can manage from one computer; it is in table format, containing numeric or text values. Actually, the term “traditional” is something we are introducing for clarity. It helps emphasize the distinction between big data and other types of data.

Big data, on the other hand, is… bigger than traditional data, and not in the trivial sense. From variety (numbers, text, but also images, audio, mobile data, etc.), to velocity (retrieved and computed in real-time), to volume (measured in tera-, peta-, exa-bytes), big data is usually distributed across a network of computers.

That said, let’s define the What, the Where, and the Who that characterize each type of data in data science. Our Data Literacy course offers a deep understanding of data and its intricacies for anyone who wants to excel in working with various types and volumes of information.

What do you do to Data in Data Science?

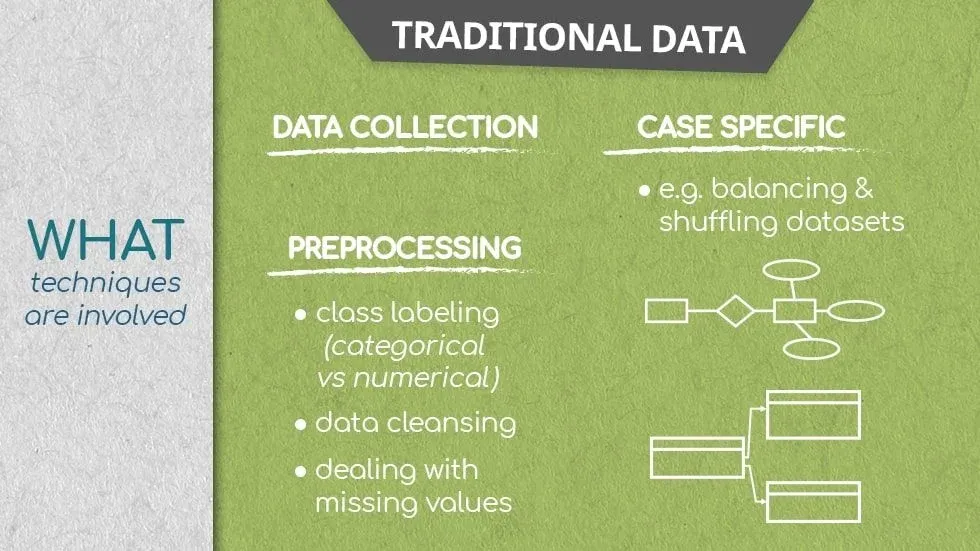

Traditional Data in Data Science

Traditional data is stored in relational database management systems.

That said, before being ready for processing, all data goes through pre-processing. This is a necessary group of operations that convert raw data into a format that is more understandable and hence more useful for further processing. Here are a few processes you must be familiar with.

Collect raw data and store it on a server

This is untouched data that scientists cannot analyze straight away. This data can come from surveys, or through the more popular automatic data collection paradigm, like cookies on a website.

Class-label the observations

This consists of arranging data by category or labeling each data point with the correct data type, for example numerical or categorical.
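As a rough illustration of labeling columns by data type, here is a minimal Python sketch. The column names, the sample values, and the rule "numerical if every present value parses as a number" are our own assumptions for the example, not a standard procedure.

```python
def label_column(values):
    """Label a column 'numerical' if every non-missing value parses as a
    number, otherwise 'categorical'. Empty columns are labeled 'unknown'."""
    present = [v for v in values if v not in (None, "")]
    if not present:
        return "unknown"
    try:
        for v in present:
            float(v)
    except (TypeError, ValueError):
        return "categorical"
    return "numerical"


# Hypothetical raw survey export: column name -> raw string values.
raw_columns = {
    "age": ["34", "51", "", "27"],
    "country": ["US", "DE", "US", "FR"],
}

labels = {name: label_column(values) for name, values in raw_columns.items()}
print(labels)
```

Real datasets usually need more care (dates, IDs that look like numbers, and so on), so treat this only as a starting point.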

Data cleansing/data scrubbing

It's about dealing with inconsistent data, like misspelled categories and missing values.
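To make this concrete, the sketch below fixes misspelled categories and fills missing values with the most frequent category. The misspelling table and the choice of mode imputation are illustrative assumptions; other strategies may suit your data better.

```python
from collections import Counter

# Hypothetical lookup table: known spellings (lower-cased) -> canonical category.
CANONICAL = {
    "united states": "United States",
    "untied states": "United States",
    "germany": "Germany",
    "germny": "Germany",
}


def clean_category(value):
    """Trim whitespace, map known misspellings, and turn blanks into None."""
    if value is None:
        return None
    stripped = value.strip()
    if not stripped:
        return None
    return CANONICAL.get(stripped.lower(), stripped)


def fill_missing_with_mode(values):
    """Replace None with the most frequent non-missing value."""
    present = [v for v in values if v is not None]
    if not present:
        return list(values)
    mode = Counter(present).most_common(1)[0][0]
    return [mode if v is None else v for v in values]


raw = ["United States", "untied states", " Germany", None, "germny", "United States", ""]
cleaned = fill_missing_with_mode([clean_category(v) for v in raw])
print(cleaned)
```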

Data balancing

If the data is unbalanced, meaning the categories contain unequal numbers of observations and are therefore not representative, data balancing methods fix the issue, for example by extracting an equal number of observations for each category and preparing that subset for processing.
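One way to extract an equal number of observations per category is random undersampling. The following is a small sketch under that assumption; the `churned` field and the data are made up, and undersampling discards data, so it is only one of several balancing options.

```python
import random
from collections import Counter, defaultdict


def balance_by_undersampling(rows, label_key, seed=0):
    """Keep the same number of rows per category by randomly sampling
    each category down to the size of the smallest one."""
    if not rows:
        return []
    rng = random.Random(seed)  # fixed seed so the example is repeatable
    groups = defaultdict(list)
    for row in rows:
        groups[row[label_key]].append(row)
    smallest = min(len(group) for group in groups.values())
    balanced = []
    for group in groups.values():
        balanced.extend(rng.sample(group, smallest))
    return balanced


# Hypothetical customer data: 8 customers stayed, 2 churned.
rows = [{"id": i, "churned": "no"} for i in range(8)]
rows += [{"id": i, "churned": "yes"} for i in range(8, 10)]

balanced = balance_by_undersampling(rows, "churned")
print(Counter(row["churned"] for row in balanced))
```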

Data shuffling

This means re-arranging data points to eliminate unwanted patterns and improve predictive performance later on. It is applied when, for example, the first 100 observations in the data come from the first 100 people who used a website; such data isn’t randomised, and patterns due to sampling emerge.
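In Python, shuffling can be sketched with the standard `random` module. The visitor IDs below are hypothetical, and the fixed seed is only there to make the example reproducible.

```python
import random


def shuffled_copy(rows, seed=42):
    """Return a shuffled copy of the rows, leaving the original order untouched."""
    rng = random.Random(seed)
    result = list(rows)
    rng.shuffle(result)
    return result


# Hypothetical visitor IDs stored in order of arrival.
visitors = list(range(1, 201))
shuffled = shuffled_copy(visitors)

print(shuffled[:10])  # no longer the first ten people to arrive
```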

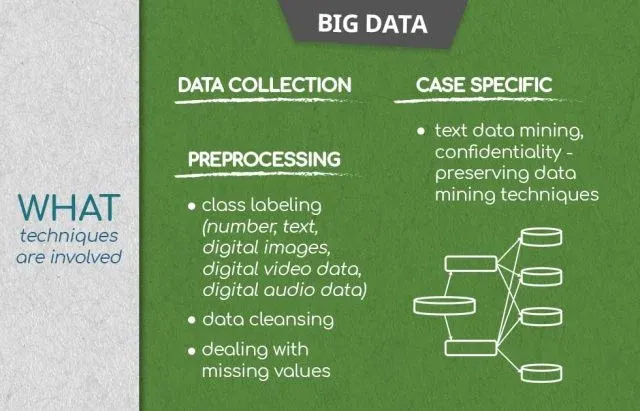

Big Data in Data Science

When it comes to big data and data science, there is some overlap of the approaches used in traditional data handling, but there are also a lot of differences.

First of all, big data is stored on many servers and is far more complex.

In order to do data science with big data, pre-processing is even more crucial, as the complexity of the data is a lot larger. You will notice that conceptually, some of the steps are similar to traditional data pre-processing, but that’s inherent to working with data.

Collect and Class-Label the Data

Keep in mind that big data is extremely varied, therefore instead of ‘numerical’ vs ‘categorical’, the labels are ‘text’, ‘digital image data’, ‘digital video data’, ‘digital audio data’, and so on.

Data Cleansing

The methods here are massively varied, too; for example, you can verify that a digital image observation is ready for processing; or a digital video, or…

Data Masking

When collecting data on a mass scale, this aims to ensure that any confidential information in the data remains private, without hindering the analysis and extraction of insight. The process involves concealing the original data with random and false data, allowing the scientist to conduct their analyses without compromising private details. Naturally, the scientist can mask traditional data too, and sometimes does, but with big data the information can be much more sensitive, which makes masking a lot more urgent.
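As a simplified sketch of the idea, the function below replaces each customer name with a random token while keeping repeated names consistent, so per-customer analysis still works. The field names, the `user_` prefix, and the records are assumptions for illustration; production masking has to cover many more cases.

```python
import secrets


def mask_column(rows, column, prefix="user_"):
    """Replace every distinct value in `column` with a random token.
    The same original value always maps to the same token within one call."""
    pseudonyms = {}
    used_tokens = set()
    masked_rows = []
    for row in rows:
        original = row[column]
        if original not in pseudonyms:
            token = prefix + secrets.token_hex(4)
            while token in used_tokens:  # regenerate on the rare collision
                token = prefix + secrets.token_hex(4)
            used_tokens.add(token)
            pseudonyms[original] = token
        masked_rows.append({**row, column: pseudonyms[original]})
    return masked_rows


# Hypothetical transaction records.
transactions = [
    {"customer": "Alice Smith", "amount": 120.0},
    {"customer": "Bob Jones", "amount": 35.5},
    {"customer": "Alice Smith", "amount": 60.0},
]

masked = mask_column(transactions, "customer")
print(masked)
```

The amounts stay intact, so totals per (masked) customer can still be computed without exposing who the customer is.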

Where does data come from?

Traditional data may come from basic customer records or historical stock price information.

Big data, however, is all around us. A consistently growing number of companies and industries use and generate big data. Consider online communities, for example, Facebook, Google, and LinkedIn; or financial trading data. Temperature measuring grids in various geographical locations also amount to big data, as well as machine data from sensors in industrial equipment. And, of course, wearable tech.

Who handles the data?

The data specialists who deal with raw data and pre-processing, and with creating and maintaining databases, go by different names. But although their titles sound similar, there are palpable differences in the roles they occupy. Consider the following.

Data Architects and Data Engineers (and Big Data Architects, and Big Data Engineers, respectively) are crucial in the data science market.

The former create the database from scratch; they design the way data will be retrieved, processed, and consumed. The data engineer then uses the data architect’s work as a stepping stone and pre-processes the available data. Data engineers are the people who ensure the data is clean, organized, and ready for the analysts to take over.

The Database Administrator, on the other hand, is the person who controls the flow of data into and from the database. Of course, with Big Data almost the entirety of this process is automated, so there is no real need for a human administrator. The Database Administrator deals mostly with traditional data.

That said, once data processing is done, and the databases are clean and organised, the real data science begins.

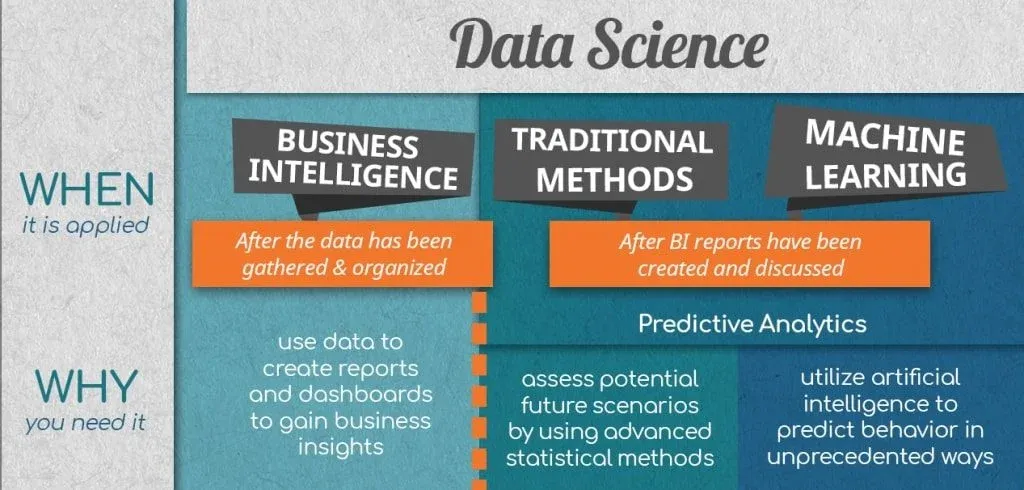

Data Science

There are two ways of looking at data: with the intent to explain behaviour that has already occurred and for which you have gathered data, or with the intent to use the data you already have to predict future behaviour.

Data Science explaining the past

Business Intelligence

Before data science jumps into predictive analytics, it must look at the patterns of behaviour the past provides, analyse them to draw insight and inform the path for forecasting. Business intelligence focuses precisely on this: providing data-driven answers to questions like:

How many units were sold? In which region were the most goods sold? Which type of goods sold where? How did the email marketing perform last quarter in terms of click-through rates and revenue generated? How does that compare to the performance in the same quarter of last year?

Although Business Intelligence does not have “data science” in its title, it is part of data science, and not in any trivial sense.

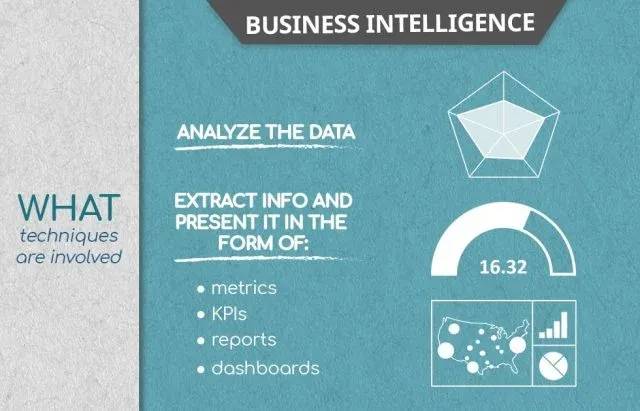

What does Business Intelligence do?

Of course, Business Intelligence Analysts can apply Data Science to measure business performance. But in order for the Business Intelligence Analyst to achieve that, they must employ specific data handling techniques.

The starting point of all data science is data. Once the relevant data is in the hands of the BI Analyst (monthly revenue, customer, sales volume, etc.), they must quantify the observations, calculate KPIs and examine measures to extract insights from their data.
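To show what calculating such measures can look like, here is a small sketch that answers two typical BI questions: which region sold the most units, and how revenue compares with the same quarter of the previous year. All figures and field layouts are invented for the example.

```python
from collections import defaultdict

# Hypothetical sales records: (region, quarter, units sold, revenue).
sales = [
    ("North", "2017-Q4", 120, 2400.0),
    ("South", "2017-Q4", 80, 1600.0),
    ("North", "2018-Q4", 150, 3300.0),
    ("South", "2018-Q4", 70, 1540.0),
]


def totals_by(records, key_index, value_index):
    """Sum the value column grouped by the key column."""
    totals = defaultdict(float)
    for record in records:
        totals[record[key_index]] += record[value_index]
    return dict(totals)


units_by_region = totals_by(sales, 0, 2)
revenue_by_quarter = totals_by(sales, 1, 3)

# KPI 1: region with the most units sold.
top_region = max(units_by_region, key=units_by_region.get)

# KPI 2: year-over-year revenue growth for the fourth quarter.
yoy_growth = revenue_by_quarter["2018-Q4"] / revenue_by_quarter["2017-Q4"] - 1

print(top_region, f"{yoy_growth:.1%}")
```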

Data Science is about telling a story

Apart from handling strictly numerical information, data science, and specifically business intelligence, is about visualizing the findings, and creating easily digestible images supported only by the most relevant numbers. After all, all levels of management should be able to understand the insights from the data and inform their decision-making.

Business intelligence analysts create dashboards and reports, accompanied by graphs, diagrams, maps, and other comparable visualisations to present the findings relevant to the current business objectives.

To find out more about data visualization, check out this article on chart types or go to our tutorials How to Visualize Numerical Data with Histograms and Visualizing Data with Bar, Pie and Pareto Charts.

Where is business intelligence used?

Price optimisation and data science

Notably, analysts apply data science to inform things like price optimisation techniques. They extract the relevant information in real time, compare it with historical data, and take action accordingly. Consider hotel management behaviour: management raises room prices during periods when many people want to visit the hotel and reduces them when the goal is to attract visitors in periods of low demand.

Inventory management and data science

Data science, and business intelligence, are invaluable for handling over and undersupply. In-depth analyses of past sales transactions identify seasonality patterns and the times of the year with the highest sales, which results in the implementation of effective inventory management techniques that meet demands at minimum cost.

Who does the BI branch of data science?

A BI analyst focuses primarily on analyses and reporting of historical data.

The BI consultant is often just an ‘external BI analyst’. Many companies outsource their data science departments as they don’t need or want to maintain one. BI consultants would be BI analysts had they been employed; however, their job is more varied as they hop on and off different projects. The dynamic nature of their role provides the BI consultant with a different perspective, and whereas the BI Analyst has highly specialized knowledge (i.e., depth), the BI consultant contributes to the breadth of data science.

The BI developer is the person who handles more advanced programming tools, such as Python and SQL, to create analyses specifically designed for the company. It is the third most frequently encountered job position in the BI team.

Data Science predicting the future

Predictive analytics in data science rests on the shoulders of explanatory data analysis, which is precisely what we have been discussing up to this point. Once the BI reports and dashboards have been prepared and insights have been extracted from them, this information becomes the basis for predicting future values. The accuracy of these predictions depends on the methods used.

Recall the distinction between traditional data and big data in data science.

We can make a similar distinction regarding predictive analytics and their methods: traditional data science methods vs. Machine Learning. One deals primarily with traditional data, and the other – with big data.

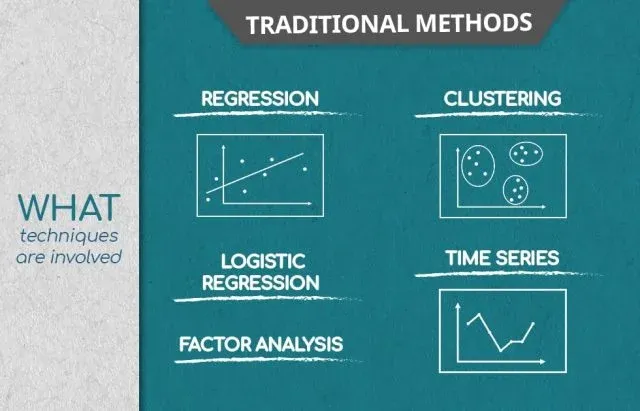

Traditional forecasting methods in Data Science: What are they?

Traditional forecasting methods comprise the classical statistical methods for forecasting – linear regression analysis, logistic regression analysis, clustering, factor analysis, and time series. The output of each of these feeds into the more sophisticated machine learning analytics, but let’s first review them individually.

A quick side-note. Some in the data science industry refer to several of these methods as machine learning too, but in this article machine learning refers to newer, smarter, better methods, such as deep learning.

Linear regression

In data science, the linear regression model is used for quantifying the relationships among the different variables included in the analysis, such as the relationship between house prices and the size of the house, the neighborhood, and the year built. The model calculates coefficients with which you can predict the price of a new house, if you have the relevant information available.
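As a minimal sketch, here is a simple linear regression with a single predictor (house size), fitted by ordinary least squares. The data is made up and deliberately lies on a straight line; a real analysis would include several predictors, as described above.

```python
from statistics import mean


def fit_simple_linear_regression(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope


# Made-up data: house size in square metres and price in thousands.
sizes = [50, 80, 100, 120, 150]
prices = [150, 210, 250, 290, 350]

intercept, slope = fit_simple_linear_regression(sizes, prices)


def predict_price(size):
    """Predict the price of a new house from the fitted coefficients."""
    return intercept + slope * size


print(intercept, slope, predict_price(110))
```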

If you're curious about the geometrical representation of the simple linear regression model, check out the linked tutorial.

Logistic regression

Since it's not possible to express all relationships between variables as linear, data science makes use of methods like the logistic regression to create non-linear models. Logistic regression operates with 0s and 1s. Companies apply logistic regression algorithms to filter job candidates during their screening process. If the algorithm estimates that the probability that a prospective candidate will perform well in the company within a year is above 50%, it would predict 1, or a successful application. Otherwise, it will predict 0.
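The candidate-screening example can be sketched as follows: a one-feature logistic regression trained with plain gradient descent, predicting 1 when the estimated probability is above 50%. The screening scores, labels, learning rate, and number of epochs are all assumptions chosen for illustration.

```python
import math


def sigmoid(z):
    """Map any real number to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, ys, learning_rate=0.1, epochs=5000):
    """Fit p(y=1) = sigmoid(w * x + b) by batch gradient descent on log-loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            error = sigmoid(w * x + b) - y
            grad_w += error * x
            grad_b += error
        w -= learning_rate * grad_w / n
        b -= learning_rate * grad_b / n
    return w, b


def predict(x, w, b, threshold=0.5):
    """Return (0 or 1, estimated probability)."""
    probability = sigmoid(w * x + b)
    return (1 if probability > threshold else 0), probability


# Made-up data: screening test score and whether the hire performed well (1) or not (0).
scores = [1, 2, 3, 4, 6, 7, 8, 9]
performed_well = [0, 0, 0, 0, 1, 1, 1, 1]

w, b = fit_logistic(scores, performed_well)
print(predict(2, w, b), predict(8, w, b))
```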

Cluster analysis

This exploratory data science technique is applied when the observations in the data form groups according to some criteria. Cluster analysis takes into account that some observations exhibit similarities, and facilitates the discovery of new significant predictors, ones that were not part of the original conceptualisation of the data.
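One common clustering algorithm is k-means, which we use here purely as an example; the article does not prescribe a specific method. The sketch below groups two-dimensional points into two clusters. The points and the choice of k = 2 are invented.

```python
import random


def squared_distance(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans(points, k, iterations=20, seed=0):
    """Basic k-means: assign each point to its nearest centroid, then move
    each centroid to the mean of its members, and repeat."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)
    assignments = [0] * len(points)
    for _ in range(iterations):
        assignments = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = tuple(sum(coord) / len(members) for coord in zip(*members))
    return assignments, centroids


# Made-up observations forming two visibly separate groups.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5), (8.0, 8.0), (9.0, 9.5), (8.5, 9.0)]
assignments, centroids = kmeans(points, k=2)
print(assignments)
```

The cluster numbers themselves are arbitrary; what matters is which observations end up sharing a cluster.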

Factor analysis

If clustering is about grouping observations together, factor analysis is about grouping features together. Data science resorts to using factor analysis to reduce the dimensionality of a problem. For example, if in a 100-item questionnaire every 10 questions pertain to a single general attitude, factor analysis will identify these 10 factors, which can then be used for a regression that will deliver a more interpretable prediction. A lot of the techniques in data science are integrated like this.

Time series analysis

Time series is a popular method for following the development of specific values over time. Experts in economics and finance use it because their subject matter is stock prices and sales volume – variables that are typically plotted against time.
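A very simple time series technique is the moving average, which forecasts the next value as the mean of the most recent ones. We use it here only as an accessible example; the monthly sales figures and the window of three periods are assumptions.

```python
def moving_average_forecast(series, window=3):
    """Forecast the next value as the mean of the last `window` observations."""
    if len(series) < window:
        raise ValueError("series is shorter than the window")
    return sum(series[-window:]) / window


# Made-up monthly sales volumes, oldest first.
monthly_sales = [100, 110, 120, 130, 125, 135]

forecast = moving_average_forecast(monthly_sales)
print(forecast)  # mean of 130, 125 and 135
```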

Where does data science find application for traditional forecasting methods?

The application of the corresponding techniques is extremely broad; data science is finding a way into an increasingly large number of industries. That said, two prominent fields deserve to be part of the discussion.

User experience (UX) and data science

When companies launch a new product, they often design surveys that measure the attitudes of customers towards that product. Analysing the results after the BI team has generated their dashboards includes grouping the observations into segments (e.g. regions), and then analysing each segment separately to extract meaningful predictive coefficients. The results of these operations often corroborate the conclusion that the product needs slight but significantly different adjustments in each segment in order to maximise customer satisfaction.

Forecasting sales volume

This is the type of analysis where time series comes into play. Sales data has been gathered until a certain date, and the data scientist wants to know what is likely to happen in the next sales period, or a year ahead. They apply mathematical and statistical models and run multiple simulations; these simulations provide the analyst with future scenarios. This is at the core of data science, because based on these scenarios, the company can make better predictions and implement adequate strategies.
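To give a feel for what running simulations can mean, the sketch below generates scenarios for the next sales period by randomly re-using growth rates observed in the past (a naive bootstrap). This is one simple approach among many, chosen by us for illustration; the sales figures, scenario count, and percentile choices are assumptions.

```python
import random


def simulate_next_period(sales, n_scenarios=1000, seed=0):
    """Generate sorted scenarios for the next period by applying randomly
    drawn historical period-over-period growth rates to the last value."""
    rng = random.Random(seed)
    growth_rates = [later / earlier for earlier, later in zip(sales, sales[1:])]
    last_value = sales[-1]
    return sorted(last_value * rng.choice(growth_rates) for _ in range(n_scenarios))


# Made-up monthly sales volumes, oldest first.
monthly_sales = [100, 110, 120, 130, 125, 135]

scenarios = simulate_next_period(monthly_sales)

# Pessimistic, typical, and optimistic scenarios (5th, 50th, 95th percentiles).
low, median, high = scenarios[50], scenarios[500], scenarios[950]
print(low, median, high)
```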

Who uses traditional forecasting methods?

The data scientist. But bear in mind that this title also applies to the person who employs machine learning techniques for analytics, too. A lot of the work spills from one methodology to the other.

The data analyst, on the other hand, is the person who prepares advanced types of analyses that explain the patterns in the data that have already emerged and oversees the basic part of the predictive analytics. Of course, if you're eager to learn more details about what a data scientist does and how their job compares to other career paths in the data science field, read our ultimate guide on how to start a career in data science.

Machine Learning and Data Science

Machine learning is the state-of-the-art approach to data science. And rightly so.

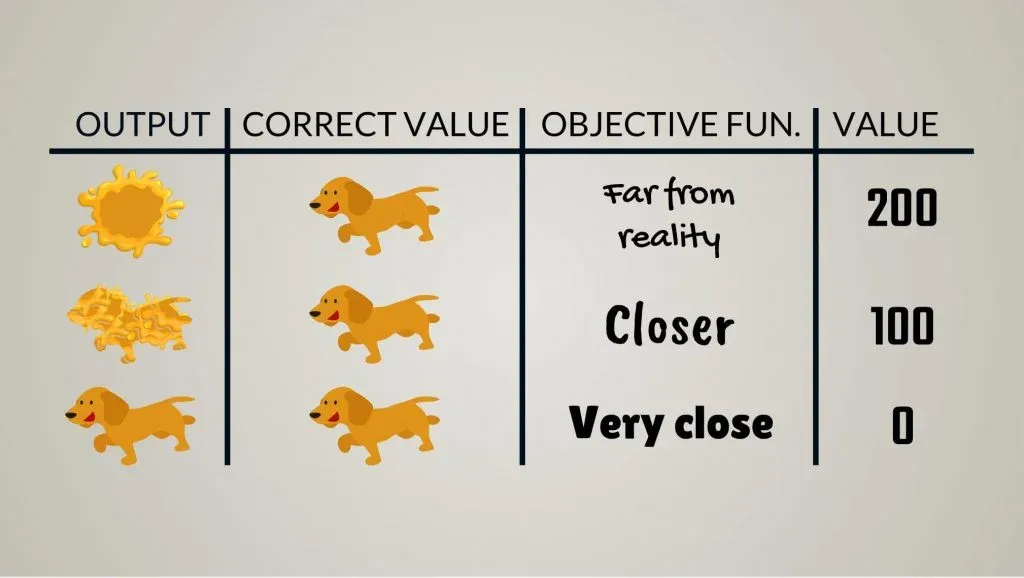

The main advantage machine learning has over any of the traditional data science techniques is the fact that at its core resides the algorithm: the directions a computer uses to find a model that fits the data as well as possible. The difference between machine learning and traditional data science methods is that we do not give the computer instructions on how to find the model; it takes the algorithm and uses its directions to learn on its own how to find said model. Unlike traditional data science, machine learning needs little human involvement. In fact, machine learning algorithms, especially deep learning ones, are so complicated that humans cannot genuinely understand what is happening “inside”.

What is machine learning in data science?

A machine learning algorithm is like a trial-and-error process, but the special thing about it is that each consecutive trial is, as a rule, at least as good as the previous one. Bear in mind that in order to learn well, the machine has to go through hundreds of thousands of trials, with the frequency of errors decreasing throughout.

Once the training is complete, the machine will be able to apply the complex computational model it has learned to novel data and still produce highly reliable predictions.

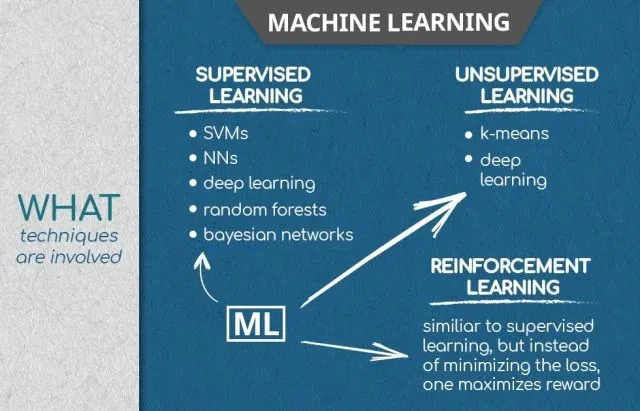

There are three major types of machine learning: supervised, unsupervised, and reinforcement learning.

Supervised learning

Supervised learning rests on using labeled data. The machine gets data that is associated with a correct answer; if the machine’s output does not match that correct answer, an optimization algorithm adjusts the computational process, and the computer does another trial. Bear in mind that, typically, the machine does this on 1000 data points at once.

Support vector machines, neural networks, deep learning, random forest models, and Bayesian networks are all commonly used for supervised learning.
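The "compare with the correct answer, then adjust" loop can be sketched with a perceptron, one of the simplest supervised learners. We picked it for brevity; it is not one of the methods listed above. The features, labels, and settings are hypothetical.

```python
def predict_one(weights, bias, features):
    """Output 1 if the weighted sum is positive, otherwise 0."""
    total = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, labels, epochs=20, learning_rate=1.0):
    """Adjust weights only when the prediction disagrees with the label."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for features, label in zip(samples, labels):
            error = label - predict_one(weights, bias, features)
            if error != 0:
                mistakes += 1
                weights = [w + learning_rate * error * x for w, x in zip(weights, features)]
                bias += learning_rate * error
        if mistakes == 0:  # every labeled example is now answered correctly
            break
    return weights, bias


# Hypothetical labeled data: (has_degree, has_experience) -> hired only if both are 1.
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 0, 0, 1]

weights, bias = train_perceptron(samples, labels)
predictions = [predict_one(weights, bias, s) for s in samples]
print(predictions)
```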

Unsupervised learning

When the data is too big, or the data scientist is under too much pressure for resources to label the data, or they do not know what the labels are at all, data science resorts to using unsupervised learning. This consists of giving the machine unlabeled data and asking it to extract insights from it. This often results in the data being divided in a certain way according to its properties. In other words, it is clustered.

Unsupervised learning is extremely effective for discovering patterns in data, especially things that humans using traditional analysis techniques would miss.

Data science often makes use of supervised and unsupervised learning together, with unsupervised learning labelling the data, and supervised learning finding the best model to fit the data. One instance of this is semi-supervised learning.

Reinforcement learning

This is a type of machine learning where the focus is on performance (to walk, to see, to read), instead of accuracy. Whenever the machine performs better than it has before, it receives a reward, but if it performs sub-optimally, the optimization algorithms do not adjust the computation. Think of a puppy learning commands. If it follows the command, it gets a treat; if it doesn’t follow the command, the treat doesn’t come. Because treats are tasty, the dog will gradually improve in following commands. That said, instead of minimizing an error, reinforcement learning maximizes a reward.
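The puppy analogy can be sketched as a tiny reward-maximizing loop. We assume an epsilon-greedy strategy: most of the time the "puppy" picks the action it currently values most, and occasionally it tries a random one. The action names, the exploration rate, and the number of episodes are made up.

```python
import random


def train_puppy(actions, rewarded_action, episodes=500, epsilon=0.2, seed=0):
    """Learn the average reward of each action by trial and reward."""
    rng = random.Random(seed)
    value = {action: 0.0 for action in actions}  # estimated reward per action
    count = {action: 0 for action in actions}    # how often each action was tried
    for _ in range(episodes):
        if rng.random() < epsilon:
            action = rng.choice(actions)          # explore: try something at random
        else:
            action = max(actions, key=value.get)  # exploit: best-known action
        reward = 1.0 if action == rewarded_action else 0.0  # the treat
        count[action] += 1
        value[action] += (reward - value[action]) / count[action]  # running mean
    return value, count


# The command is "sit"; only sitting earns a treat.
actions = ["bark", "roll over", "sit"]
value, count = train_puppy(actions, rewarded_action="sit")

best_action = max(value, key=value.get)
print(best_action, count)
```

Nothing in the loop measures an error against a correct answer; the behaviour improves only because rewarded actions become more valuable.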

Where is Machine Learning applied in the world of data science & business?

Fraud detection

With machine learning, specifically supervised learning, banks can take past data, label the transactions as legitimate, or fraudulent, and train models to detect fraudulent activity. When these models detect even the slightest probability of theft, they flag the transactions, and prevent the fraud in real time.

Client retention

With machine learning algorithms, corporate organizations can know which customers may purchase goods from them. This means the store can offer discounts and a ‘personal touch’ in an efficient way, minimizing marketing costs and maximizing profits. A couple of prominent names come to mind: Google, and Amazon.

Who uses machine learning in data science?

As mentioned above, the data scientist is deeply involved in designing machine learning algorithms, but there is another star on this stage.

The machine learning engineer. This is the specialist who is looking for ways to apply state-of-the-art computational models developed in the field of machine learning into solving complex problems such as business tasks, data science tasks, computer vision, self-driving cars, robotics, and so on.

Programming languages and Software in data science

Two main categories of tools are necessary to work with data and data science: programming languages and software.

Programming languages in data science

Knowing a programming language enables the data scientist to devise programs that can execute specific operations. The biggest advantage programming languages have is that we can reuse the programs created to execute the same action multiple times.

R, Python, and MATLAB, combined with SQL, cover most of the tools used when working with traditional data, BI, and conventional data science.

R and Python are the two most popular tools across all data science sub-disciplines. Their biggest advantage is that they can manipulate data and are integrated within multiple data and data science software platforms. They are not just suitable for mathematical and statistical computations; they are adaptable.

In fact, Python was deemed "the big Kahuna" of 2019 by IEEE (the world’s largest technical professional organization for the advancement of technology) and was listed at number 1 in its annual interactive ranking of the Top 10 Programming Languages. That said, if you want to learn everything about the most sought-after programming language, check out our all-encompassing Python Programming Guide.

SQL is king, however, when it comes to working with relational database management systems, because it was specifically created for that purpose.

SQL is at its most advantageous when working with traditional, historical data, for example when preparing a BI analysis.

MATLAB is the fourth most indispensable tool for data science. It is ideal for working with mathematical functions or matrix manipulations.

Big data in data science is handled with the help of R and Python, of course, but people working in this area are often proficient in other languages like Java or Scala. These two are very useful when combining data from multiple sources.

JavaScript, C, and C++, in addition to the ones mentioned above, are often employed when the branch of data science the specialist is working in involves machine learning. They are faster than R and Python and provide greater freedom.

Author's note: If you need to hone your programming skills, you can visit our Python tutorials and SQL tutorials.

Software in data science

In data science, software, or software solutions, are tools adjusted for specific business needs.

Excel is a tool applicable to more than one category—traditional data, BI, and Data Science. Similarly, SPSS is a very famous tool for working with traditional data and applying statistical analysis.

Apache Hadoop, Apache HBase, and MongoDB, on the other hand, are software designed for working with big data.

Power BI, SAS, Qlik, and especially Tableau are top-notch examples of software designed for business intelligence visualizations.

In terms of predictive analytics, EViews is mostly used for working with econometric time-series models, and Stata—for academic statistical and econometric research, where techniques like regression, cluster, and factor analysis are constantly applied.

This is Data Science

Data science is a slippery term that encompasses everything from handling data – traditional or big – to explaining patterns and predicting behavior. Data science is done through traditional methods like regression and cluster analysis or through unorthodox machine learning techniques.

It is a vast field, and we hope you are one step closer to understanding how all-encompassing and intertwined with human life it is.

Ready to take the first step toward a career in data science?

Whether you are a complete beginner or a seasoned professional, you can check out our Data Scientist Career Track where you'll find valuable information on the latest trends in the field.